Overview

ReaderLM-v2 is a 1.5B-parameter language model that converts raw HTML into Markdown or JSON. It handles up to 512K tokens of combined input and output and supports 29 languages. Unlike its predecessor, which treated HTML-to-Markdown conversion as a 'selective-copy' task, v2 approaches it as a translation process, which lets it handle complex elements such as code fences, nested lists, tables, and LaTeX equations more reliably. The model maintains consistent performance across varying context lengths and adds direct HTML-to-JSON generation with predefined schemas.

Methods

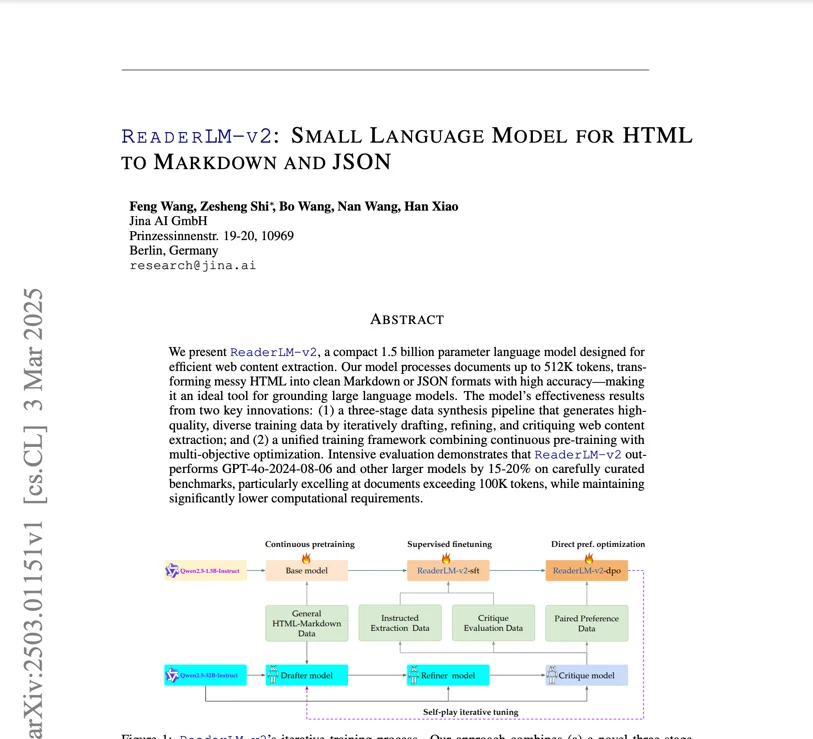

Built on Qwen2.5-1.5B-Instruct, ReaderLM-v2 was trained on html-markdown-1m, a dataset of one million HTML documents averaging 56,000 tokens each. Training had four stages: 1) long-context pretraining with ring-zag attention and RoPE to extend the context from 32K to 256K tokens, 2) supervised fine-tuning on refined datasets, 3) direct preference optimization for output alignment, and 4) self-play reinforcement tuning. Training data was prepared with a three-step pipeline (Draft-Refine-Critique) powered by Qwen2.5-32B-Instruct. Specialized models were trained for specific tasks and then merged via linear parameter interpolation.
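The merging step mentioned above can be illustrated with a minimal sketch of linear parameter interpolation. It assumes each specialized checkpoint is a mapping from parameter names to flat lists of floats with identical names and shapes; the function name `interpolate`, the toy checkpoints, and the equal weights are hypothetical and are not taken from the ReaderLM-v2 training code.

```python
from typing import Dict, List

# Toy stand-in for a checkpoint: parameter name -> flat list of values.
Params = Dict[str, List[float]]


def interpolate(checkpoints: List[Params], weights: List[float]) -> Params:
    """Return the weighted average of checkpoints that share names and shapes."""
    if not checkpoints or len(checkpoints) != len(weights):
        raise ValueError("need one weight per checkpoint")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize so the merged parameters stay on the same scale.
    norm = [w / total for w in weights]

    merged: Params = {}
    for name, reference in checkpoints[0].items():
        merged[name] = [
            sum(w * ckpt[name][i] for w, ckpt in zip(norm, checkpoints))
            for i in range(len(reference))
        ]
    return merged


# Hypothetical task-specific checkpoints (illustrative values only).
markdown_expert = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
json_expert = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}

merged = interpolate([markdown_expert, json_expert], [0.5, 0.5])
print(merged)
```

With real models the same averaging would be applied tensor by tensor over the state dictionaries, and the interpolation weights would be chosen by evaluating the merged model on each task.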

Performance

In the reported benchmarks, ReaderLM-v2 outperforms larger models such as Qwen2.5-32B-Instruct and Gemini2-flash-expr on HTML-to-Markdown tasks. For main content extraction, it achieves a ROUGE-L of 0.84, a Jaro-Winkler similarity of 0.82, and a Levenshtein distance of 0.22, which is lower than that of the compared models. On HTML-to-JSON tasks, it remains competitive, with an F1 score of 0.81 and a 98% pass rate. The model processes 67 tokens/s of input and 36 tokens/s of output on a T4 GPU, and contrastive loss training substantially reduces degeneration issues.
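To make two of these metrics concrete, here is a minimal sketch of a normalized Levenshtein distance (lower is better) and a word-level ROUGE-L F1 score (higher is better). It assumes normalization by the longer string's length and whitespace tokenization; the benchmark's exact normalization and tokenization are not stated here, so its numbers may be computed differently.

```python
def levenshtein(a: str, b: str) -> int:
    """Character-level edit distance using a rolling dynamic-programming row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def normalized_levenshtein(a: str, b: str) -> float:
    """Edit distance divided by the longer length, giving a value in [0, 1]."""
    longest = max(len(a), len(b))
    return levenshtein(a, b) / longest if longest else 0.0


def rouge_l_f1(reference: str, candidate: str) -> float:
    """F1 score based on the longest common subsequence of whitespace tokens."""
    ref, cand = reference.split(), candidate.split()
    if not ref or not cand:
        return 0.0
    prev = [0] * (len(cand) + 1)
    for r in ref:
        curr = [0]
        for j, c in enumerate(cand, start=1):
            curr.append(prev[j - 1] + 1 if r == c else max(prev[j], curr[j - 1]))
        prev = curr
    lcs = prev[-1]
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


reference_md = "# Title\n\nHello world from the page"
model_md = "# Title\n\nHello world from page"
print(normalized_levenshtein(reference_md, model_md))
print(rouge_l_f1(reference_md, model_md))
```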

Best Practice

The model is accessible through a Google Colab notebook that demonstrates HTML-to-Markdown conversion, JSON extraction, and instruction-following. For HTML-to-Markdown tasks, users can input raw HTML without any prefix instruction, while JSON extraction requires the schema to be supplied in a specific format. The create_prompt helper function builds prompts for both tasks. The model runs on Colab's free T4 GPU tier, which requires vllm and triton, but the T4 is limited because it supports neither bfloat16 nor flash attention 2. An RTX 3090 or 4090 is recommended for production use. The model will be available on the AWS SageMaker, Azure, and GCP marketplaces and is licensed under CC BY-NC 4.0 for non-commercial use.
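The two prompt shapes described above can be sketched as follows. This is not the notebook's create_prompt helper: the function name `build_messages`, the prompt wording, and the fencing of the HTML and schema are assumptions made for illustration, and the resulting messages would still need to go through the model's chat template before generation.

```python
import json
from typing import Dict, List, Optional

FENCE = "`" * 3  # built programmatically so this example nests cleanly


def build_messages(html: str,
                   schema: Optional[dict] = None,
                   instruction: Optional[str] = None) -> List[Dict[str, str]]:
    """Build a single-turn chat message list (hypothetical helper).

    With no schema and no instruction, the raw HTML is sent as-is, which
    matches the HTML-to-Markdown usage described in the text.
    """
    if schema is None and instruction is None:
        content = html
    else:
        parts = []
        if instruction:
            parts.append(instruction)
        parts.append(f"{FENCE}html\n{html}\n{FENCE}")
        if schema is not None:
            # Assumed wording; the notebook's actual phrasing may differ.
            parts.append("The JSON schema is as follows:")
            parts.append(f"{FENCE}json\n{json.dumps(schema, indent=2)}\n{FENCE}")
        content = "\n".join(parts)
    return [{"role": "user", "content": content}]


html = "<html><body><h1>Title</h1><p>Hello</p></body></html>"
schema = {"type": "object", "properties": {"title": {"type": "string"}}}

markdown_messages = build_messages(html)
json_messages = build_messages(html, schema=schema,
                               instruction="Extract the title as JSON.")
print(json_messages[0]["content"])
```

Keeping the schema as a Python dict and serializing it with `json.dumps` avoids hand-written JSON errors in the prompt.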
