folder_special

Featured

school

Academic Publications

February 17, 2026

jina-embeddings-v5-text: Task-Targeted Embedding Distillation

February 11, 2026

Embedding Inversion via Conditional Masked Diffusion Language Models

January 22, 2026

Embedding Compression via Spherical Coordinates

December 29, 2025

Vision Encoders in Vision-Language Models: A Survey

December 04, 2025

Jina-VLM: Small Multilingual Vision Language Model

AAAI 2026

October 01, 2025

jina-reranker-v3: Last but Not Late Interaction for Document Reranking

NeurIPS 2025

August 31, 2025

Efficient Code Embeddings from Code Generation Models

EMNLP 2025

June 24, 2025

jina-embeddings-v4: Universal Embeddings for Multimodal Multilingual Retrieval

ICLR 2025

March 04, 2025

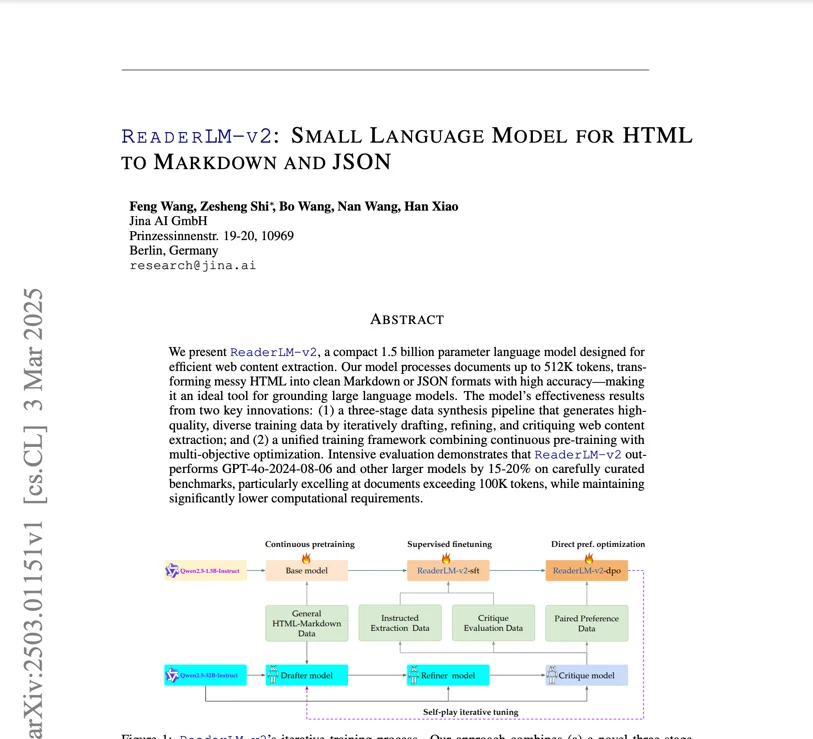

ReaderLM-v2: Small Language Model for HTML to Markdown and JSON

ACL 2025

December 17, 2024

AIR-Bench: Automated Heterogeneous Information Retrieval Benchmark

ICLR 2025

December 12, 2024

jina-clip-v2: Multilingual Multimodal Embeddings for Text and Images

ECIR 2025

September 18, 2024

jina-embeddings-v3: Multilingual Embeddings With Task LoRA

SIGIR 2025

September 07, 2024

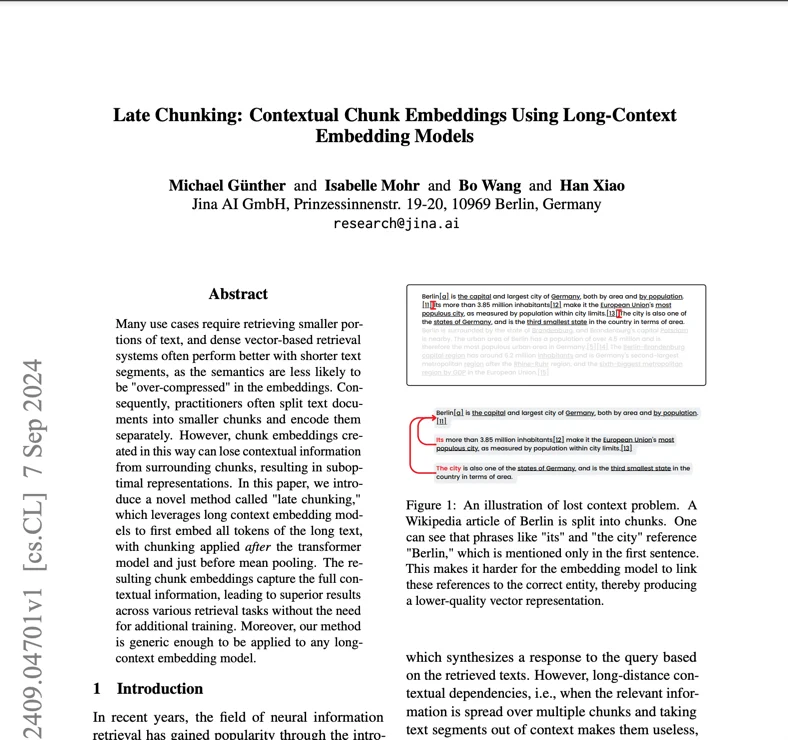

Late Chunking: Contextual Chunk Embeddings Using Long-Context Embedding Models

EMNLP 2024

August 30, 2024

Jina-ColBERT-v2: A General-Purpose Multilingual Late Interaction Retriever

WWW 2025

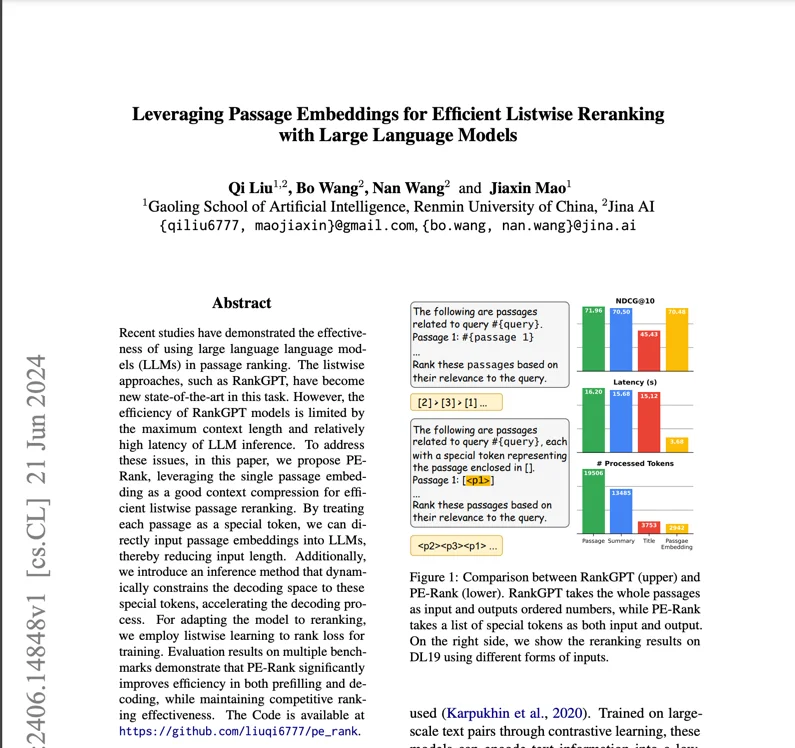

June 21, 2024

Leveraging Passage Embeddings for Efficient Listwise Reranking with Large Language Models

ICML 2024

May 30, 2024

Jina CLIP: Your CLIP Model Is Also Your Text Retriever

February 26, 2024

Multi-Task Contrastive Learning for 8192-Token Bilingual Text Embeddings

October 30, 2023

Jina Embeddings 2: 8192-Token General-Purpose Text Embeddings for Long Documents

EMNLP 2023

July 20, 2023

Jina Embeddings: A Novel Set of High-Performance Sentence Embedding Models

19 publications in total.

folder_special

Featured

school

Academic

All

Press release

Tech blog

Event

Opinion

February 19, 2026 • 7 minutes read

jina-embeddings-v5-text: New SOTA Small Multilingual Embeddings

Two sub-1B multilingual embeddings with best-in-class performance, available on Elastic Inference Service, Llama.cpp and MLX.

February 17, 2026

jina-embeddings-v5-text: Task-Targeted Embedding Distillation

Text embedding models are widely used for semantic similarity tasks, including information retrieval, clustering, and classification. General-purpose models are typically trained with single- or multi-stage processes using contrastive loss functions. We introduce a novel training regimen that combines model distillation techniques with task-specific contrastive loss to produce compact, high-performance embedding models. Our findings suggest that this approach is more effective for training small models than purely contrastive or distillation-based training paradigms alone. Benchmark scores for the resulting models, jina-embeddings-v5-text-small and jina-embeddings-v5-text-nano, exceed or match the state-of-the-art for models of similar size. jina-embeddings-v5-text models additionally support long texts (up to 32k tokens) in many languages, and generate embeddings that remain robust under truncation and binary quantization. Model weights are publicly available, hopefully inspiring further advances in embedding model development.

February 11, 2026

Embedding Inversion via Conditional Masked Diffusion Language Models

We frame embedding inversion as conditional masked diffusion, recovering all tokens in parallel through iterative denoising rather than sequential autoregressive generation. A masked diffusion language model is conditioned on the target embedding via adaptive layer normalization, requiring only 8 forward passes through a 78M parameter model with no access to the target encoder. On 32-token sequences across three embedding models, the method achieves 81.3% token accuracy and 0.87 cosine similarity.

January 22, 2026

Embedding Compression via Spherical Coordinates

We present a compression method for unit-norm embeddings that achieves 1.5x compression, 25% better than the best prior lossless method. The method exploits that spherical coordinates of high-dimensional unit vectors concentrate around pi/2, causing IEEE 754 exponents to collapse to a single value and high-order mantissa bits to become predictable, enabling entropy coding of both. Reconstruction error is below 1e-7, under float32 machine epsilon. Evaluation across 26 configurations spanning text, image, and multi-vector embeddings confirms consistent improvement. The method requires no training.

December 29, 2025

Vision Encoders in Vision-Language Models: A Survey

Vision encoders have remained comparatively small while language models scaled from billions to hundreds of billions of parameters. This survey analyzes vision encoders across 70+ vision-language models from 2023–2025 and finds that training methodology matters more than encoder size: improvements in loss functions, data curation, and feature objectives yield larger gains than scaling by an order of magnitude. Native resolution handling improves document understanding, and multi-encoder fusion captures complementary features no single encoder provides. We organize encoders into contrastive, self-supervised, and LLM-aligned families, providing a taxonomy and practical selection guidance for encoder design and deployment.

December 04, 2025 • 7 minutes read

Jina-VLM: Small Multilingual Vision Language Model

New 2B vision language model achieves SOTA on multilingual VQA, no catastrophic forgetting on text-only tasks.

December 04, 2025

Jina-VLM: Small Multilingual Vision Language Model

We present jina-vlm, a 2.4B parameter vision-language model that achieves state-of-the-art multilingual visual question answering among open 2B-scale VLMs. The model couples a SigLIP2 vision encoder with a Qwen3 language backbone through an attention-pooling connector that enables token-efficient processing of arbitrary-resolution images. Across standard VQA benchmarks and multilingual evaluations, jina-vlm achieves leading results while preserving competitive text-only performance. Model weights and code are publicly released.

October 03, 2025 • 7 minutes read

Jina Reranker v3: 0.6B Listwise Reranker for SOTA Multilingual Retrieval

New 0.6B-parameter listwise reranker that considers the query and all candidate documents in a single context window.

October 01, 2025

jina-reranker-v3: Last but Not Late Interaction for Document Reranking

jina-reranker-v3 is a 0.6B parameter multilingual document reranker that introduces a novel last but not late interaction. Unlike late interaction models such as ColBERT that perform separate encoding followed by multi-vector matching, our approach conducts causal self-attention between query and documents within the same context window, enabling rich cross-document interactions before extracting contextual embeddings from the last token of each document. This compact architecture achieves state-of-the-art BEIR performance with 61.94 nDCG@10 while being significant smaller than generative listwise rerankers.

AAAI 2026

September 30, 2025 • 8 minutes read

Embeddings Are AI’s Red-Headed Stepchild

Embedding models aren't the most glamorous aspect of the AI industry, but image generators and chatbots couldn't exist without them.