Overview

Jina Embeddings v3 is a multilingual text embedding model for text understanding and retrieval across languages. It addresses the challenge of maintaining high performance across multiple languages and tasks while keeping computational requirements manageable. The model is particularly well suited to production environments where efficiency matters: it achieves state-of-the-art performance with just 570M parameters, making it accessible to teams that can't afford the computational overhead of larger models. Organizations that need to build scalable, multilingual search systems or analyze content across language barriers will find this model especially valuable.

Methods

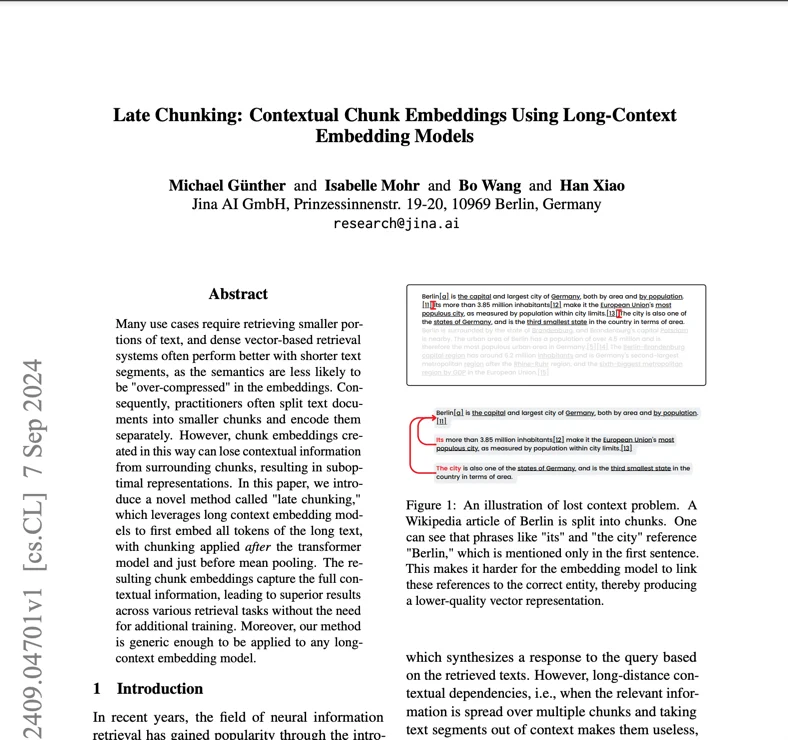

The model's architecture builds on a jina-XLM-RoBERTa backbone with 24 layers, enhanced with task-specific Low-Rank Adaptation (LoRA) adapters. LoRA adapters are small neural network components that optimize the model for different tasks such as retrieval, classification, or clustering without significantly increasing the parameter count: they add less than 3% to the total parameters. The model incorporates Matryoshka Representation Learning (MRL), which allows embeddings to be reduced from 1024 to as few as 32 dimensions while preserving most of the performance. Training involved a three-stage process: initial pre-training on multilingual text from 89 languages, fine-tuning on paired texts for embedding quality, and specialized adapter training for task optimization. The model supports context lengths up to 8,192 tokens through Rotary Position Embeddings (RoPE), with a base frequency adjustment technique that improves performance on both short and long texts.
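The MRL dimension reduction described above can be illustrated with a minimal sketch. It assumes that reducing an embedding means keeping its leading components and re-normalizing to unit length, which is the usual way Matryoshka-style embeddings are shortened. The function names `truncate_embedding` and `cosine` are hypothetical helpers, not part of any Jina library, and the short vectors stand in for real 1024-dimensional embeddings.

```python
import math


def truncate_embedding(vec, dim):
    """Keep the first `dim` components of `vec` and L2-normalize the result.

    Hypothetical helper: assumes a Matryoshka-style embedding whose
    leading components carry the most information.
    """
    if not 1 <= dim <= len(vec):
        raise ValueError(f"dim must be between 1 and {len(vec)}, got {dim}")
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        # An all-zero prefix cannot be normalized; return it unchanged.
        return list(head)
    return [x / norm for x in head]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 8-dimensional vectors standing in for full-size embeddings.
doc = [0.9, 0.3, 0.2, 0.1, 0.05, 0.02, 0.01, 0.01]
query = [0.8, 0.4, 0.1, 0.2, 0.01, 0.03, 0.02, 0.01]

# Compare similarity at full size and after truncating to 4 dimensions.
full_similarity = cosine(doc, query)
short_similarity = cosine(truncate_embedding(doc, 4), truncate_embedding(query, 4))
```

Comparing `full_similarity` with `short_similarity` on a held-out set of real query and document pairs is one way to decide how far the dimension can be reduced for a given application.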

Performance

The model demonstrates a strong efficiency-to-performance ratio in real-world testing, outperforming both open-source alternatives and proprietary solutions from OpenAI and Cohere on English tasks while excelling in multilingual scenarios. Notably, it achieves better results than e5-mistral-7b-instruct, which has about 12 times as many parameters. In MTEB English benchmark evaluations, it achieves an average score of 65.52 across all tasks, with particularly strong performance in Classification Accuracy (82.58) and Semantic Textual Similarity (85.80). The model maintains consistent performance across languages, scoring 64.44 on multilingual tasks. When using MRL for dimension reduction, it retains strong performance even at lower dimensions: for example, it maintains 92% of its retrieval performance at 64 dimensions compared to the full 1024 dimensions.

Best Practice

To deploy Jina Embeddings v3 effectively, teams should select the task adapter that matches their use case: retrieval.query and retrieval.passage for search applications, separation for clustering tasks, classification for categorization, and text-matching for semantic similarity. The model requires CUDA-capable hardware for optimal performance, though its efficient architecture means it needs significantly less GPU memory than larger alternatives. For production deployment, AWS SageMaker integration provides a streamlined path to scalability. The model excels in multilingual applications but may require additional evaluation for low-resource languages. While it supports long documents up to 8,192 tokens, very long texts perform best with the late chunking feature. Teams should avoid using the model for tasks requiring real-time generation or complex reasoning; it is designed for embedding and retrieval, not text generation or direct question answering.
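The adapter choice above can be captured in a small lookup, sketched here as an illustration. The task names are the ones listed in this section; the use-case labels on the left and the `select_task` helper are hypothetical names chosen for this example, not part of the model's interface. The returned string is meant to be passed as the task setting of whichever client or library is used to call the model.

```python
# Task names are taken from the text above; the use-case keys are
# hypothetical labels introduced only for this example.
TASK_BY_USE_CASE = {
    "search_query": "retrieval.query",       # embedding the user's query
    "search_document": "retrieval.passage",  # embedding indexed passages
    "clustering": "separation",
    "classification": "classification",
    "semantic_similarity": "text-matching",
}


def select_task(use_case: str) -> str:
    """Return the adapter task name for a use case, or raise ValueError."""
    try:
        return TASK_BY_USE_CASE[use_case]
    except KeyError:
        known = ", ".join(sorted(TASK_BY_USE_CASE))
        raise ValueError(
            f"unknown use case {use_case!r}; expected one of: {known}"
        ) from None


# In a search system, queries and documents use different adapters.
query_task = select_task("search_query")
document_task = select_task("search_document")
```

Keeping the query and passage adapters as separate entries makes the asymmetry of search explicit: the same text would be embedded differently depending on whether it is the query or the indexed content.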
