AgentChain uses Large Language Models (LLMs) to plan and orchestrate multiple Agents or Large Models (LMs) that together accomplish sophisticated tasks. AgentChain is fully multimodal: it accepts text, image, audio, and tabular data as input and output.

- 🧠 LLMs as the brain: AgentChain leverages state-of-the-art Large Language Models to provide users with the ability to plan and make decisions based on natural language inputs. This feature makes AgentChain a versatile tool for a wide range of applications, such as task execution given natural language instructions, data understanding, and data generation.

- 🌟 Fully Multimodal IO: AgentChain is fully multimodal, accepting input and producing output in various modalities, such as text, image, audio, or video (coming soon). This makes it suitable for applications such as computer vision, speech recognition, and transitioning from one modality to another.

- 🤝 Orchestrate Versatile Agents: AgentChain can orchestrate multiple agents to perform complex tasks. Using composability and hierarchical structuring of tools, AgentChain can intelligently choose which tools to use, and when, for a given task. This feature makes AgentChain a powerful tool for projects that require a complex combination of tools.

- 🔧 Customizable for Ad-hoc Needs: AgentChain can be customized to fit specific project requirements. Specific requirements can be met by enhancing its capabilities with new agents (a distributed architecture is coming soon).
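
The orchestration idea in the list above can be illustrated with a minimal sketch. Everything here is hypothetical and not taken from the AgentChain codebase: the `Tool` class, the `keyword_planner` function (a trivial stand-in for the LLM planner), and the two toy tools exist only to show the plan-then-execute flow.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    """Hypothetical tool wrapper: a name, a description for the planner, and a callable."""
    name: str
    description: str
    run: Callable[[str], str]


def keyword_planner(task: str, tools: Dict[str, Tool]) -> List[str]:
    """Stand-in for the LLM planner: selects tools whose name appears in the task.

    A real planner would prompt an LLM with the tool descriptions instead.
    """
    lowered = task.lower()
    return [name for name in tools if name in lowered]


def orchestrate(task: str, tools: Dict[str, Tool]) -> str:
    """Run the planned tools in order, feeding each output into the next tool."""
    result = task
    for name in keyword_planner(task, tools):
        result = tools[name].run(result)
    return result


# Toy tools (assumptions for illustration only).
tools = {
    "search": Tool("search", "Look up facts", lambda text: f"facts({text})"),
    "summarize": Tool("summarize", "Shorten text", lambda text: f"summary({text})"),
}

print(orchestrate("search then summarize the news", tools))
```

Swapping `keyword_planner` for a function that asks an LLM to choose among the tool descriptions would keep the rest of the loop unchanged, which is the main point of separating planning from execution.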

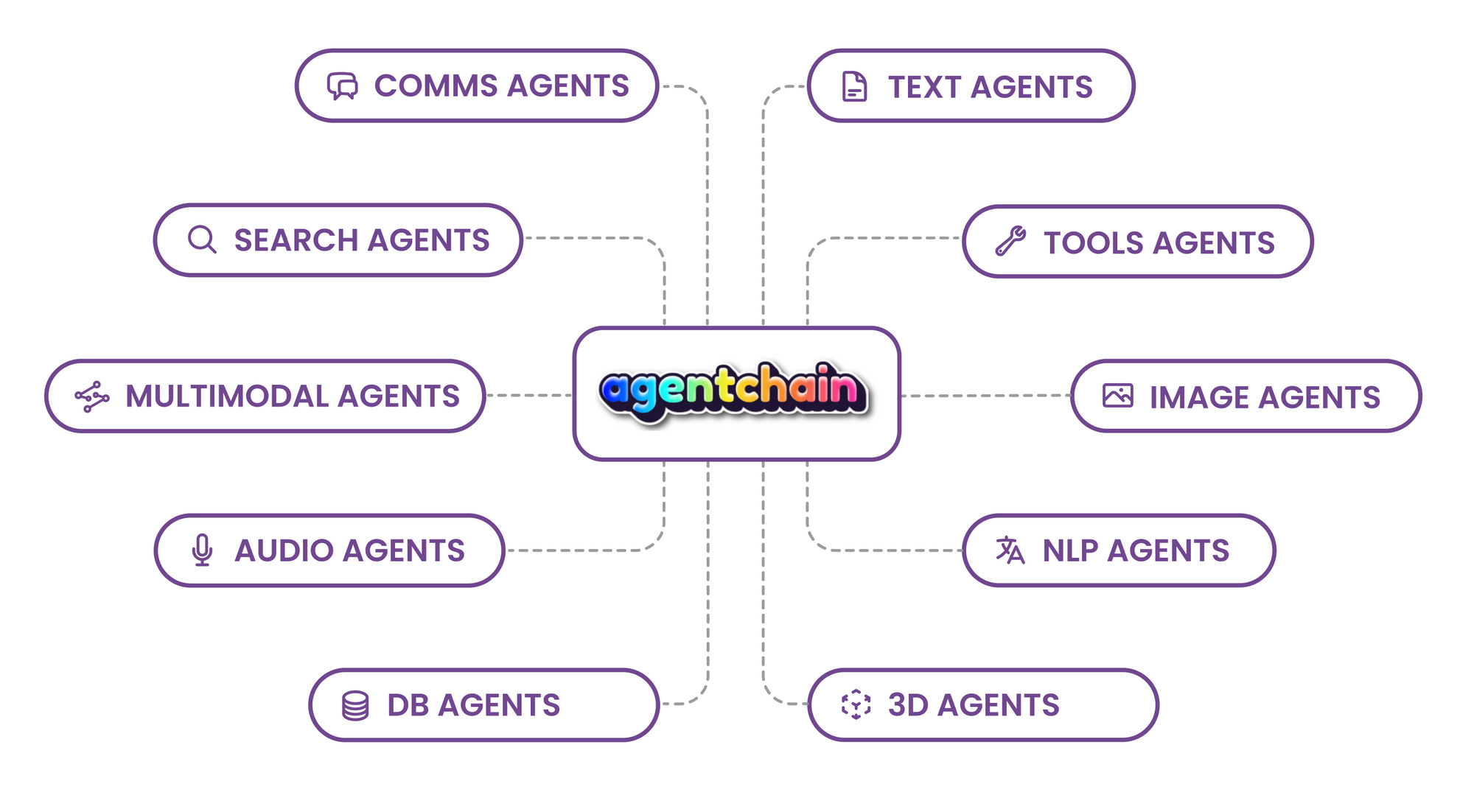

Agents in AgentChain

AgentChain is a sophisticated system with the goal of solving general problems. It can orchestrate multiple agents to accomplish sub-problems. These agents are organized into different groups, each with their unique set of capabilities and functionalities. Here are some of the agent groups in AgentChain:

SearchAgents

The SearchAgents group is responsible for gathering information from various sources, including search engines, online databases, and APIs. The agents in this group are highly skilled at retrieving up-to-date world knowledge and information. Some examples of agents in this group include the Google Search API, Bing API, Wikipedia API, and Serp.

CommsAgents

The CommsAgents group is responsible for handling communication between different parties, such as sending emails, making phone calls, or messaging via various platforms. The agents in this group can integrate with a wide range of platforms. Some examples of agents in this group include TwilioCaller, TwilioEmailWriter, TwilioMessenger and Slack.

ToolsAgents

The ToolsAgents group is responsible for computational tasks, such as performing calculations, running scripts, or executing commands. The agents in this group can work with a wide range of programming languages and tools. Some examples of agents in this group include Math, Python REPL, and Terminal.

MultiModalAgents

The MultiModalAgents group is responsible for handling input and output from various modalities, such as text, image, audio, or video (coming soon). The agents in this group can process and understand different modalities. Some examples of agents in this group include OpenAI Whisper, Blip2, Coqui, and StableDiffusion.

ImageAgents

The ImageAgents group is responsible for processing and manipulating images, such as enhancing image quality, object detection, or image recognition. The agents in this group can perform complex operations on images. Some examples of agents in this group include Upscaler, ControlNet, and YOLO.

DBAgents

The DBAgents group is responsible for adding data to and fetching data from your database, such as getting metrics or aggregations. The agents in this group interact with databases and enrich other agents with your database information. Some examples of agents in this group include SQL, MongoDB, ElasticSearch, Qdrant, and Notion.
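
One way to picture the grouping described above is a plain registry that maps each group to its example agents. This is a sketch only: the group and agent names come from the descriptions above (not exhaustive), while the `AGENT_GROUPS` dictionary and the `group_of` helper are hypothetical and do not reflect how AgentChain stores its agents internally.

```python
from typing import Dict, List, Optional

# Names copied from the group descriptions; the layout itself is an assumption.
AGENT_GROUPS: Dict[str, List[str]] = {
    "SearchAgents": ["Google Search API", "Bing API", "Wikipedia API"],
    "CommsAgents": ["TwilioCaller", "TwilioEmailWriter", "TwilioMessenger", "Slack"],
    "ToolsAgents": ["Math", "Python REPL", "Terminal"],
    "MultiModalAgents": ["OpenAI Whisper", "Blip2", "Coqui", "StableDiffusion"],
    "ImageAgents": ["Upscaler", "ControlNet", "YOLO"],
    "DBAgents": ["SQL", "MongoDB", "ElasticSearch", "Notion"],
}


def group_of(agent_name: str) -> Optional[str]:
    """Return the group that lists the given agent, or None if it is unknown."""
    for group, agents in AGENT_GROUPS.items():
        if agent_name in agents:
            return group
    return None


print(group_of("YOLO"))
print(group_of("Terminal"))
```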

Get started

- Clone the AgentChain repo

- Install requirements: `pip install -r requirements.txt`

- Download model checkpoints: `bash download.sh`

- Depending on the agents you need in place, make sure to export environment variables:

      OPENAI_API_KEY={YOUR_OPENAI_API_KEY} # mandatory since the LLM is central in this application
      SERPAPI_API_KEY={YOUR_SERPAPI_API_KEY} # make sure to include a serp API key in case you need the agent to be able to search the web
      # These environment variables are needed in case you want the agent to be able to make phone calls
      AWS_ACCESS_KEY_ID={YOUR_AWS_ACCESS_KEY_ID}
      AWS_SECRET_ACCESS_KEY={YOUR_AWS_SECRET_ACCESS_KEY}
      TWILIO_ACCOUNT_SID={YOUR_TWILIO_ACCOUNT_SID}
      TWILIO_AUTH_TOKEN={YOUR_TWILIO_AUTH_TOKEN}
      AWS_S3_BUCKET_NAME={YOUR_AWS_S3_BUCKET_NAME} # make sure to create an S3 bucket with public access

- Install the `ffmpeg` library (needed for Whisper): `sudo apt update && sudo apt install ffmpeg` (Ubuntu command)

- Run the main script: `python main.py`
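
Before running the main script, it can help to check which environment variables are still unset. The following is a small standalone sketch, not part of the AgentChain repo: the `REQUIRED_VARS` grouping and the `missing_vars` helper are hypothetical, and the mapping of variables to features (including placing `AWS_S3_BUCKET_NAME` under phone calls) is an assumption based on the comments in the list above.

```python
import os
from typing import Dict, List, Mapping, Optional

# Feature-to-variable grouping is an assumption inferred from the setup comments.
REQUIRED_VARS: Dict[str, List[str]] = {
    "llm": ["OPENAI_API_KEY"],
    "web_search": ["SERPAPI_API_KEY"],
    "phone_calls": [
        "AWS_ACCESS_KEY_ID",
        "AWS_SECRET_ACCESS_KEY",
        "TWILIO_ACCOUNT_SID",
        "TWILIO_AUTH_TOKEN",
        "AWS_S3_BUCKET_NAME",
    ],
}


def missing_vars(env: Optional[Mapping[str, str]] = None) -> Dict[str, List[str]]:
    """Return, per feature, the variables that are unset or empty in `env`."""
    if env is None:
        env = os.environ
    return {
        feature: [name for name in names if not env.get(name)]
        for feature, names in REQUIRED_VARS.items()
    }


if __name__ == "__main__":
    for feature, missing in missing_vars().items():
        status = "ready" if not missing else "missing " + ", ".join(missing)
        print(f"{feature}: {status}")
```

Only the `llm` entry is mandatory according to the setup notes; the other features can stay unconfigured if the corresponding agents are not needed.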