In the ever-evolving landscape of Large Language Models (LLMs), OpenAI's unveiling of fine-tuning for GPT-3.5 Turbo stands out as a noteworthy milestone. This advancement holds out the tantalizing prospect of tailoring a powerful general-purpose model to the nuances of a specific task. Yet, as is often the case with groundbreaking innovations, a closer look offers a more measured picture.

Comparing Prompt Engineering vs. Fine-Tuning

Before venturing deeper, let's acquaint ourselves with these two trending players in the LLM arena: prompt engineering and fine-tuning.

Imagine the colossal GPT-3.5 Turbo as a grand piano with a vast keyboard. Prompt engineering is akin to a pianist mastering those keys to elicit the desired tunes. With just the right prompts (the questions or statements you feed into the LLM), you can draw on the full range of the model's knowledge without changing the model itself. This approach is nimble, adaptable, and remarkably effective. For those looking to perfect the craft, tools like PromptPerfect help bring user intent and AI response into harmony.
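As a rough illustration of what "the right prompts" can look like in practice, the sketch below assembles a chat-style prompt from a system instruction, one worked example, and the user's question. The `build_prompt` helper and the sample text are illustrative assumptions, not something prescribed by any particular tool.

```python
def build_prompt(instruction, examples, question):
    """Assemble chat-style messages: system instruction, few-shot examples, then the question."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, assistant_text in examples:
        # Each example is a (user, assistant) pair showing the behavior we want.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages


messages = build_prompt(
    instruction="You are a concise support agent. Answer in two sentences or fewer.",
    examples=[("Where is my order?", "Could you share your order number so I can check?")],
    question="How do I return a damaged item?",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Changing the model's behavior here means editing the instruction or the examples and trying again, which is why iteration is cheap.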

On the other hand, fine-tuning is a more profound process. If prompt engineering is about mastering the keys, fine-tuning is about recalibrating the piano itself. It allows for a deeper, more tailored customization, aligning the model's behavior meticulously with specific datasets.
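To make "specific datasets" concrete: OpenAI's fine-tuning for GPT-3.5 Turbo takes training examples as JSON Lines, one chat conversation per line under a `messages` key. The sketch below builds two such lines; the conversations and the `to_jsonl` helper are made up for illustration, and a real training set would need many more examples.

```python
import json


def to_jsonl(conversations):
    """Serialize each conversation as one JSON object per line."""
    return "\n".join(
        json.dumps({"messages": conv}, ensure_ascii=False) for conv in conversations
    )


# Hypothetical training conversations: system prompt, user turn, ideal assistant reply.
conversations = [
    [
        {"role": "system", "content": "You are a friendly retail assistant."},
        {"role": "user", "content": "Do you ship internationally?"},
        {"role": "assistant", "content": "Yes, we ship to most countries. Where should we send it?"},
    ],
    [
        {"role": "system", "content": "You are a friendly retail assistant."},
        {"role": "user", "content": "Can I change my delivery address?"},
        {"role": "assistant", "content": "Of course. Please share your order number and the new address."},
    ],
]

training_file = to_jsonl(conversations)
print(training_file)
```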

Fine-Tuned Language: Beyond The Machine's Syntax

At their core, LLMs like GPT-4 have astounded us with their capabilities. Their prose, though often coherent, has been criticized for lacking the nuanced, idiomatic quality inherent to human language. Now, fine-tuning attempts to blend the machine's efficiency with a touch of human-like idiosyncrasy.

OpenAI's fine-tuning promises to take these models a step further into the realm of nuanced understanding. Early tests suggest that a fine-tuned GPT-3.5 Turbo can stand shoulder-to-shoulder with the mightier GPT-4 in specific, narrow domains. Such precision could move AI beyond mere transactional interactions, toward a more collaborative partner that displays comprehension, imagination, and even a semblance of empathy.

The True Potential: Bridging Gaps and Deepening Connections

To truly grasp this, let's delve into a few envisioned applications:

- Medical Chatbots: Picture a chatbot, equipped not just with medical facts but with genuine warmth. Trained on medical research, patient experiences, and counseling techniques, these AI companions could stand as pillars of support for patients, empathetically navigating their concerns and emotions. With inputs from medical journals, patient forums, and consultation transcripts, they could potentially merge hard medical knowledge with compassionate human wisdom.

- Writing Assistants: The dreaded writer’s block could find its match in an AI muse, tailored to resonate with an author's unique style and influences. Using fine-tuning, one could create an AI writing companion infused with the essence of literary greats, from Vonnegut to Woolf. These would be more than mere tools; they'd be partners in the creative process, guiding writers into realms of unbridled creativity.

- Farsi-speaking Retail Chatbots: In the world of e-commerce, language should not be a barrier. For Iranian businesses, fine-tuned chatbots equipped with product knowledge and a nuanced understanding of Farsi linguistics could bridge cultural and linguistic divides, ensuring every customer feels at home.

This exploration paints a vivid picture. Fine-tuning has the potential to infuse AI systems with curated human wisdom, elevating their capacities to comfort, inspire, and connect. As we progress, ensuring these models reflect our higher values could lead to an AI that appreciates the myriad hues of human experience, transcending its binary origins.

Yet, with promise comes price.

And this is where we find ourselves at a crossroads. While the allure of deep customization through fine-tuning is undeniable, the economic implications are equally compelling.

The Realities of the Fine-Tuning Wallet

Now, the brass tacks. While the siren song of customization is tempting, the costs of heeding its call can be steep.

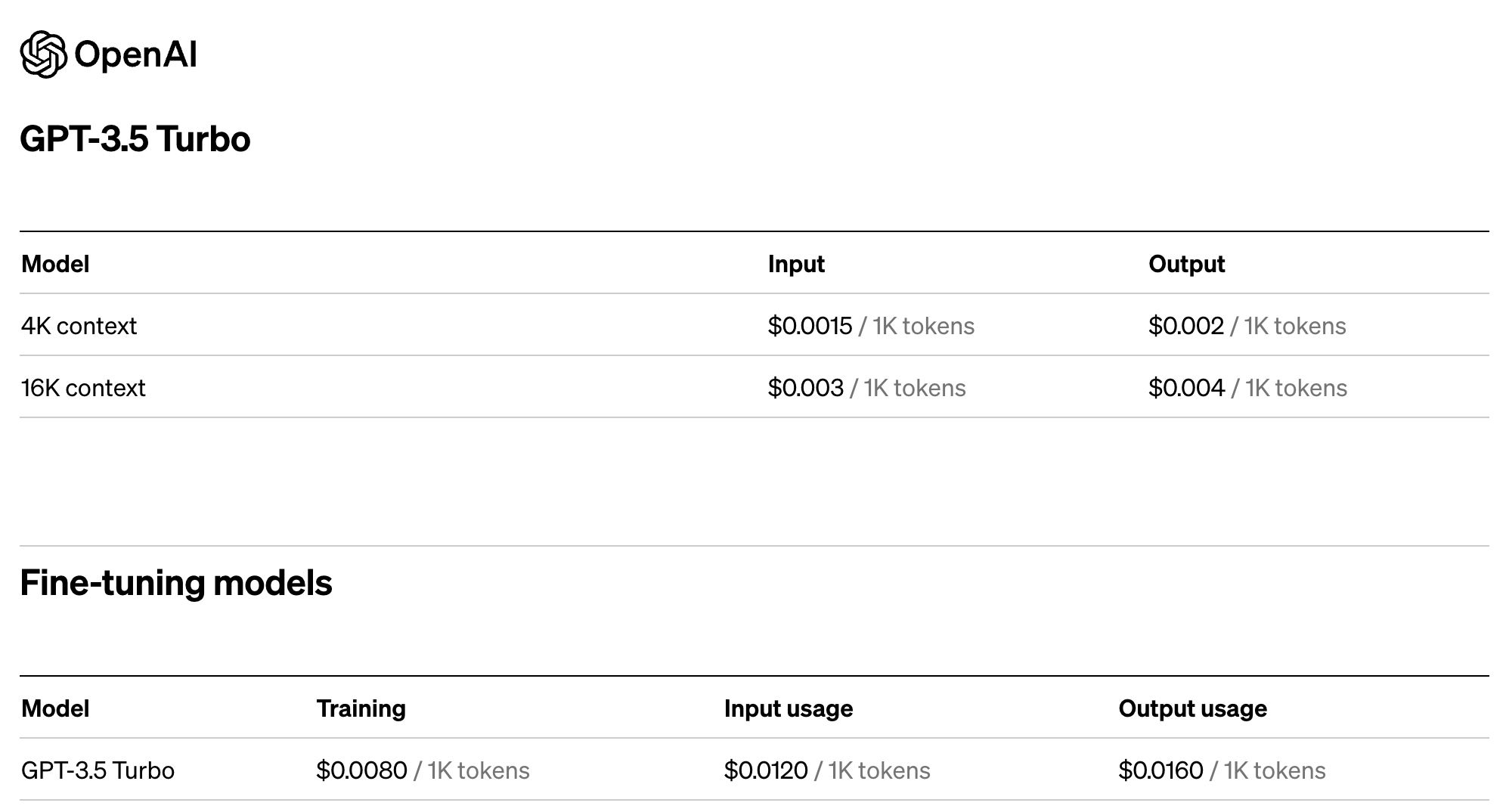

A chat with 1,000 input tokens and 1,000 output tokens on base GPT-3.5 Turbo will gently knock your wallet for $0.0035 ($0.0015 in, $0.0020 out). But, if you're looking at its fine-tuned avatar:

- Training demands $0.0080 for every 1,000 tokens.

- Input costs soar to $0.0120 per 1,000 tokens, 8 times the base model's input rate.

- Outputs? A lofty $0.0160 for each 1,000 tokens.

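Hence, with 1,000 tokens in and 1,000 tokens out, our innocent chat now costs $0.0280, an 8-fold premium, and that excludes the initial training. (Adding all three rates gives $0.0360, roughly 10 times the base price, but that counts the training rate as if it were charged on every chat.)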
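The one-off training bill can be estimated separately. The sketch below assumes training is charged per token per epoch, which is how OpenAI's pricing notes described it at launch; the `training_cost` helper, the 100,000-token file size, and the 3 epochs are illustrative assumptions.

```python
TRAINING_RATE_PER_1K = 0.0080  # USD per 1,000 training tokens, from the list above


def training_cost(tokens_in_file, epochs, rate_per_1k=TRAINING_RATE_PER_1K):
    """One-off training cost in USD, assuming billing per token per epoch."""
    return tokens_in_file / 1000 * epochs * rate_per_1k


# Hypothetical job: a 100,000-token training file run for 3 epochs.
cost = training_cost(tokens_in_file=100_000, epochs=3)
print(f"Estimated training cost: ${cost:.2f}")
```

For small datasets this is a minor line item; the recurring input and output charges are what dominate a busy deployment.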

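Let's extrapolate. Imagine a bustling chatbot, engaging in 10,000 daily chit-chats, each 2,000 tokens long (1,000 in, 1,000 out):

- GPT-3.5's bill stands at a palatable $35 per day, or about $1,050 over a 30-day month.

- The fine-tuned variant? At $0.0120 for input plus $0.0160 for output per chat, that is $280 per day, or a whopping $8,400 or so every month, before any training costs.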
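The projection can be reproduced with a few lines of arithmetic. This is a sketch under stated assumptions: each chat uses 1,000 input and 1,000 output tokens, a month has 30 days, training is left out because it is not a per-chat charge, and the base figure of $0.0035 is split into $0.0015 input and $0.0020 output. The function names are illustrative.

```python
# USD per 1,000 tokens.
BASE_INPUT, BASE_OUTPUT = 0.0015, 0.0020    # assumed split of the $0.0035 base figure
TUNED_INPUT, TUNED_OUTPUT = 0.0120, 0.0160  # fine-tuned rates quoted above


def chat_cost(input_tokens, output_tokens, input_rate, output_rate):
    """Cost in USD of one chat, billed separately for input and output tokens."""
    return input_tokens / 1000 * input_rate + output_tokens / 1000 * output_rate


def daily_cost(chats_per_day, per_chat):
    """Cost in USD of a day's traffic at a fixed per-chat cost."""
    return chats_per_day * per_chat


base_chat = chat_cost(1000, 1000, BASE_INPUT, BASE_OUTPUT)
tuned_chat = chat_cost(1000, 1000, TUNED_INPUT, TUNED_OUTPUT)

for name, per_chat in [("base", base_chat), ("fine-tuned", tuned_chat)]:
    per_day = daily_cost(10_000, per_chat)
    print(f"{name}: ${per_chat:.4f}/chat, ${per_day:,.0f}/day, ${per_day * 30:,.0f}/30 days")
```

Swapping in your own token counts and traffic volume is the quickest way to see whether the premium matters for your workload.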

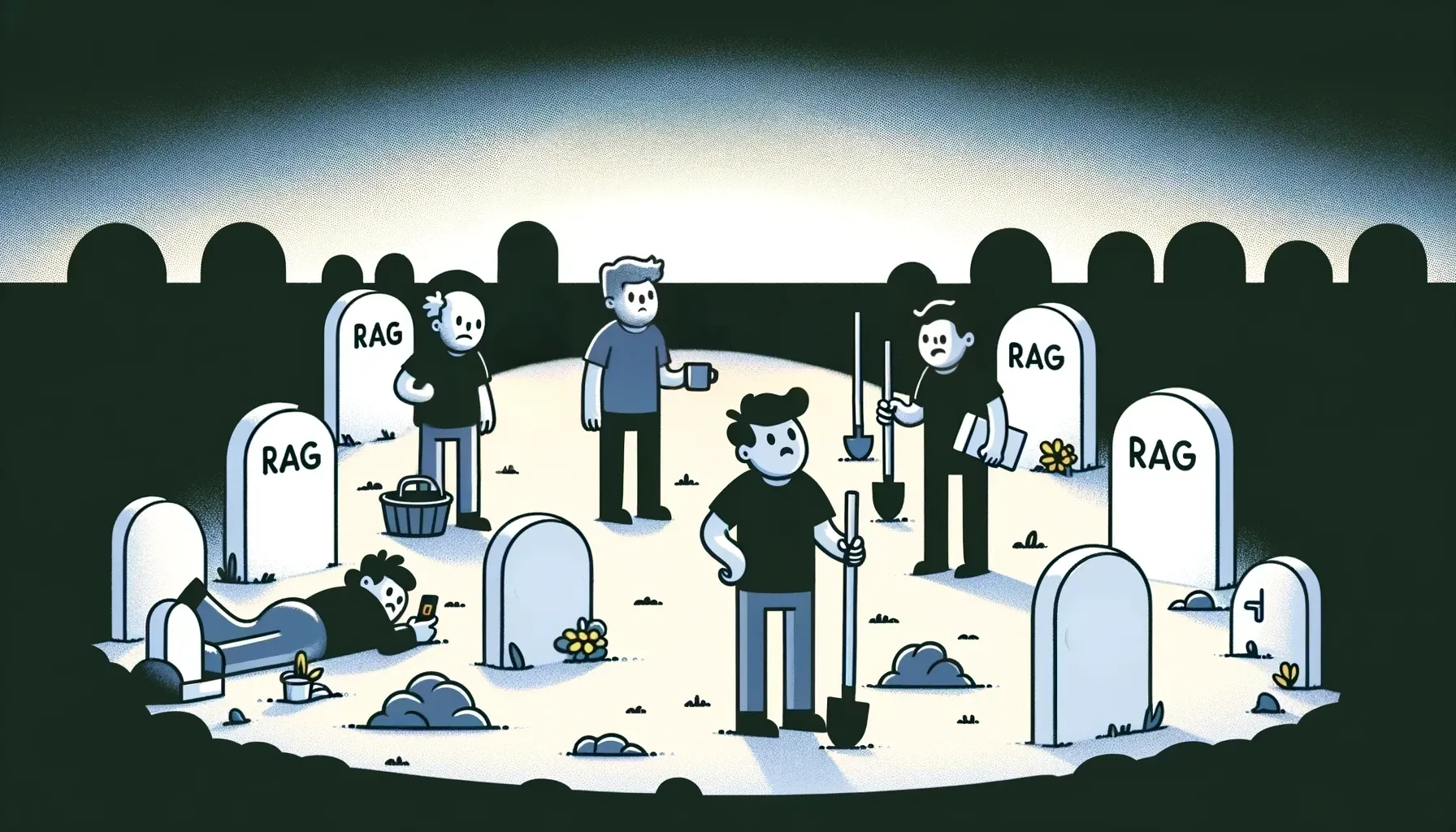

When dissected, the proposition becomes evident: for the lion's share of applications, the gains from fine-tuning GPT-3.5 Turbo hardly justify its fees. If astute prompt engineering can deliver 90% of your performance goals at a fraction of the price, the case for paying for that additional, often imperceptible improvement begins to wane.

Strategizing in the AI Epoch

There's no denying that certain niches, craving pixel-perfect precision, will find the premiums of fine-tuning justifiable. Yet, for the broader spectrum, the costs might be hard to reconcile with the benefits.

| Criteria | Prompt Engineering | Fine-tuning LLMs |
|---|---|---|
| Definition | Adjusting inputs to derive desired outputs. | Modifying the model using specific new data. |
| Strengths | Quick to implement and test; often cost-effective for broad applications; agile, allowing easy modifications. | Deep, specialized customization; can introduce entirely new knowledge; tends to be more precise in niche areas. |
| Weaknesses | Limited to the base model's existing knowledge; may require many iterations for optimal results. | Often higher upfront cost and time; potential for overfitting to specific data. |
| Use Cases | Generalist tasks, rapid prototypes. | Specialist tasks, niche applications. |
| Customization Depth | Surface-level adjustments via prompts. | In-depth, ingrained behavioral shifts. |
| Maintenance | Continuous refinement of prompts. | Less frequent, but may need retraining. |
| Associated Tools | PromptPerfect as an example. | OpenAI's fine-tuning API. |

As we navigate this AI epoch, it's pivotal to weave through the cacophony with a discerning ear. The tantalizing promise of boundless customization should not overshadow the pragmatic efficiencies of prompt engineering. As AI democratizes, the victors will be those who strategically blend prompt engineering’s agility with judicious, nuanced fine-tuning. Beware the mirages; sometimes, the key to AI mastery lies in the subtle symphony of prompts.