It is exciting to witness the growing community of developers building innovative, sophisticated LLM-powered applications with LangChain. This dynamic ecosystem promotes rapid development and opens up a wide array of use cases.

Amidst this, Langchain-serve plays an important role by making LangChain applications readily available and accessible to end-users. It streamlines deployment and delivery for LangChain applications and makes it easy for application developers to reach their target audience.

Features

With Langchain-serve, deploying and distributing LangChain applications becomes a breeze. It has a feature set designed to streamline the deployment process:

- API Management: Define your APIs in Python using the @serving decorator, optionally secured with Bearer tokens. This connects your local LangChain applications to external applications, broadening their reach and functionality.

- LLM-powered Slack Bots: Build, deploy, and distribute Slack bots with the simple @slackbot decorator. This puts your LangChain application where your users are, giving them access to it within their Slack workspace.

- Interactivity and Collaboration: Enable real-time interactions with LLMs via WebSockets, optionally keeping humans in the loop.

- FastAPI Integration: Wrap your LangChain apps with FastAPI endpoints and deploy them with a single command.

Furthermore, Langchain-serve takes care of all your infrastructure headaches, enabling you to focus solely on developing and refining your applications.

- Secure and Scalable: Enjoy secure, serverless, scalable, and highly available applications on Jina AI Cloud.

- Hardware Configuration: Easily configure the hardware resources for your application with a single command.

- Persistent Storage: Use the built-in persistent storage for LLM caching, file storage, and more.

- Observability: Monitor your applications with built-in logs, metrics, and traces.

- Availability: Your app stays responsive and available as traffic grows.

Alternatively, you can self-host your apps. With a single command, you can export your apps as Kubernetes or Docker Compose YAMLs so that you can host your applications on your own infrastructure.

Langchain-serve in action

Before diving into the examples, please make sure you have the langchain-serve package installed. It is available as a PyPI package and you can install it with pip:

pip install langchain-serve

Now, let’s see how to quickly deploy a simple LangChain agent as an HTTP endpoint, and then walk through building and deploying Slack bots. These examples demonstrate Langchain-serve's features in practice and show how straightforward it is to get your LangChain applications up and running.

Wrap and deploy a simple agent as a RESTful API

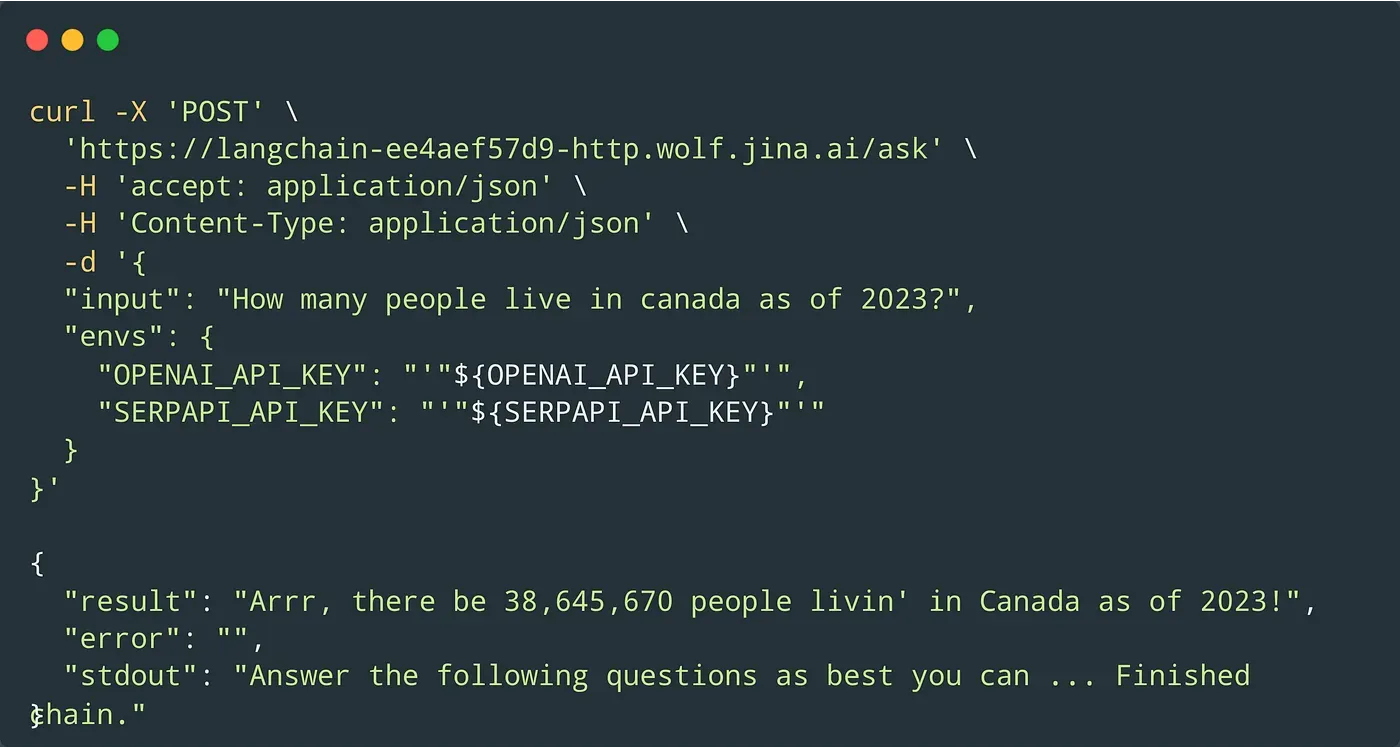

Let’s start with a simple example using ZeroShotAgent with a Search tool to ask a question. We will use the @serving decorator to define a POST /ask endpoint that accepts an input parameter and returns an answer.
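The app.py for this example could look roughly like the sketch below. It follows LangChain's documented ZeroShotAgent-with-Search pattern as it existed around mid-2023 (import paths have since moved in newer LangChain releases) and assumes Google Search through GoogleSearchAPIWrapper, which reads GOOGLE_API_KEY and GOOGLE_CSE_ID, plus OPENAI_API_KEY for the LLM. The fallback definition of serving is not part of langchain-serve; it only lets the file import when the package is missing.

```python
# app.py: sketch of a ZeroShotAgent with a Search tool exposed as POST /ask.
# Written against the mid-2023 LangChain API; newer releases moved these imports.
try:
    from lcserve import serving  # langchain-serve's endpoint decorator
except ImportError:
    # Local stand-in (NOT langchain-serve) so this file imports without the package.
    def serving(func=None, **kwargs):
        if func is None:  # also tolerates @serving(...) with keyword arguments
            return lambda f: f
        return func


@serving
def ask(input: str) -> str:
    # Heavy imports live inside the function so the module itself loads quickly.
    from langchain import LLMChain, OpenAI
    from langchain.agents import AgentExecutor, Tool, ZeroShotAgent
    from langchain.utilities import GoogleSearchAPIWrapper  # assumed search backend

    search = GoogleSearchAPIWrapper()
    tools = [
        Tool(
            name="Search",
            func=search.run,
            description="useful for when you need to answer questions about current events",
        )
    ]
    # create_prompt inserts the tool descriptions between prefix and suffix.
    prompt = ZeroShotAgent.create_prompt(
        tools,
        prefix="Answer the following question as best you can. You have access to the following tools:",
        suffix="Begin!\n\nQuestion: {input}\n{agent_scratchpad}",
        input_variables=["input", "agent_scratchpad"],
    )
    llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)
    agent = ZeroShotAgent(llm_chain=llm_chain, allowed_tools=[t.name for t in tools])
    executor = AgentExecutor.from_agent_and_tools(agent=agent, tools=tools, verbose=True)
    return executor.run(input)
```

Under these assumptions, the matching requirements.txt would list langchain, openai, and google-api-python-client (the client library GoogleSearchAPIWrapper depends on).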

This involves four simple steps:

- Refactor your code into one or more functions in a new file, app.py, and add the @serving decorator. (See above for an example.)

- Create a requirements.txt file with all the dependencies.

- Run lc-serve deploy local app to deploy and use the app locally.

- Run lc-serve deploy jcloud app to deploy and use the app on Jina AI Cloud.
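Once the app is running locally, any HTTP client can call POST /ask. The sketch below builds such a request with Python's standard library. The address (localhost, port 8080) and the JSON body shape, with the question under input and per-request environment variables under envs, reflect our reading of the project README at the time of writing and are assumptions; check the current docs before relying on them.

```python
import json
import urllib.request
from typing import Optional


def build_ask_request(
    question: str,
    base_url: str = "http://localhost:8080",  # assumed local deployment address
    openai_api_key: str = "",
    token: Optional[str] = None,
) -> urllib.request.Request:
    """Build a POST /ask request for a langchain-serve app (payload shape assumed)."""
    body = {"input": question, "envs": {"OPENAI_API_KEY": openai_api_key}}
    headers = {"Content-Type": "application/json", "Accept": "application/json"}
    if token is not None:
        # Only needed when the endpoint is protected with a bearer token.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        f"{base_url}/ask",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask_remote(question: str, **kwargs) -> dict:
    """Send the request and decode the JSON response (requires a running app)."""
    with urllib.request.urlopen(build_ask_request(question, **kwargs)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Splitting request construction from sending keeps the request easy to inspect and test without a running server.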

The @serving decorator also enables real-time streaming and human-in-the-loop integration using WebSockets. Optionally, the endpoints can be protected with bearer tokens for secure interactions. See the project README for more elaborate examples of deploying LangChain APIs on Jina AI Cloud.
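For bearer-token protection, the project README (as we read it) passes an authorizer callable to the decorator as @serving(auth=...) and forwards whatever the authorizer returns to the endpoint as kwargs['auth_response']. The sketch below follows that shape. The hard-coded token and the returned user id are placeholders, and the fallback serving definition is not part of langchain-serve; it only lets the sketch run without the package.

```python
import hmac
from typing import Any

try:
    from lcserve import serving
except ImportError:
    # Local stand-in (NOT langchain-serve) so this sketch imports without the package.
    def serving(func=None, **kwargs):
        if func is None:
            return lambda f: f
        return func

# Placeholder secret; load it from an environment variable or secret store in practice.
EXPECTED_TOKEN = "mysecrettoken"


def authorizer(token: str) -> Any:
    """Validate the bearer token: raise to reject, return a value to accept."""
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(token, EXPECTED_TOKEN):
        raise Exception("Unauthorized")
    return "userid"  # hypothetical user identifier handed on to the endpoint


@serving(auth=authorizer)
def ask(input: str, **kwargs) -> str:
    user = kwargs.get("auth_response")  # authorizer's return value, per the README
    return f"{user} asked: {input}"  # replace with your agent call
```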

Build, deploy, and distribute Slack bots

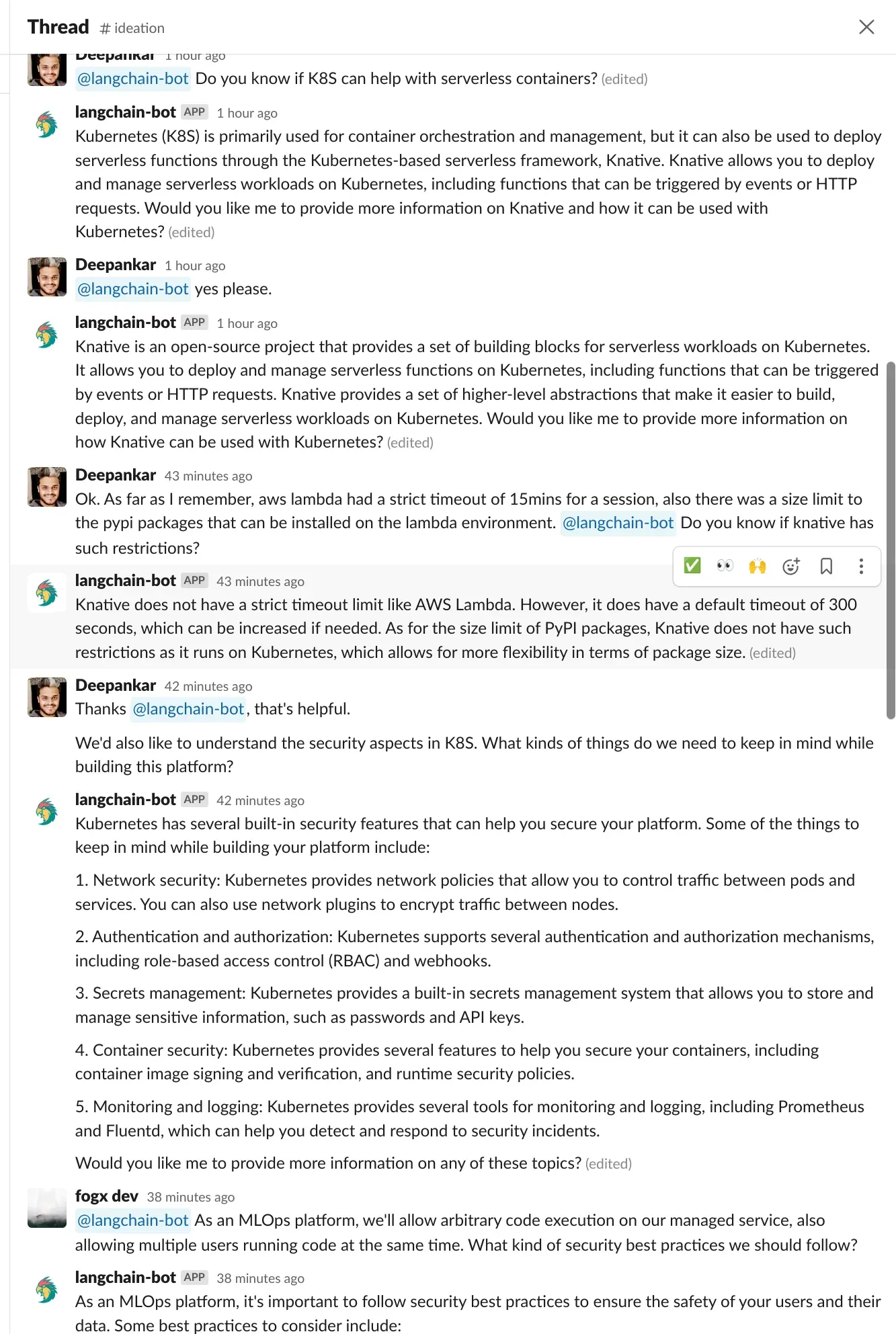

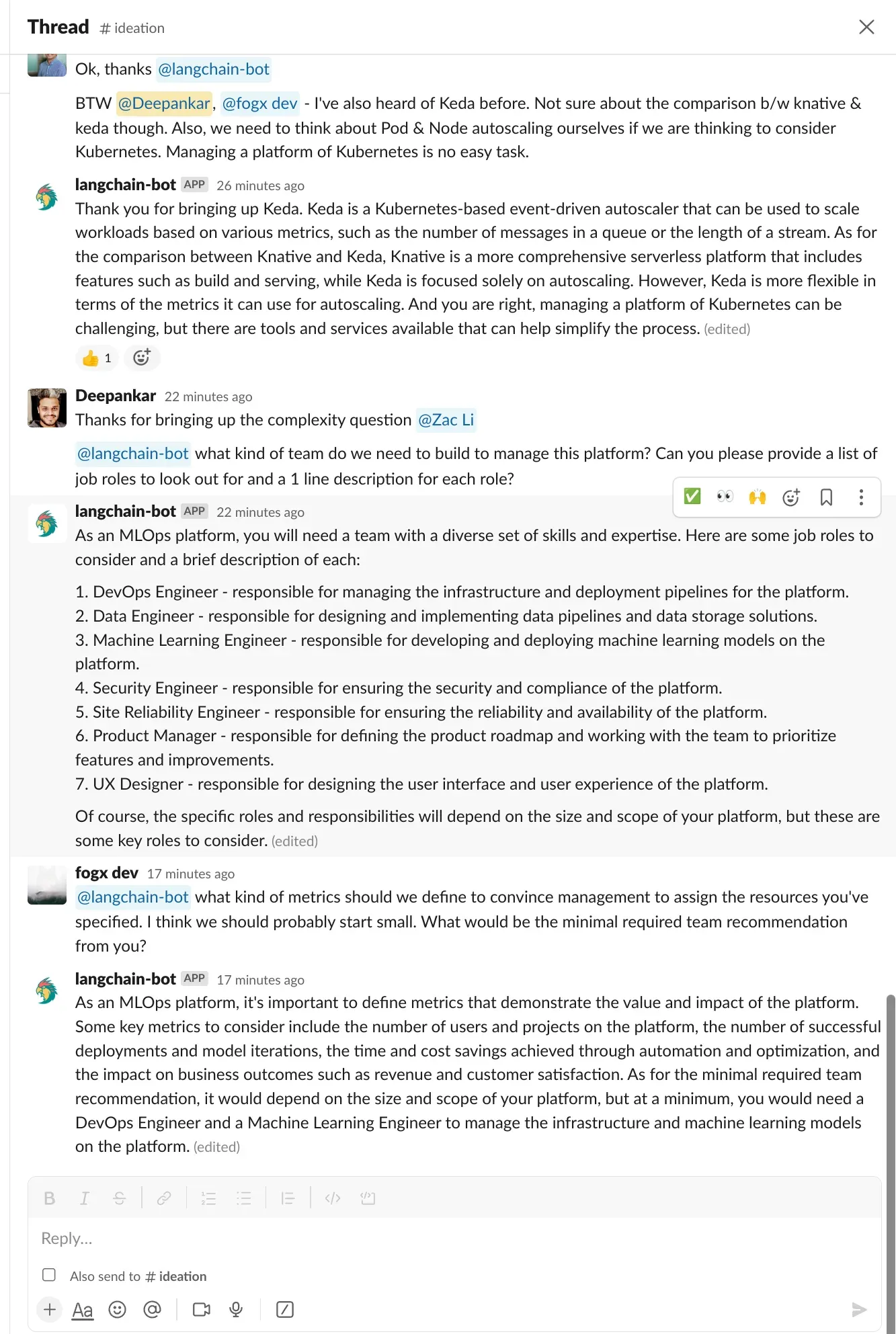

Slack has become an indispensable tool in many modern workplaces, helping teams to communicate and collaborate effectively. With the integration of LLM-powered Slack bots, you can infuse the reasoning power of LLMs into your workspace, providing rich contextual understanding and intelligent responses.

From moderator bots that enrich brainstorming sessions with the knowledge and reasoning capacity of LLMs, to project management bots that provide smart recommendations and summarize key points, these Slack bots have a wide range of uses. By combining LangChain and Langchain-serve, such bots can make digital workspaces more efficient and productive.

Langchain-serve provides a decorator @slackbot to help build and deploy Slack bots on Jina AI Cloud. Here’s a step-by-step guide that takes you through creating and configuring a Slack app and deploying a demo bot integrated with Langchain-serve:

Let’s dig into the Slack bot demo code to understand how it works, so you can adapt it to your own applications. We’ll also look at the @slackbot decorator, since it shapes the bot’s behavior.

@slackbot defines the entry point for the bot. The decorated function is called for two types of Slack events: app mentions (the app_mention event) and direct messages (DMs).
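Conceptually, the bot only needs to act on two kinds of Slack Events API payloads. The sketch below is a hypothetical filter, not langchain-serve's code: it accepts app_mention events and message events whose channel_type is im (direct messages), and skips anything posted by a bot so the bot never answers its own replies.

```python
from typing import Any, Dict


def should_handle(event: Dict[str, Any]) -> bool:
    """Return True for events this kind of Slack bot should answer (hypothetical helper)."""
    if event.get("bot_id"):
        # Ignore bot messages, including the bot's own replies, to avoid loops.
        return False
    if event.get("type") == "app_mention":
        # Someone wrote @botname in a channel the bot belongs to.
        return True
    # Direct messages arrive as "message" events from an "im" channel.
    return event.get("type") == "message" and event.get("channel_type") == "im"
```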

Prompt: We use a predefined prompt template to make the agent act as a Slack bot. You can modify or extend it to fit your application.
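LangChain's PromptTemplate uses Python-style {placeholder} formatting by default, so extending the prompt is mostly string work. The sketch below uses plain str.format with a hypothetical template to show how extra instructions can be layered on a base persona; the template langchain-serve actually ships, and its variable names, may differ.

```python
# Hypothetical Slack-bot prompt; not the template langchain-serve ships.
BASE_PREFIX = (
    "You are a helpful assistant in a Slack workspace. "
    "Keep answers short and use Slack-friendly formatting."
)

TEMPLATE = "{prefix}\n\nConversation so far:\n{history}\n\nUser: {message}\nAssistant:"


def build_prompt(message: str, history: str, extra_instructions: str = "") -> str:
    """Fill the template; extra_instructions extends the base persona."""
    prefix = f"{BASE_PREFIX} {extra_instructions}" if extra_instructions else BASE_PREFIX
    return TEMPLATE.format(prefix=prefix, history=history, message=message)
```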

Memory: Since Slack threads can grow longer than an LLM's token limit, we use LangChain's memory modules to store the conversation history. Langchain-serve's get_memory function generates memory objects from a conversation history, but you can also use custom memory objects, such as LlamaIndex's memory wrappers.
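To see why memory matters, here is a stdlib sketch of the simplest strategy: keep only the most recent messages that fit a token budget. It illustrates the idea behind buffer-style memory such as LangChain's ConversationTokenBufferMemory, which prunes the oldest messages using the model's tokenizer; it is not a description of what get_memory does internally, and word counts stand in for real token counts.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (speaker, text)


def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def trim_history(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep the most recent messages whose combined size fits within max_tokens."""
    kept: List[Message] = []
    used = 0
    for speaker, text in reversed(messages):  # walk from newest to oldest
        cost = approx_tokens(text)
        if used + cost > max_tokens:
            break
        kept.append((speaker, text))
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```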

Tools: Likewise, we provide a predefined Slack tool: a thread parser that extracts the conversation history from a thread URL. This is useful when you share a link to a different thread with the bot.
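A thread parser first has to turn a permalink into identifiers the Slack Web API understands. The hypothetical helper below (not the tool langchain-serve ships) relies on the standard permalink format, https://<workspace>.slack.com/archives/<channel>/p<digits>, where the digits are the message timestamp with its decimal point removed. If the link carries a thread_ts query parameter, that value identifies the thread's parent message and is preferred.

```python
from typing import Tuple
from urllib.parse import parse_qs, urlparse


def parse_thread_url(url: str) -> Tuple[str, str]:
    """Split a Slack message permalink into (channel_id, thread_ts)."""
    parsed = urlparse(url)
    parts = parsed.path.strip("/").split("/")
    if len(parts) != 3 or parts[0] != "archives" or not parts[2].startswith("p"):
        raise ValueError(f"not a Slack message permalink: {url}")
    channel, raw = parts[1], parts[2][1:]
    query = parse_qs(parsed.query)
    if "thread_ts" in query:
        # Link points at a reply; thread_ts names the thread's parent message.
        return channel, query["thread_ts"][0]
    if not raw.isdigit() or len(raw) <= 6:
        raise ValueError(f"unexpected timestamp in permalink: {url}")
    # Slack timestamps carry six digits after the decimal point.
    return channel, f"{raw[:-6]}.{raw[-6:]}"
```

With the channel and timestamp in hand, the Web API method conversations.replies returns the messages in that thread.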

Workspace: Every bot deployed on Jina AI Cloud gets a persistent storage path. This can be used to cache LLM calls to speed up the response time. This can also be used to store other files that the bot needs to access.
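LangChain can cache LLM calls for you (in mid-2023 releases, by assigning langchain.llm_cache = SQLiteCache(database_path=...)), and pointing that database at the workspace path lets the cache survive restarts. The stdlib sketch below shows the same idea without LangChain: a SQLite table keyed by a hash of the prompt, stored under a workspace directory. The function name and file name are hypothetical.

```python
import hashlib
import os
import sqlite3
from typing import Callable


def cached_llm_call(workspace: str, prompt: str, llm: Callable[[str], str]) -> str:
    """Return a cached completion for prompt, calling llm only on a cache miss."""
    os.makedirs(workspace, exist_ok=True)
    key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    conn = sqlite3.connect(os.path.join(workspace, "llm_cache.db"))
    try:
        conn.execute("CREATE TABLE IF NOT EXISTS cache (key TEXT PRIMARY KEY, value TEXT)")
        row = conn.execute("SELECT value FROM cache WHERE key = ?", (key,)).fetchone()
        if row is not None:
            return row[0]  # cache hit: no LLM call
        value = llm(prompt)
        conn.execute("INSERT INTO cache (key, value) VALUES (?, ?)", (key, value))
        conn.commit()
        return value
    finally:
        conn.close()
```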

Reply: The reply function sends a reply back to the user. It takes a single argument, message, which is the text to send.
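Because the bot sends its answer through reply rather than returning it, you can exercise the bot logic locally by passing in any callable. In the sketch below, handle_message and fake_agent are hypothetical placeholders for your own code, and replies are collected in a list instead of being posted to Slack.

```python
from typing import Callable, List


def fake_agent(message: str) -> str:
    """Placeholder for an AgentExecutor.run call."""
    return f"You said: {message}"


def handle_message(message: str, reply: Callable[[str], None]) -> None:
    """Bot body: compute an answer and hand it to reply (nothing is returned)."""
    if not message.strip():
        reply("Please send a question.")
        return
    reply(fake_agent(message))


# Local harness: collect replies in a list instead of posting to Slack.
sent: List[str] = []
handle_message("hello", sent.append)
handle_message("   ", sent.append)
```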

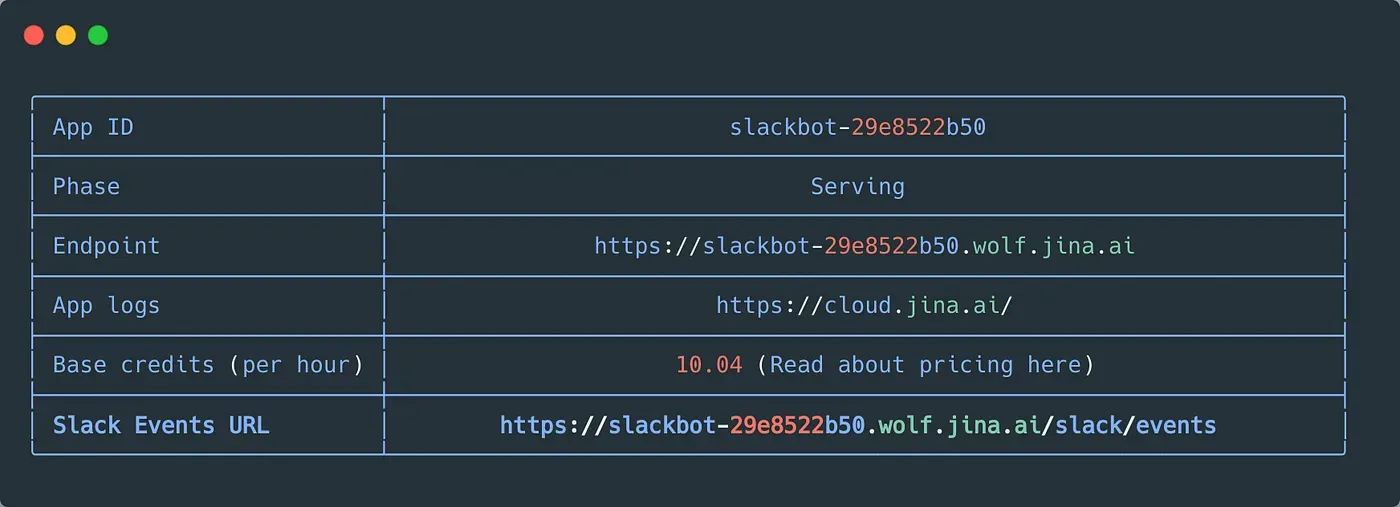

After customizing the bot to fit your needs, you can deploy it on Jina AI Cloud with the following command, where .env contains the Slack bot token, the signing secret, and any other required environment variables:

lc-serve deploy jcloud app --env .env
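Before deploying, it can help to check that .env defines everything the bot needs. The sketch below parses simple KEY=VALUE lines and reports missing or empty keys. The names SLACK_BOT_TOKEN, SLACK_SIGNING_SECRET, and OPENAI_API_KEY are our assumption of a typical setup; check the langchain-serve Slack guide for the exact names. Quoted and multi-line values are not handled.

```python
from typing import Dict, Iterable, List

# Assumed variable names; adjust to what your bot actually reads.
REQUIRED = ("SLACK_BOT_TOKEN", "SLACK_SIGNING_SECRET", "OPENAI_API_KEY")


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blanks and # comments (no quoting support)."""
    env: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split once so values may contain "="
        env[key.strip()] = value.strip()
    return env


def missing_keys(env: Dict[str, str], required: Iterable[str] = REQUIRED) -> List[str]:
    """List required keys that are absent or empty."""
    return [k for k in required if not env.get(k)]
```

Usage: with open(".env") as f: print(missing_keys(parse_env(f.read()))) prints an empty list when nothing is missing.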

Once the bot is integrated and tested in your workspace, it’s ready to be rolled out to the workspaces of your end users. For further information on how to distribute your bot globally, you can refer to this guide.

Looking ahead

We’ve seen Langchain-serve in action, turning a simple agent into a RESTful API and LLM-powered apps into interactive Slack bots. As we continue to evolve, our next milestone is bringing the intelligence of LangChain to Discord with discordbot.

We’re eager to see the Slack bots and APIs you’ll ship to your users, bridging the gap between local demos and practical, user-friendly applications that harness the full potential of LLMs in the real world.

Learn more about Langchain-serve

For more information about Langchain-serve, visit the project page at GitHub, and visit the Jina AI website for more about the Jina ecosystem.

Get in touch

Talk to us and keep the conversation going by joining the Jina AI Discord!