Slack is an essential tool for business that has transformed the way teams communicate and collaborate. Introducing LangChain-powered chatbots adds a layer of intelligence to your Slack workspace. These bots don’t just passively respond to commands. They’re like virtual colleagues, capable of understanding context and providing useful feedback.

LangChain-powered chatbots are more than just message handlers. They act like interactive consultants, fielding questions about your company’s internal data and putting a wealth of information at your fingertips. The result? A workspace that’s not just connected, but also informed, fostering a more engaged and knowledgeable team.

As a starting point, you can download a demo chatbot from Langchain-serve's repository. Follow this step-by-step guide to create an app in your workspace and deploy it to Jina AI Cloud.

    pip install langchain-serve
    echo 'SLACK_SIGNING_SECRET=<your-signing-secret>' >> .env
    echo 'SLACK_BOT_TOKEN=<your-bot-token>' >> .env
    echo 'OPENAI_API_TOKEN=<your-openai-api-token>' >> .env
    lc-serve deploy slackbot-demo --env .env

In a previous article, we discussed how you can use the @slackbot decorator to customize and add functionality to your Slack chatbot. We also showed a lengthy example interaction where the chatbot participates directly in a brainstorming session.
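Before deploying, it can help to check that the `.env` file built by the commands above has all three values filled in. The snippet below is a minimal sketch of such a check, not part of Langchain-serve; the function names `parse_env` and `missing_keys` are made up for illustration, and the key names are simply the ones used in this article.

```python
# Hypothetical pre-deploy check; not part of langchain-serve.
REQUIRED_KEYS = ("SLACK_SIGNING_SECRET", "SLACK_BOT_TOKEN", "OPENAI_API_TOKEN")


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first "=" only, so values may contain "=".
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_keys(text: str, required=REQUIRED_KEYS) -> list:
    """Return required keys that are absent, empty, or still a <placeholder>."""
    values = parse_env(text)
    missing = []
    for key in required:
        value = values.get(key, "")
        is_placeholder = value.startswith("<") and value.endswith(">")
        if not value or is_placeholder:
            missing.append(key)
    return missing
```

Calling `missing_keys(open(".env").read())` returns an empty list once every value has been replaced with a real secret.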

Building an HR Slackbot

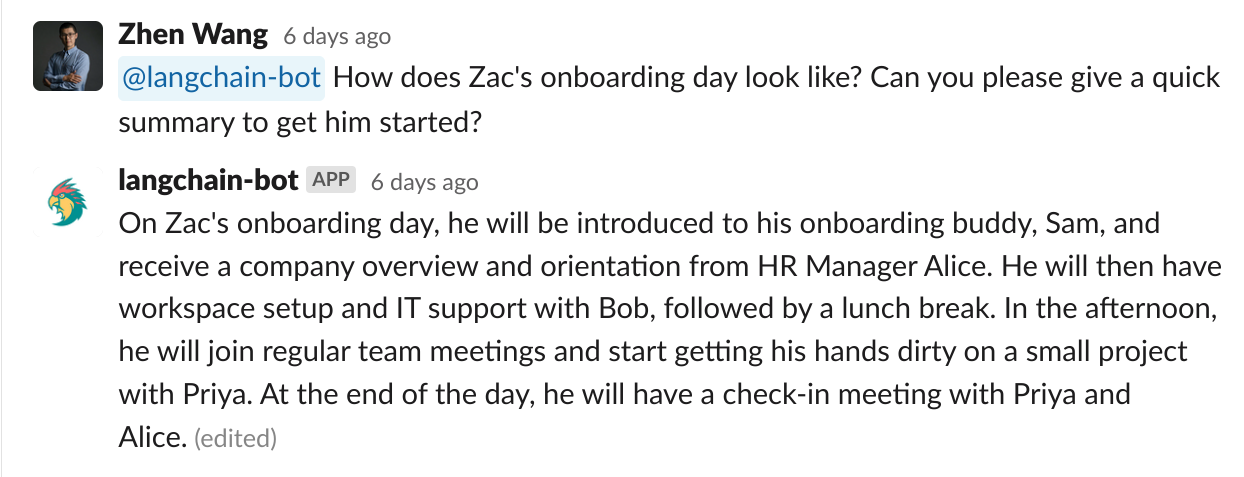

Let’s consider a hypothetical company: “FutureSight AI” (a name courtesy of ChatGPT). FutureSight has typical HR responsibilities, including onboarding new employees. The company is also deploying a chatbot for its HR department that we’ll call langchain-bot.

We'd like this chatbot to be able to answer basic questions like this, but also to handle other, more menial HR tasks, like answering questions about company policies and taking notes on Slack discussions.

Now, we're going to show you how.

We’ll focus on constructing a Slack bot specifically designed to automate and streamline HR tasks and interactions. This chatbot, which draws on HR department PDF documents stored on Google Drive, can be an essential tool for both employees and the HR team, promoting efficiency and engagement in the workspace.

We will equip this bot with access to company policies, onboarding procedures, and related information. HR documents, stored as PDFs on the company’s Google Drive, serve as the bot’s knowledge source.

Here’s what we’d like our bot to accomplish:

Imagine an employee tagging the bot in a channel to ask a question. The chatbot should be competent enough to respond directly in the thread. For example, an employee asks:

FutureSight's langchain-bot should then search through the internal documents and directly answer in Slack.

Additionally, we’d like our bot to retain the memory of the conversation as context for follow-up interactions.
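One way to picture this kind of memory is a store keyed by Slack thread, so that each follow-up sees the earlier turns of its own thread and nothing else. The class below is a simplified sketch in plain Python; `ThreadMemory` and its methods are hypothetical names, and Langchain-serve's own handling of history may differ. It assumes a thread is identified by its channel ID plus the thread timestamp.

```python
from dataclasses import dataclass, field


@dataclass
class ThreadMemory:
    """Hypothetical per-thread store; keeps only the most recent turns."""
    max_turns: int = 20
    _threads: dict = field(default_factory=dict)

    def add(self, channel: str, thread_ts: str, role: str, text: str) -> None:
        turns = self._threads.setdefault((channel, thread_ts), [])
        turns.append((role, text))
        # Drop the oldest turns once the thread exceeds the cap.
        overflow = len(turns) - self.max_turns
        if overflow > 0:
            del turns[:overflow]

    def as_context(self, channel: str, thread_ts: str) -> str:
        """Render the stored turns as text that can be placed in a prompt."""
        turns = self._threads.get((channel, thread_ts), [])
        return "\n".join(f"{role}: {text}" for role, text in turns)


memory = ThreadMemory(max_turns=4)
memory.add("C123", "1690000000.000100", "Human", "How many vacation days do I get?")
memory.add("C123", "1690000000.000100", "AI", "Please see the leave policy.")
print(memory.as_context("C123", "1690000000.000100"))
```

Capping the number of turns is a design choice made here to keep prompts short; a real bot might instead summarize older turns.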

Let’s get to building

Before starting to code, we need to create a Slack app and configure it. You can follow this readme to get started.

Langchain-serve provides an easy-to-use decorator, @slackbot, that lets a function respond to commands and reply when tagged. You can read more about it here.
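To make the idea of a decorator that routes commands more concrete, here is a toy version in plain Python. It is not the Langchain-serve implementation: `toy_slackbot` is a hypothetical name, the handlers are stand-ins, and the only thing borrowed from this article's example is the shape of the `commands` mapping.

```python
import functools
from typing import Callable, Dict


def toy_slackbot(commands: Dict[str, Callable[[], str]]):
    """Hypothetical stand-in: send slash commands to handlers, all else to the bot."""
    def decorator(func):
        @functools.wraps(func)
        def dispatch(text: str, **kwargs) -> str:
            # The first word decides whether this is a registered slash command.
            first_word = text.split(maxsplit=1)[0] if text.strip() else ""
            if first_word in commands:
                return commands[first_word]()
            return func(text, **kwargs)
        return dispatch
    return decorator


@toy_slackbot(
    commands={
        "/refresh-gdrive-index": lambda: "index refreshed",
        "/remove-gdrive-index": lambda: "index removed",
    }
)
def hrbot(message: str, **kwargs) -> str:
    # A real bot would call an LLM agent here.
    return f"answering: {message}"
```

With this in place, `hrbot("/refresh-gdrive-index")` runs the command handler, while any other text falls through to the decorated function.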

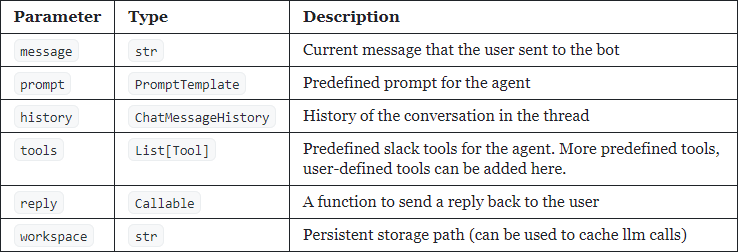

The @slackbot decorator takes the following arguments:

Slash commands

We also need to define a couple of key commands for our bot.

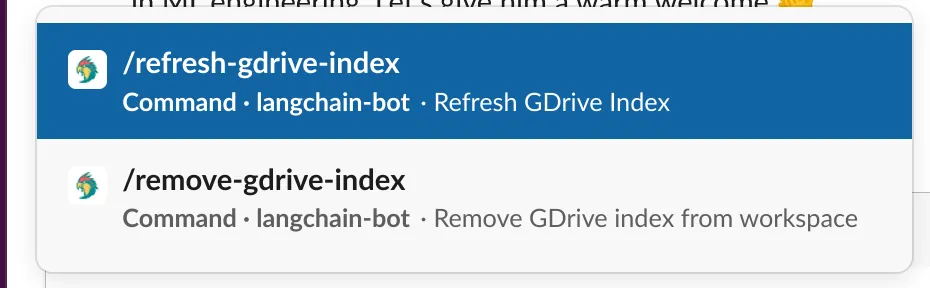

- /refresh-gdrive-index indexes all PDF files from Google Drive and saves them in the current workspace as LangChain Tool instances. (See the code on GitHub)

- /remove-gdrive-index erases all existing indexes from the workspace. (See the code on GitHub)
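The refresh and remove commands can be thought of as writing and deleting one index entry per document inside the workspace directory. The sketch below illustrates that lifecycle with plain JSON files. It is an assumption-laden stand-in, not the code from the example repo: `refresh_index` and `remove_index` are hypothetical names, the `index` subdirectory is invented, and the documents are passed in as already-extracted text rather than fetched from Google Drive.

```python
import json
import tempfile
from pathlib import Path


def refresh_index(workspace: Path, documents: dict) -> list:
    """Write one JSON entry per document; return the indexed names, sorted."""
    index_dir = workspace / "index"  # hypothetical layout
    index_dir.mkdir(parents=True, exist_ok=True)
    for name, text in documents.items():
        entry = {
            "name": name,
            "description": f"Answers questions about {name}",
            "text": text,
        }
        (index_dir / f"{name}.json").write_text(json.dumps(entry))
    return sorted(path.stem for path in index_dir.glob("*.json"))


def remove_index(workspace: Path) -> int:
    """Delete every index entry; return how many were removed."""
    index_dir = workspace / "index"
    if not index_dir.exists():
        return 0
    removed = 0
    for path in index_dir.glob("*.json"):
        path.unlink()
        removed += 1
    return removed


with tempfile.TemporaryDirectory() as tmp:
    workspace = Path(tmp)
    print(refresh_index(workspace, {"leave-policy.pdf": "Leave policy text"}))
    print(remove_index(workspace))
```

Keeping the index on disk in the workspace means a later request can reload it without contacting Google Drive again, which matches the role the workspace plays in the bot code in this article.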

These functions are made available to the Slack chatbot by using the @slackbot decorator:

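    # LangChain import paths below assume the LangChain release the example was written against.
    from typing import Callable

    from langchain.agents import AgentExecutor, ConversationalAgent
    from langchain.chains import LLMChain
    from langchain.llms import OpenAI
    from langchain.memory import ChatMessageHistory
    from lcserve import slackbot

    # refresh_gdrive_index, remove_gdrive_index, update_cache, load_tools_from_disk,
    # get_hrbot_prefix, get_memory and SlackBot are defined in or imported by the
    # example repo; their imports are omitted here.

    @slackbot(
        commands={
            '/refresh-gdrive-index': refresh_gdrive_index,
            '/remove-gdrive-index': remove_gdrive_index,
        }
    )
    def hrbot(
        message: str,
        history: ChatMessageHistory,
        reply: Callable,
        workspace: str,
        **kwargs,
    ):
        llm = OpenAI(temperature=0, verbose=True)
        update_cache(workspace)

        # Load the tools that were saved to the workspace during index refresh.
        tools = load_tools_from_disk(llm=llm, path=workspace)

        # Build the agent prompt from the HR-specific prefix and the Slack suffix.
        prompt = ConversationalAgent.create_prompt(
            tools=tools,
            prefix=get_hrbot_prefix(),
            suffix=SlackBot.get_agent_prompt_suffix(),
        )

        # Wrap the thread history in a memory object.
        memory = get_memory(history)

        agent = ConversationalAgent(
            llm_chain=LLMChain(llm=llm, prompt=prompt),
            allowed_tools=[tool.name for tool in tools],
        )
        agent_executor = AgentExecutor.from_agent_and_tools(
            agent=agent,
            tools=tools,
            memory=memory,
            verbose=True,
            max_iterations=4,
            handle_parsing_errors=True,
        )

        # Send the agent's answer back to the same Slack thread.
        reply(agent_executor.run(message))

And this makes them available in Slack: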

Respond to tags

Enabling “respond to tags” is simple, thanks to all the tooling LangChain and Langchain-serve already provide.

- Load tools (added during index refresh) from disk.

- Define a prompt template for the bot.

- Define a memory object to track conversation history.

- Define an AgentExecutor from ConversationalAgent, tools & memory.

- Call reply on agent_executor.run(message) to send the response to the same Slack thread.
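The prompt and memory steps above boil down to combining a fixed prefix, the tool descriptions, the conversation so far, and the new message into one piece of text for the model. The function below is a deliberately simplified sketch of that assembly in plain Python. It is not LangChain's actual template; `ToolInfo`, `build_prompt`, and the section labels are all made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ToolInfo:
    """Hypothetical stand-in for a tool: just a name and a description."""
    name: str
    description: str


def build_prompt(
    prefix: str,
    tools: List[ToolInfo],
    history: List[Tuple[str, str]],
    message: str,
    suffix: str,
) -> str:
    """Join prefix, tool list, prior turns, new input, and suffix into one prompt."""
    tool_lines = "\n".join(f"> {tool.name}: {tool.description}" for tool in tools)
    history_lines = "\n".join(f"{role}: {text}" for role, text in history)
    sections = [
        prefix,
        "Tools:\n" + tool_lines,
        "Previous conversation:\n" + history_lines,
        f"New input: {message}",
        suffix,
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    prefix="You are an HR assistant.",
    tools=[ToolInfo("leave-policy", "Answers questions about leave")],
    history=[("Human", "Hi"), ("AI", "Hello, how can I help?")],
    message="How many vacation days do I get?",
    suffix="Begin!",
)
print(prompt)
```

Because the tool descriptions are part of the prompt, adding a document during an index refresh changes what the model is told it can look up.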

The app can then be deployed with the command:

    lc-serve deploy jcloud app --env .env

Make sure to follow the example repo to understand the setup, directory structure, etc., and modify the command above as needed to point to the right files and directories.

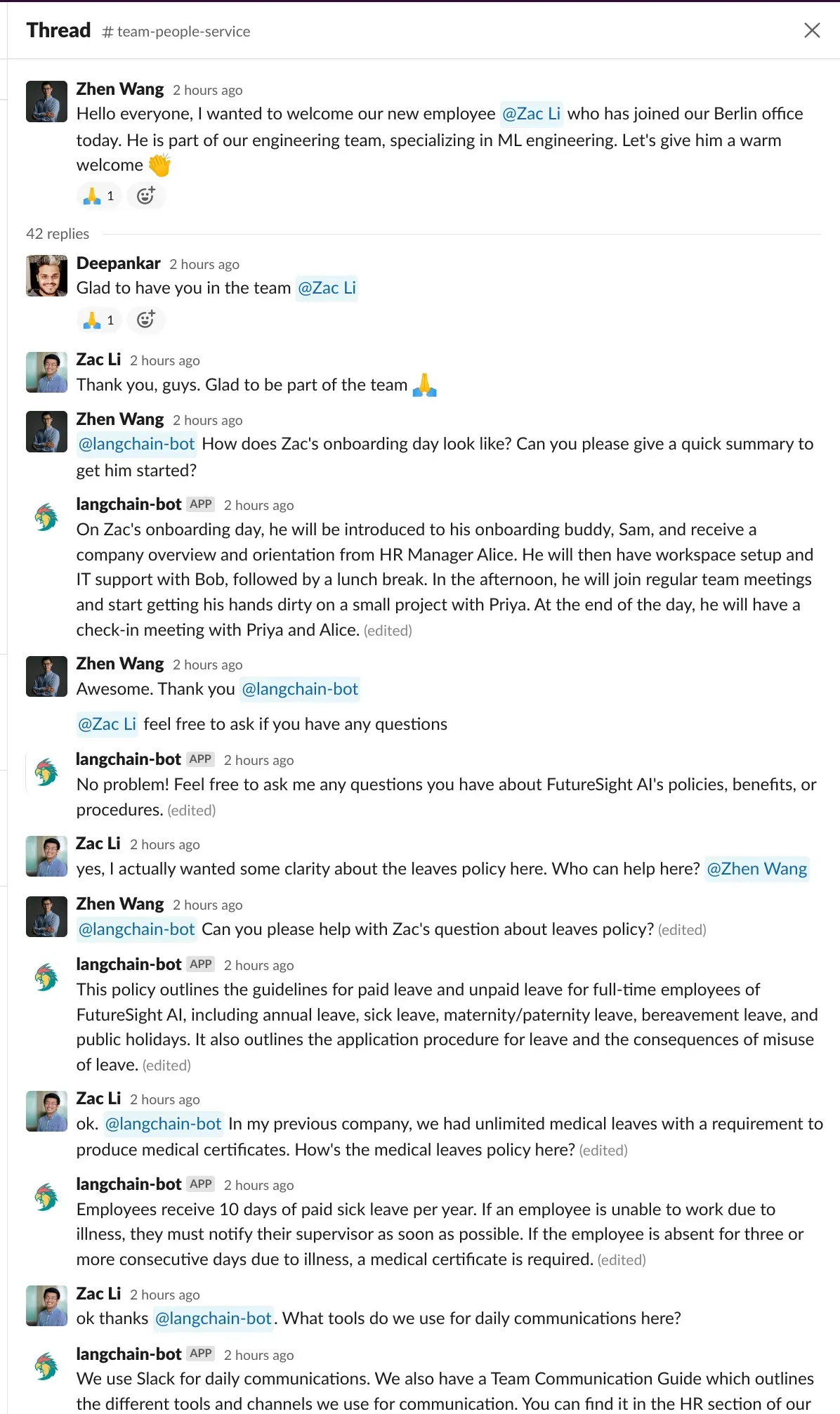

Usage

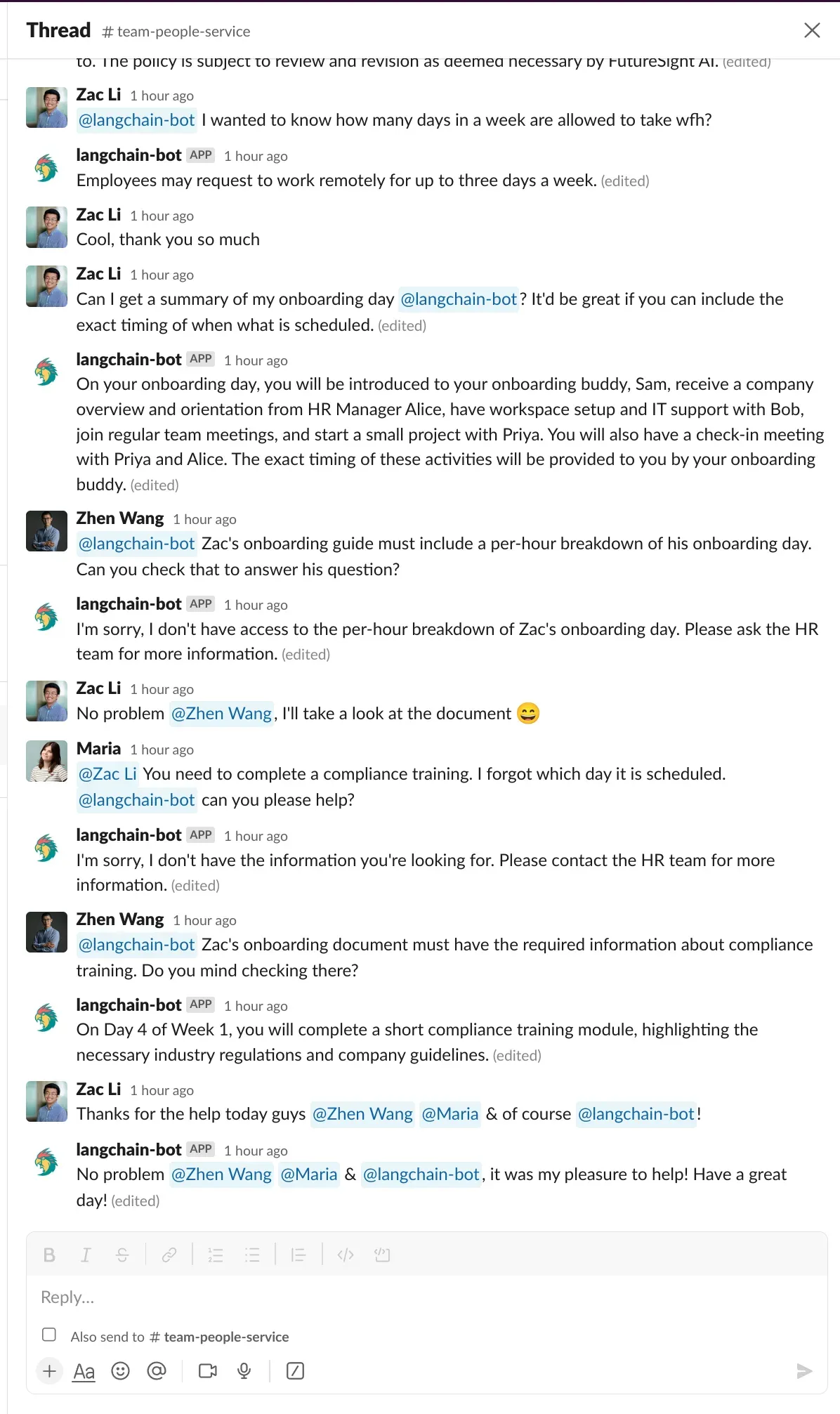

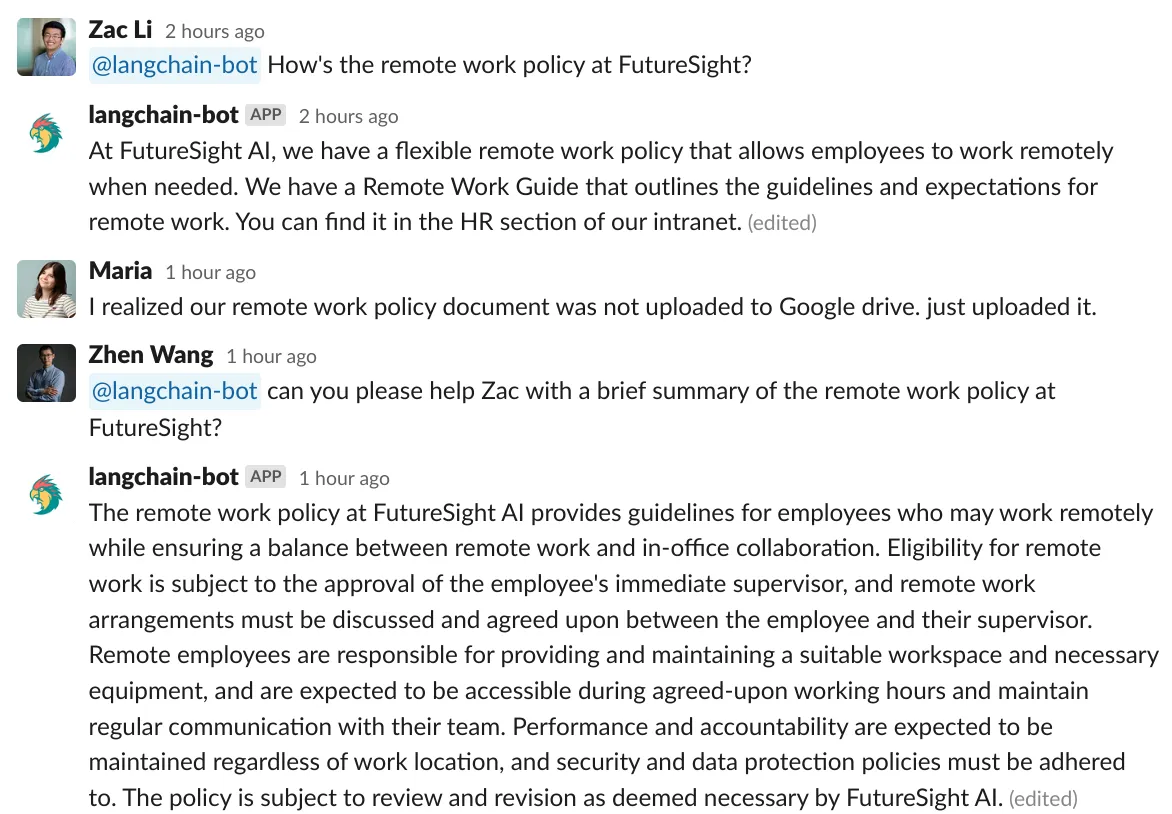

Now, let’s dive into some examples to illustrate how useful this bot can be, especially to a new employee or someone who recently transferred to a new workgroup. The screenshots below show how the bot leverages the company’s HR policies — uploaded as PDFs on Google Drive — to provide useful information.

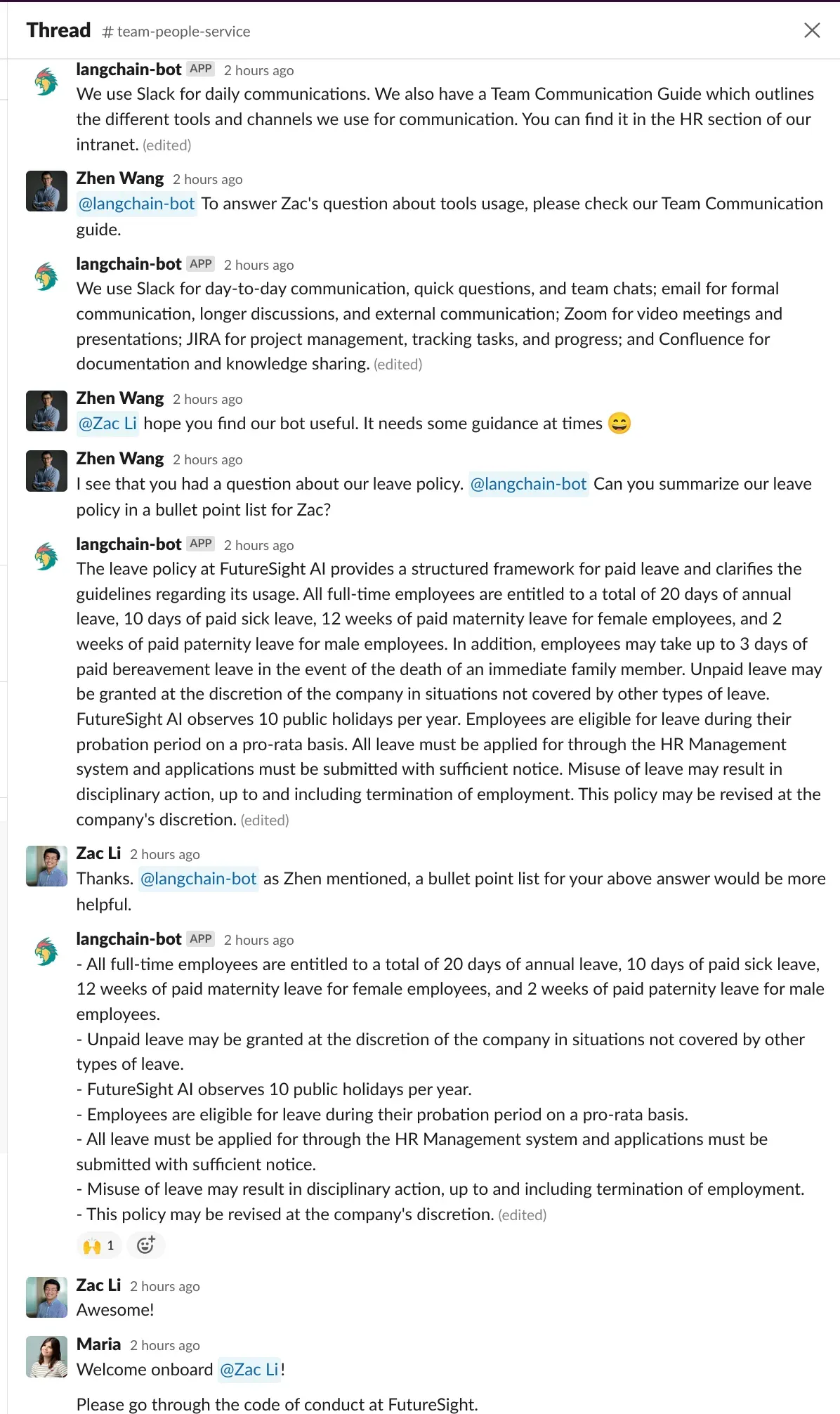

You’ll see how, in instances where the bot doesn’t hit the mark with its responses, an HR team member gently guides it to refer to a specific document, enabling the bot to provide a more accurate response. Because the bot keeps the thread as context, this kind of human guidance carries over to its later answers in the same conversation.

In the same exchange, a question from the new employee led the HR team to realize that an important document hadn’t been uploaded:

After rectifying the oversight by uploading the document and using the /refresh-gdrive-index slash command to update the bot’s knowledge, the bot was asked to address the query again. And voila! It successfully provided the information. This shows not only the bot’s utility but also its role in identifying gaps in the information available, thus helping keep the system updated and efficient.

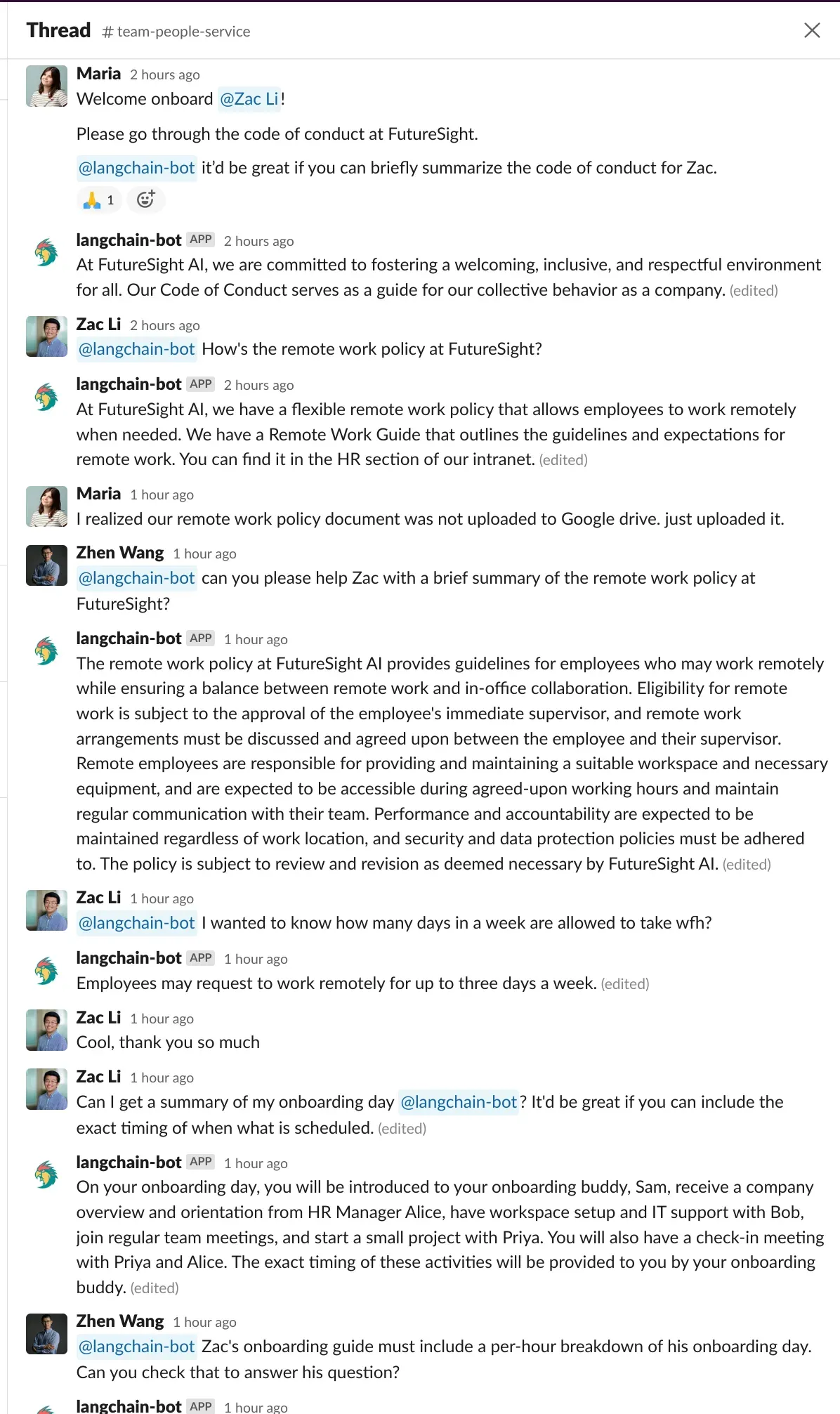

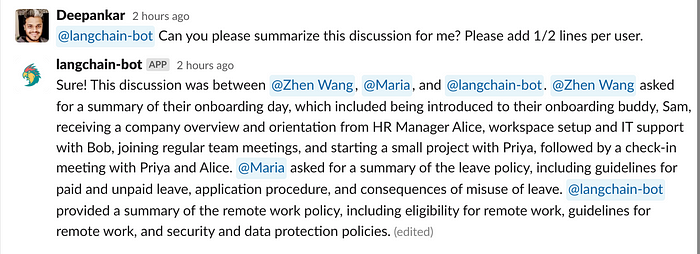

To further demonstrate the bot’s ability to track and recall long threads, we’ve included a screenshot below. It showcases an instance where a user requested the bot to summarize a lengthy conversation. Impressively, the bot was up to the task and delivered a succinct summary, affirming its proficiency in managing complex interactions.

Exploring further possibilities

We've shown that LangChain-based chatbots have an immense range of applications, from a bot that assists in project management by deciphering the subtleties of your team’s work and proposing efficiency-enhancing insights, to a bot that neatly captures and summarizes the key points and action items from meetings.

Learn more

Langchain-serve is designed to keep LangChain-powered applications accessible to end-users. It lets developers build advanced Slack bots and REST/WebSocket APIs that offer high availability, scalability, and observability. All of this, in tandem with the Jina AI Cloud platform, provides a robust ecosystem for creating sophisticated, real-time applications that cater to diverse needs and use cases.

To learn more about Langchain-serve, check out the GitHub repository and read this article from Jina AI.

Get in touch

Talk to us and keep the conversation going by joining the Jina AI Discord!