"So, who will be your biggest competitor?"

The tale of Christmas past

A few days before Christmas 2019, I was sitting in a cramped boardroom, surrounded by the investment committee of our seed VC firm. We'd been there for hours, going back and forth. This was the final, nerve-wracking round of pitching to secure the much-needed $2 million in pre-seed funding for my neural search initiative: the birth of Jina AI was on the line. It was a do-or-die moment.

One of the partners, who had worked at Google NY since 2005, asked me a question I'll never forget:

"Who will be your biggest competitor?"

"Google, Elastic, Algolia, ..." I answered confidently, following the notes I'd prepped earlier. Then I gritted my teeth, waiting for them to ask the boring, clichéd questions like "how do you compete with Google?". Before they could respond, I added "...but more serious competition may come from a technology that doesn't even need embeddings as an intermediate representation – an end-to-end technology that directly returns the result you want."

They didn't get it. They stuck to the same tired script. Banging on about competing with Google.

But times (and minds) have changed, and now they understand the technology I was talking about.

That technology is generative AI.

And neural search is discriminative AI.

Back then, 15 months after Google released BERT, generative AI simply wasn't the answer for scalable, high-quality search. Neural search, a flexible framework that could easily operate on dense embedding representations and combine multiple subtasks, was the only realistic way to search multimodal data.

The paradigms they are a-changin': The rise of generative AI

Since 2021, we've seen a huge paradigm shift in the industry from single-modal AI to multimodal AI.

The rise of multimodal AI can be put down to advances in two machine learning techniques over the last few years: representation learning and transfer learning.

- Representation learning lets models create common representations for all modalities.

- Transfer learning lets models first learn fundamental knowledge, then fine-tune on specific domains.

In 2021 we saw CLIP, a model that captures the alignment between image and text; the following year, DALL·E 2 and Imagen generated high-quality images from text prompts. Generative art, led by Stable Diffusion, started as a community carnival and has now evolved into an industrial revolution. This is just the tip of a very big iceberg. In future, we'll see more AI applications move beyond one data modality and leverage relationships between different modalities. Ad-hoc approaches are going the way of the dinosaurs as boundaries between data modalities become fuzzy and meaningless.

But before we start to imagine fancy high-level AI applications, there are two fundamental problems we must solve first: search and creation.

Or should I say, search or creation?

The duality of search and creation

Search and creation are two sides of a coin, a duality.

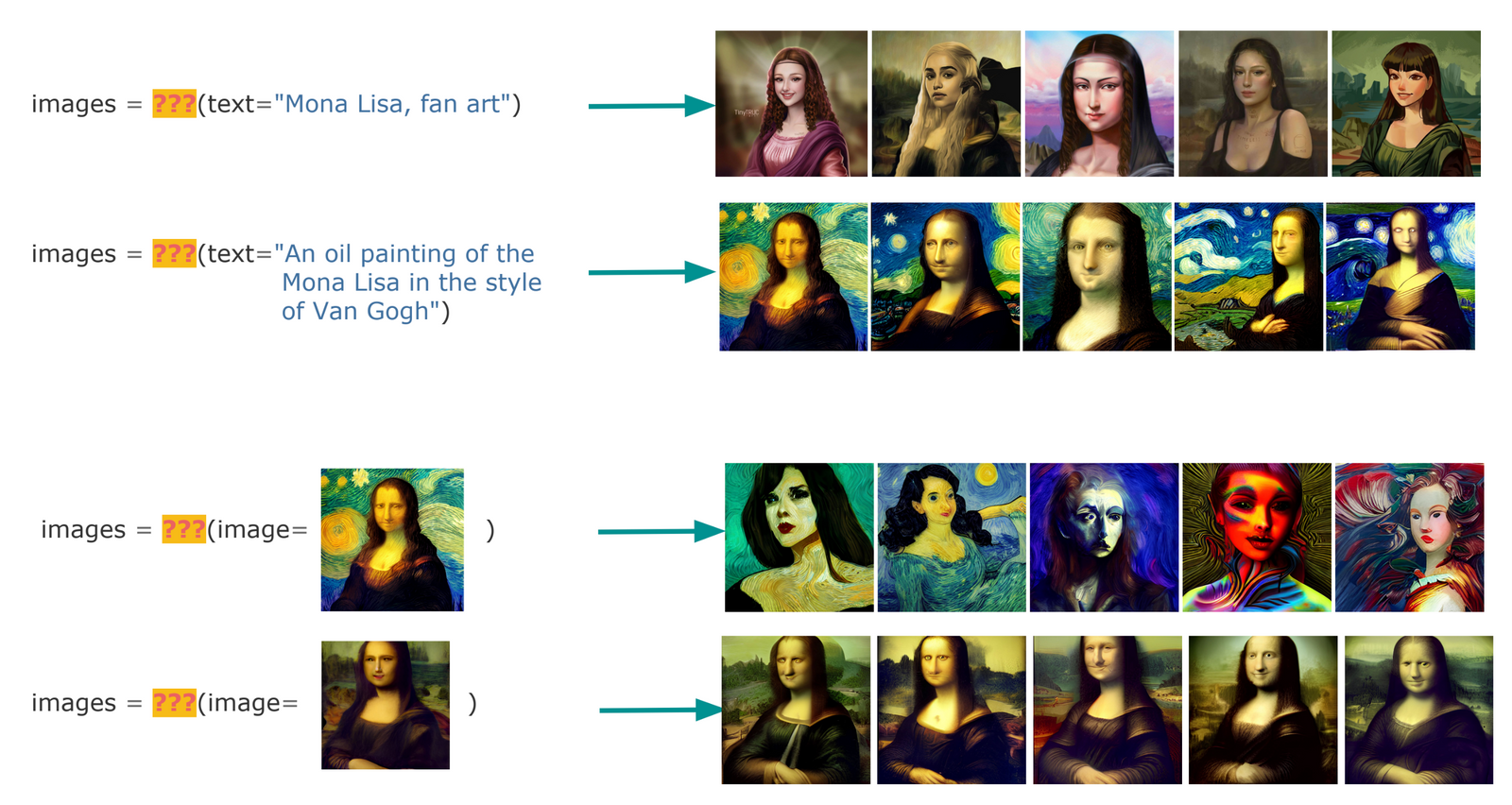

To understand this, let's take text-to-image and image-to-image as examples and look at two functions:

    def foo(query: str) -> List[Image]:
        ...

    def bar(query: Image) -> List[Image]:
        ...

So, what are foo and bar? Are they search, where foo represents text-to-image search (CBIR) and bar represents reverse image search? Or are they creation, where foo represents prompt-to-image generation and bar represents inpainting?
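One way to see why the signature alone can't settle the question is to write both readings down. The sketch below is a toy under loud assumptions: Image is just a string label standing in for real pixels, DATABASE is a made-up collection, and foo_search and foo_create are hypothetical names for illustration, not functions from any real library.

```python
from typing import Callable, List

# Assumption: a string label stands in for a real image. Purely illustrative.
Image = str

# Hypothetical indexed collection that the search reading looks things up in.
DATABASE: List[Image] = ["red shoe photo", "blue shoe photo", "red hat photo"]


def foo_search(query: str) -> List[Image]:
    """Search reading: return stored items that share a word with the query."""
    words = set(query.lower().split())
    return [img for img in DATABASE if words & set(img.split())]


def foo_create(query: str) -> List[Image]:
    """Creation reading: synthesize new items from the query, no lookup at all."""
    styles = ["photo", "sketch", "painting"]
    return [f"{query.lower()} {style}" for style in styles]


def show_results(foo: Callable[[str], List[Image]], query: str) -> List[Image]:
    # The caller depends only on the shared signature, not on how results are made.
    return foo(query)
```

Both functions satisfy the same str -> List[Image] contract, so a caller that only sees the returned list can't tell which one ran. In this toy, foo_create("red shoe") even returns one item that also exists in DATABASE.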

Can you even tell the difference below? Which are the searched results and which are the created results?

Or does it even matter? Search is finding what you need; creation is making what you need. If a system returns the result you need, you happily take it. Does it even matter whether it came from search or creation?

"Oh, but it does matter", you might argue. "Because I don't want to see fictional product images in my e-commerce search results."

Sometimes people do indeed care about the fidelity to the database. That's easy; let's overfit a generative AI model. We'll make the model remember everything it observed from the training data, losing all generality and its ability to improvise. It will only return the training data. There you go, a search system.

A generative AI/creative AI model takes an ax to this oppressive restriction. Let the model improvise, embrace randomness, and then the vibe overpowers the fidelity. A creative AI is an underfitted search system.

Ask for the moon – the future retrieval system may see no difference between search and creation. You can easily control the balance between fidelity and improvisation with a slider. The coin of search and create is in your hand.
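To make the slider idea concrete, here's a minimal sketch. It is a toy, not how any real generative model works: items are plain lists of floats, "improvisation" is just the query plus Gaussian noise, and retrieve_or_create and its fidelity parameter are hypothetical names I'm assuming for illustration.

```python
import random
from typing import List, Sequence

Vector = List[float]


def retrieve_or_create(
    query: Vector,
    database: Sequence[Vector],
    fidelity: float,
    rng: random.Random,
) -> Vector:
    """Blend a memorized item with an improvised one.

    fidelity=1.0 returns the nearest stored item unchanged (pure search).
    fidelity=0.0 returns the query plus noise (pure improvisation, in this toy).
    """
    if not 0.0 <= fidelity <= 1.0:
        raise ValueError("fidelity must be in [0, 1]")

    # "Overfitted" side: the closest item the system has memorized.
    nearest = min(
        database,
        key=lambda item: sum((a - b) ** 2 for a, b in zip(item, query)),
    )

    # "Underfitted" side: something new, loosely guided by the query.
    improvised = [q + rng.gauss(0.0, 1.0) for q in query]

    # The slider: a linear blend between the two.
    return [
        fidelity * n + (1.0 - fidelity) * i
        for n, i in zip(nearest, improvised)
    ]
```

With the slider at 1.0 the function can only return something already in the database; anything below that lets the result drift away from what was stored.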

Conclusion

With large language models (LLMs) multiplying and generative AI on the rise, querying LLMs, especially pretrained language models (PTLMs), has become a popular way to extract knowledge from free-form text on demand.

Despite the problems of reporting bias in the corpus and a lack of robustness to the query, LLMs have been applied quite successfully to downstream tasks like persona-grounded dialog, narrative story generation, and metaphor generation. There's also recent work from COLING 2022 that explores cross-modal models like CLIP as a commonsense knowledge base. You can find my notes about this paper here.

The day before writing this post, I read a tweet from Yann LeCun about Galactica - a generative AI with a search-like interface:

From a quick test, it did a decent job at creating an academic tone of voice, but rapidly drifted far off-topic, and had limited domain knowledge. Nevertheless, it's another milestone for generative AI.

Go ahead. Flip the coin. Watch as it tumbles through the air, flipping end over end. Our eyes never drift from its path. As it reaches the apex of its arc, we know the result no longer matters.