What is Neural Search?

One of the most promising applications of cross-modal machine learning is neural search. The core idea of neural search is to leverage state-of-the-art deep neural networks to build every component of a search system. In short, neural search is deep neural network-powered information retrieval. In academia, it’s often called neural information retrieval (neural IR for short).

Neural search is relevant to many industries. Search engines are critical for every business with an online presence. eCommerce platforms such as Amazon, Alibaba, and Walmart use search to match products with customers. Social media networks like Facebook and LinkedIn use search to connect people with content and groups. The popularity of mobile apps has led to a new type of search: app stores need efficient search algorithms to surface the right app for each user’s needs. Even non-digital businesses can benefit from neural IR technology. For example, hospitals could use it to power next-generation clinical decision support systems by finding similar patients in past medical records.

What is a Neural Search Engine?

A neural search engine is simply a search engine that uses deep learning algorithms to perform its tasks. The three main components of any search engine are the indexer, the query processor, and the ranking algorithm. In a traditional search engine, each of these components would be designed separately using rule-based methods. However, in a neural search engine, all three components are designed using deep learning methods.

This approach has several advantages. First, it allows for end-to-end training of the system, which can lead to better overall performance. Second, because all of the components are unified under one framework (like Jina), it’s easier to share information between them, which can also improve performance. Finally, the same framework can be used to build other types of neural search engines (e.g. an image search engine or a video search engine), which is helpful in domains where multiple modalities are important.

Differences Between Traditional (Symbolic) Search and Neural Search

The biggest difference between neural search and symbolic search is that neural search uses vectors to represent data, whereas symbolic search relies on symbols. This can make neural search more accurate, because it can exploit relationships between data points that symbolic matching cannot capture. For instance, a vector representing an image can contain information about the shape, color, and content of the image, which can be used to better match it with other images or documents.
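To make the contrast concrete, here is a minimal sketch comparing exact symbol matching with vector similarity. The three-dimensional vectors in `EMBEDDINGS` are hand-written for illustration only and are an assumption of this example; in a real system they would come from a trained neural network and have hundreds of dimensions.

```python
import math

# Hand-written toy vectors (illustrative assumption, not real model output).
EMBEDDINGS = {
    "cat":    [0.90, 0.80, 0.10],
    "kitten": [0.85, 0.90, 0.05],
    "car":    [0.10, 0.20, 0.95],
}

def symbolic_match(a, b):
    """Symbolic view: two items match only if the symbols are identical."""
    return a == b

def cosine_similarity(u, v):
    """Vector view: similarity is the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)

def nearest(word):
    """Return the other word whose vector is most similar to `word`."""
    others = [w for w in EMBEDDINGS if w != word]
    return max(
        others,
        key=lambda w: cosine_similarity(EMBEDDINGS[word], EMBEDDINGS[w]),
    )

if __name__ == "__main__":
    print(symbolic_match("cat", "kitten"))  # symbols differ, so no match
    print(nearest("cat"))                   # closest vector to "cat"
```

With these toy vectors, the symbolic comparison treats "cat" and "kitten" as unrelated, while the vector comparison places them close together and "car" far away.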

Additionally, neural search is not limited by the expressiveness of symbols like traditional approaches; rather, it can learn arbitrarily complex representations from data. This gives neural search an advantage when it comes to understanding the semantics of a query and retrieving matching documents.

Also, because neural search is based on learning algorithms rather than hand-crafted rules, it is able to adjust to changing real-world conditions over time (by fine-tuning). This is in contrast to symbolic search, which relies on hand-crafted rules that are brittle and require constant maintenance as the real world changes.

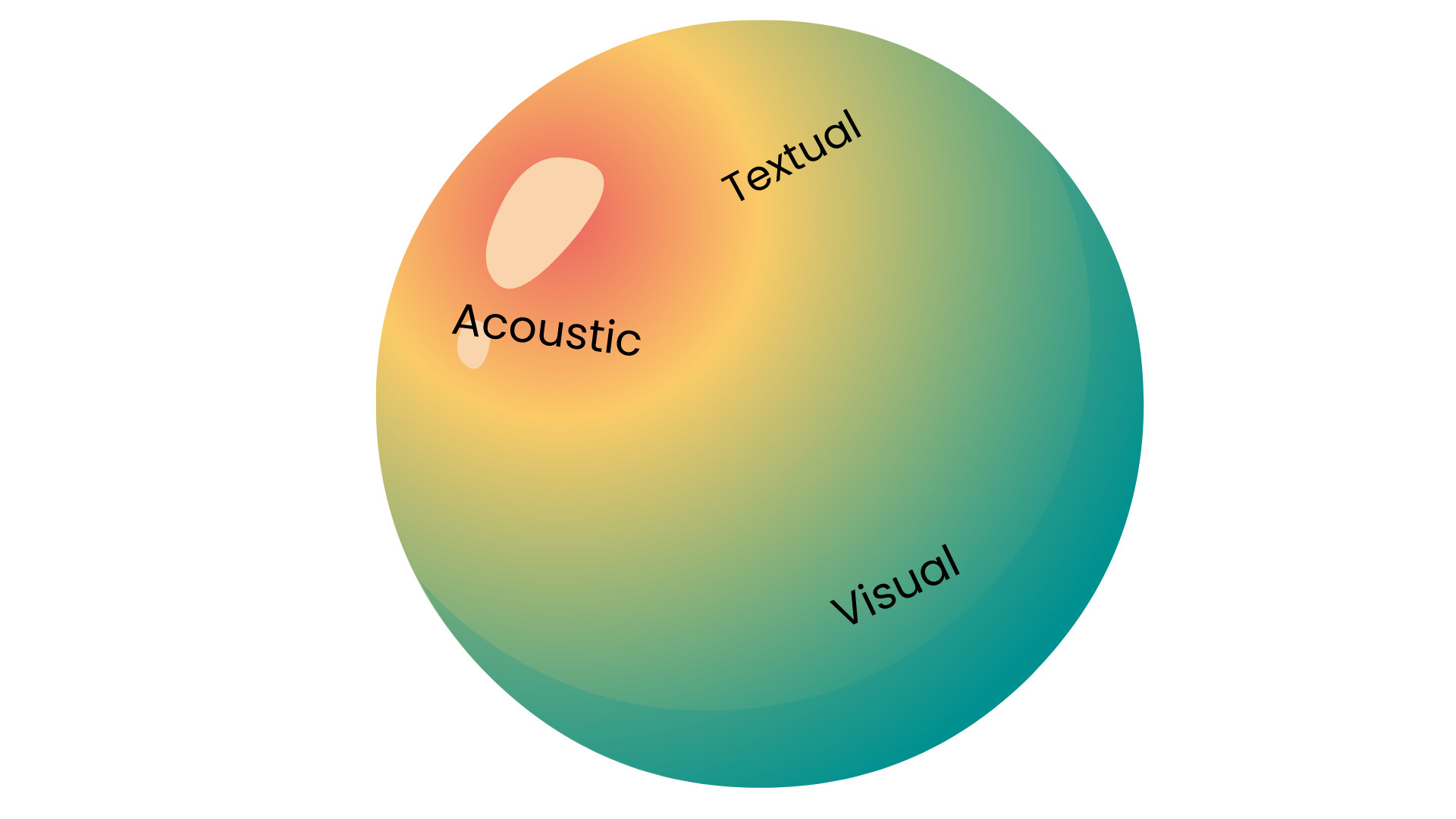

Finally, neural search is not limited to textual data; it can be used with any type of data, including images, videos, audio, and 3D data. Therefore, neural search enables the construction of search systems that are more flexible and powerful than traditional symbolic search engines.

What Are Neural Search’s Advantages?

- Natural language: With neural search, you can specify what you're looking for in natural language and the search engine will return results that match the meaning of your query. This is a major improvement over traditional search engines, which primarily match keywords.

- Improved usability: Neural IR systems can provide users with more natural ways to interact with them such as via conversational interfaces powered by chatbots.

- Increased accuracy: Neural search can be more accurate than traditional search methods because it can learn complex patterns in data that are difficult for humans to encode as rules. For example, a human might write a rule that matches documents containing the word “cat” with queries about pets, but a neural search engine could learn to match documents that never use the word “cat” and instead use words like “kitty” or “feline”. This means that neural search engines can find relevant results even when the user’s query does not exactly match the terms used in the document.

One example is Google Search's use of RankBrain, a machine-learned system that helps interpret queries and rank results, including queries that Google has not seen before.

- Enhanced user experience: The improved accuracy of neural search engines leads to enhanced user experiences and satisfaction. When users get better results, they are more likely to use the system again and recommend it to others.
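The “cat” example above can be seen from the keyword side with a small sketch of exact-term lookup over an inverted index. The documents and function names here are hypothetical and exist only for this illustration; the point is that a query term which never appears literally in a document cannot retrieve it.

```python
from collections import defaultdict

# Hypothetical mini-corpus for illustration.
DOCS = {
    0: "my cat sleeps all day",
    1: "how to train a puppy",
    2: "a playful kitty needs toys",
}

def build_inverted_index(docs):
    """Map each lowercase token to the set of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            index[token].add(doc_id)
    return index

def keyword_search(index, query):
    """Return ids of documents that contain every token of the query."""
    token_sets = [index.get(tok, set()) for tok in query.lower().split()]
    if not token_sets:
        return set()
    return set.intersection(*token_sets)

index = build_inverted_index(DOCS)
print(keyword_search(index, "cat"))     # {0}: only the literal match
print(keyword_search(index, "feline"))  # set(): no document uses this token
```

Documents 0 and 2 are both about cats, yet neither is returned for "feline", and "cat" misses the "kitty" document. A neural search engine compares learned vector representations instead of literal tokens, so it is not bound by this limitation.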

Difficulties in Building a Neural Search Engine

Building a neural search engine is no small feat. While the benefits of using deep learning to power a search engine are numerous, there are also a number of challenges that must be addressed.

- The need for labeled data: Training a neural network requires large amounts of labeled data, which can be difficult (and expensive) to obtain. This is especially true for complex tasks like search, which can involve many different types of data (e.g., text, images, and videos).

- The need for expertise in deep learning algorithms and architectures: Deep learning is a fast-moving field and there are still many open questions about which algorithms and architectures work best for various tasks. As a result, building a neural search engine requires access to experts who can design and implement the right models, and it can be difficult to find talent to build a team or consultants to help with specific challenges.

- The need for computational resources: Deep learning algorithms are computationally intensive and training large models can take days or even weeks on standard hardware. This means that building a neural search engine requires access to powerful computing resources, such as GPUs.

- The need for careful system design: Because all three components of the search engine (indexer, query processor, ranking algorithm) are unified under one framework (e.g. Jina), it’s important to carefully consider how information will flow between them. If not designed properly, this could lead to sub-optimal performance.

Despite these challenges, building a neural search engine is an incredibly exciting endeavor with the potential to revolutionize how we access information on the web.

Guide to Building a Neural Search Engine

Here is a step-by-step guide to building a neural search engine the traditional way:

- Collect and index your data: This step is where you take all of the data that you want to be searchable and put it into a format that can be read by a computer. For example, if you wanted to make all of the text in Wikipedia searchable, you would first need to download every article as an HTML file. Then, you would need to write code to extract the body text from each HTML file (ignoring the headers, sidebar content, etc.). This extracted text would then need to be saved in a format that can be read by your neural search engine (more on this later).

- Choose or build a deep neural network: This step is where you choose or build the deep neural network that will power your neural search engine. There are many different types of deep neural networks, so it’s important to choose one that is well-suited for the task of information retrieval. Popular choices include convolutional neural networks (CNNs), recurrent neural networks (RNNs) and, more recently, Transformer-based encoders such as BERT.

- Train your deep neural network: Once you have chosen or built your deep neural network, it’s time to train it on your indexed data. The goal of this training process is to fine-tune the parameters of your neural network so that it produces good results when used for information retrieval tasks such as searching through indexed documents. There are many different ways to train a deep neural network; some common methods include supervised learning and reinforcement learning.

- Use your trained model for inference: After your deep neural network has been trained, you can use it for inference tasks such as retrieving documents in response to user queries. When using your trained model for inference, there are typically three main components: 1) a document encoder which converts each document into a fixed-length vector representation; 2) a query encoder which converts user queries into vector representations; 3) a similarity function which compares the vectors produced by the document encoder and query encoder and outputs a score indicating how similar they are.
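The inference step above can be sketched as follows. This is a minimal illustration of the document encoder / query encoder / similarity function structure, not a production implementation. The encoder is a stand-in: it hashes character trigrams into a fixed-length vector instead of calling a trained neural network, so it captures spelling overlap rather than meaning. All function names and the `DIM` value are assumptions made for this example.

```python
import math
import re
import zlib

DIM = 64  # fixed vector length; an arbitrary choice for this sketch

def _hashed_trigram_vector(text):
    """Stand-in for a neural encoder (assumption): hash character trigrams
    into a fixed-length, L2-normalized vector."""
    vec = [0.0] * DIM
    cleaned = re.sub(r"\s+", " ", text.lower()).strip()
    padded = f" {cleaned} "
    for i in range(len(padded) - 2):
        trigram = padded[i:i + 3]
        # crc32 is deterministic across runs, unlike Python's built-in hash()
        vec[zlib.crc32(trigram.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def encode_document(text):
    """1) Document encoder: document text -> fixed-length vector."""
    return _hashed_trigram_vector(text)

def encode_query(text):
    """2) Query encoder: query text -> vector in the same space."""
    return _hashed_trigram_vector(text)

def similarity(query_vec, doc_vec):
    """3) Similarity function: dot product, which equals cosine similarity
    here because both vectors are L2-normalized."""
    return sum(q * d for q, d in zip(query_vec, doc_vec))

def build_index(docs):
    """Encode every document once, ahead of query time."""
    return [(doc, encode_document(doc)) for doc in docs]

def search(index, query, top_k=2):
    """Score every indexed document against the query; return the top_k
    (score, document) pairs, best first."""
    query_vec = encode_query(query)
    scored = [(similarity(query_vec, vec), doc) for doc, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]

if __name__ == "__main__":
    docs = [
        "Neural search uses vectors to represent data",
        "Hospitals keep past medical records",
        "App stores surface the right app for each user",
    ]
    for score, doc in search(build_index(docs), "searching with vectors"):
        print(f"{score:.3f}  {doc}")
```

Swapping `_hashed_trigram_vector` for calls to a trained model is what would turn this skeleton into an actual neural search pipeline. For larger collections, the exhaustive scoring loop in `search` is usually replaced with an approximate nearest-neighbor index.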

That seems like a lot of work. But it gets a lot easier if you use a dedicated neural search framework like Jina.

Three Best Neural Search Frameworks on the Market

Jina: An open-source framework for neural search and semantic search. It scales easily with minimal resources thanks to its modular design, library ecosystem and growing community.

Google Cloud Search: Built on the same technology that powers Googlebot, Cloud Search crawls, indexes, and serves content from G Suite—including Drive, Gmail, Sites, Groups, Calendar, Hangouts Chat messages and more—to surface the most relevant information for your users.

Microsoft Azure Search: A cloud search service that provides a complete solution to deliver world-class search experiences for customers and developers. It offers built-in connectors that index data from many commonly used enterprise repositories such as SharePoint Online, Sitecore CMS, Drupal 8+ & 9+, SQL Server databases (on premises & in Azure), Cosmos DB, etc.

Jina: One of the Best Neural Search Frameworks

Neural search is still an emerging technology, and there are not many mature open-source options to choose from. This is where Jina comes in – it is the most complete open-source solution for neural search available today. Not only does it provide all the necessary components for building a neural search system, but it also makes deploying and scaling such a system easy thanks to its cloud-native design.

Another key advantage of using Jina is its performance. Thanks to its unique architecture based on microservices, Jina can scale horizontally very easily without sacrificing performance. And because each component of a Jina system can run in parallel, a neural search system built with Jina can achieve lightning-fast retrieval speeds.

In addition to being scalable and fast, Jina is also very flexible. It supports all popular deep learning frameworks (TensorFlow, PyTorch, MXNet etc.), so you can use whichever one you’re most comfortable with. And because it uses the gRPC protocol internally, you can easily integrate it with other systems using Jina Client or any of the gRPC language bindings (including Java, Python, C++ and Go).

One final advantage of Jina over other tools is its rich ecosystem. In addition to the core framework itself, Jina also provides a number of helpful services and libraries that make working with neural search easier (such as an Executor Hub for sharing trained models and a CLI tool for managing deployments). Plus, thanks to its support for both Kubernetes and Docker Compose, Jina can be used in a wide variety of environments.

Conclusion

In conclusion, neural search is a powerful tool that is rewriting the rules of search. The core idea is to leverage deep neural networks to build every component of a search system. This approach has led to significant advances in the field of information retrieval and has the potential to revolutionize the way we search for information.

Applications that use neural search are able to better understand the user’s intent and provide more relevant results. As the technology matures, it will become increasingly important for search engines and other information retrieval systems to adopt neural search in order to remain competitive. In addition to its potentially significant impact on commercial search applications, neural search also holds great promise for academic research in areas such as digital humanities and scientific literature discovery.

The ability to automatically extract features from unstructured data sources and the improved semantic understanding of queries are two key advantages that make neural search a very promising technology. In addition, because neural models are learned from data, they are more easily adapted to changes in the underlying distribution than rule-based methods. This makes them particularly well suited for applications where the data is constantly changing, such as e-commerce or news recommendation.