Available via

I/O graph

Choose models to compare

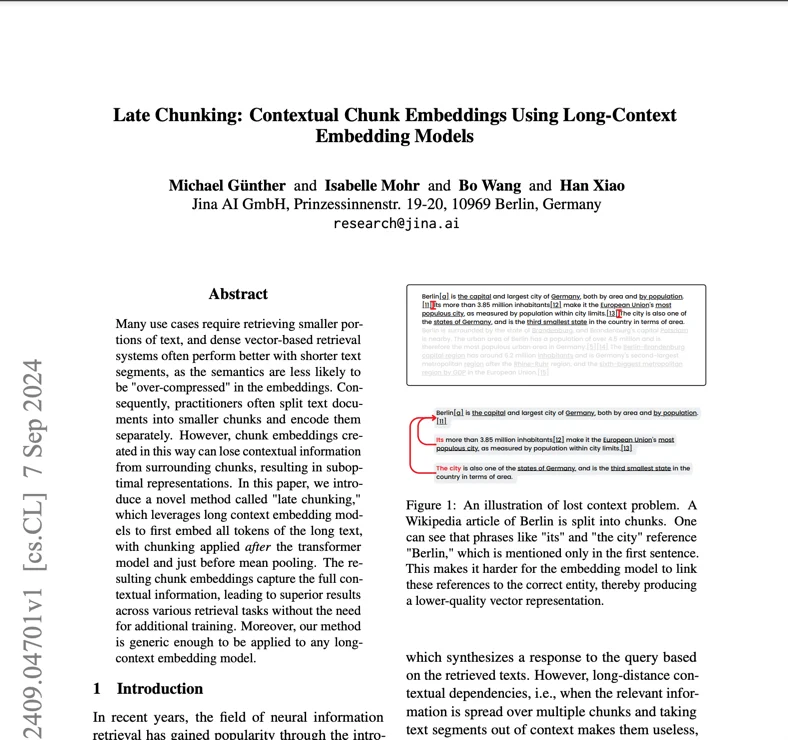

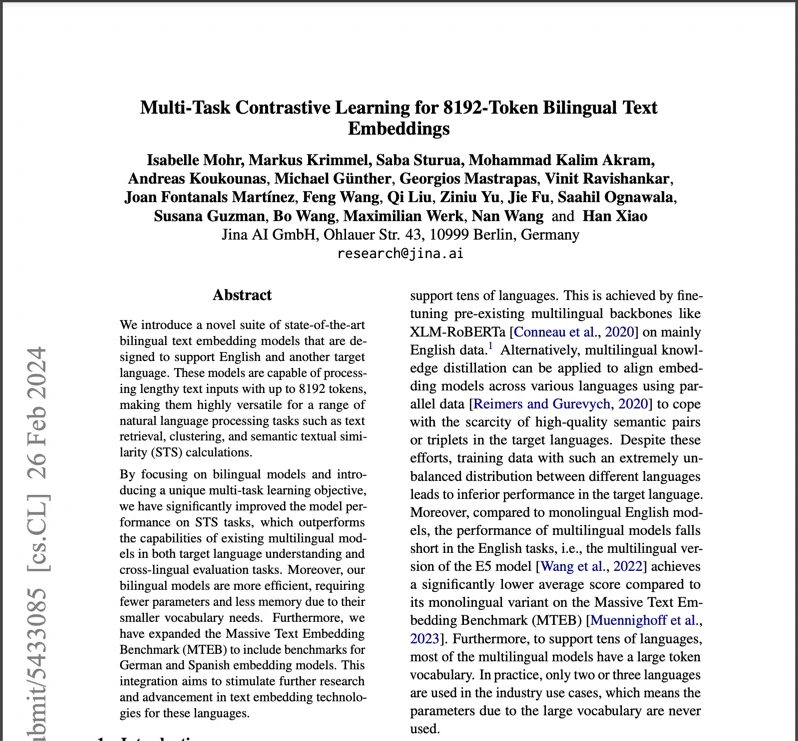

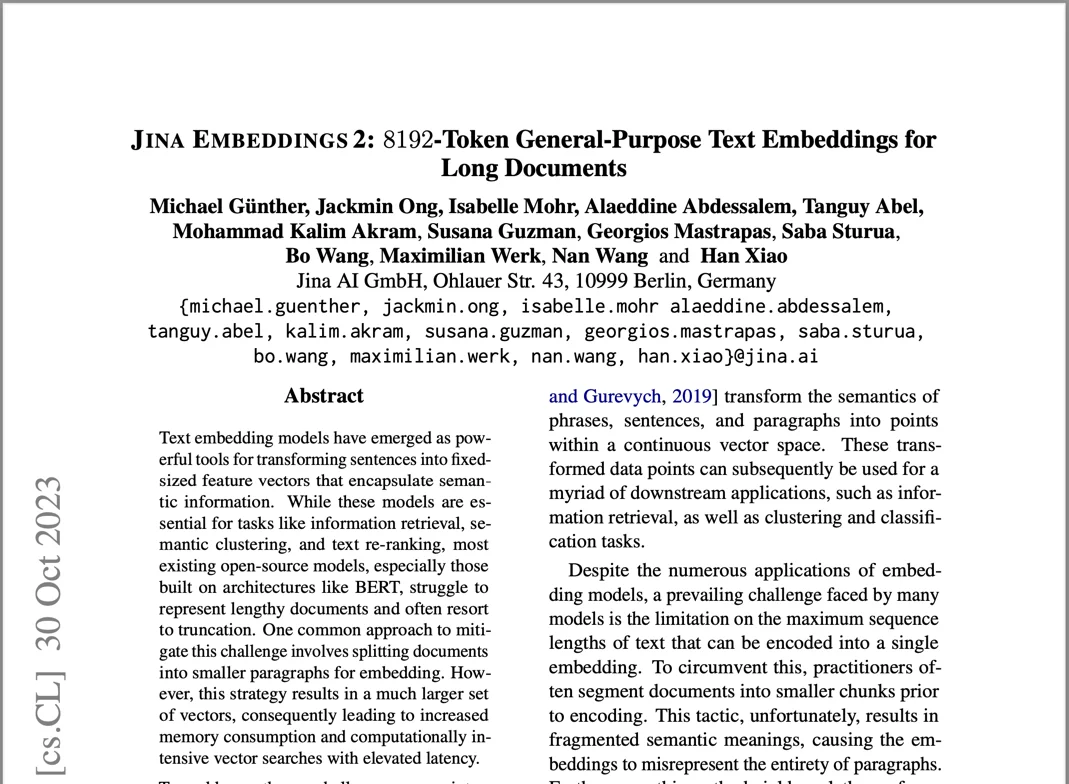

Publications (3)

Overview

Jina Embeddings v2 Base English is a groundbreaking open-source text embedding model that solves the critical challenge of processing long documents while maintaining high accuracy. Organizations struggling with analyzing extensive legal documents, research papers, or financial reports will find this model particularly valuable. It stands out by handling documents up to 8,192 tokens in length—16 times longer than traditional models—while matching the performance of OpenAI's proprietary solutions. With a compact size of 0.27GB and efficient resource usage, it offers an accessible solution for teams seeking to implement advanced document analysis without excessive computational overhead.

Methods

The model's architecture combines a BERT Small backbone with an innovative symmetric bidirectional ALiBi (Attention with Linear Biases) mechanism, eliminating the need for traditional positional embeddings. This architectural choice enables the model to extrapolate far beyond its training length of 512 tokens, handling sequences up to 8,192 tokens without performance degradation. The training process involved two key phases: initial pretraining on the C4 dataset, followed by refinement on Jina AI's curated collection of over 40 specialized datasets. This diverse training data, including challenging negative examples and varied sentence pairs, ensures robust performance across different domains and use cases. The model produces 768-dimensional dense vectors that capture nuanced semantic relationships, achieved with a relatively modest 137M parameters.

Performance

In real-world testing, Jina Embeddings v2 Base English demonstrates exceptional capabilities across multiple benchmarks. It outperforms OpenAI's text-embedding-ada-002 in several key metrics: classification (73.45% vs 70.93%), reranking (85.38% vs 84.89%), retrieval (56.98% vs 56.32%), and summarization (31.6% vs 30.8%). These numbers translate to practical advantages in tasks like document classification, where the model shows superior ability to categorize complex texts, and in search applications, where it better understands user queries and finds relevant documents. However, users should note that performance may vary when dealing with highly specialized domain-specific content not represented in the training data.

Best Practice

To effectively deploy Jina Embeddings v2 Base English, teams should consider several practical aspects. The model requires CUDA-capable hardware for optimal performance, though its efficient architecture means it can run on consumer-grade GPUs. It's available through multiple channels: direct download from Hugging Face, AWS Marketplace deployment, or the Jina AI API with 10M free tokens. For production deployments, AWS SageMaker in the us-east-1 region offers the most scalable solution. The model excels at general-purpose text analysis but may not be the best choice for highly specialized scientific terminology or domain-specific jargon without fine-tuning. When processing long documents, consider breaking them into meaningful semantic chunks rather than arbitrary splits to maintain context integrity. For optimal results, implement proper text preprocessing and ensure clean, well-formatted input data.

Blogs that mention this model