Some things go together, like peanut butter and jelly, tea and biscuits, gin and tonic, or DocArray and Otter.

We live in a world where information is usually multimodal — where texts, images, videos, and other media co-exist and come mixed together. The emergence of large language models has transformed the way we process, understand, and gain insights from this kind of complex, poorly structured multimedia data.

The nature of the Otter model — reading in combinations of images, videos, and texts as prompts — makes DocArray almost perfectly suited to it.

With DocArray, you can represent, send, and store multi-modal data easily. It integrates seamlessly with a wide array of databases, including Weaviate, Qdrant, and ElasticSearch, presenting users with a consistent API format for vector search. With DocArray, you can navigate your multimodal data effortlessly.

DocArray was designed for the data our complex, multimedia, multi-modal world naturally generates, exactly the same kind of data that Otter was designed to process. This article will show you how to bring the two together to get the most out of the Otter model.

tagThe Otter model

The Otter Model is a multimodal model fine-tuned for in-context instruction. It has been adapted from the OpenFlamingo model from LAION, which is an open-source reproduction of DeepMind's Flamingo model. To train it, Otter’s developers created a partially human-curated dataset of 2.8 million instruction sets with examples.

The way in-context instruction works is to not just ask the AI model to respond to some prompt but to give it, as part of the prompt, a few examples of how you want it to respond. Instead of “zero-shot learning”, where the model responds without new information, this is “few-shot learning”, where it’s given a bit of positive instruction in the form of a small example set of inputs and appropriate responses.

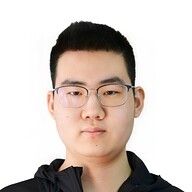

For example:

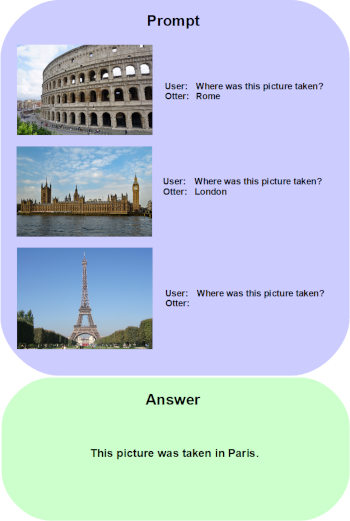

Now using the same images, the same question, but different answers in the examples:

You can see in this example how Otter learns from the two examples given in each prompt what class of answer is required. When the question is “Where was this picture taken?” “Paris” is the same kind of answer as “Rome” and “London”, while “at the Eiffel Tower” is the same kind of answer as “at the Colosseum” and “near Tower Bridge”. This is the kind of processing Otter was trained for.

tagBuilding multi-modal prompts with DocArray

tagPrerequisites

First, Otter is an enormous model and will require a great deal of memory and very powerful GPUs. You will need either two GTX 3090 24G or a single A100.

If you have secured the necessary hardware, the next step is to install DocArray. Assuming you have Python and pip installed and they are on the path, perform the following command in a terminal:

pip install docarrayNext, download the environment for the Otter model from GitHub:

git clone https://github.com/Luodian/Otter.gitFinally, use conda to create a new virtual Python environment and install all necessary dependencies in it:

conda env create -f environment.ymlThe file environment.yml is in the root directory of the git downloaded in the previous step.

tagCreating the classes and functions with DocArray

A multi-modal prompt is a combination of different data types in a single prompt. This is where DocArray can shine because it allows us to tap into the richer, multi-faceted contexts that diverse data types offer.

For this article, we will build a small application that answers questions about pictures, with prompts that contain example images with example answers.

First, we will define the prompt object format in DocArray:

from docarray import BaseDoc

from docarray.typing import ImageUrl

class OtterDoc(BaseDoc):

url: ImageUrl

prompt: str

answer: strA prompt contains an image URL, a string prompt, and a string answer.

Now, we can create a prompt to pass to the model containing multiple instances of OtterDoc, using the three images below:

from docarray import DocList

docs = DocList[OtterDoc](

[

OtterDoc(

url="https://upload.wikimedia.org/wikipedia/commons/1/1f/Colosseo_Romano_Rome_04_2016_6289.jpg",

prompt="Where was this picture taken?",

answer="Rome",

),

OtterDoc(

url="https://upload.wikimedia.org/wikipedia/commons/a/a3/Westminster%2C_2023.jpg",

prompt="Where was this picture taken?",

answer="London",

),

OtterDoc(

url="https://upload.wikimedia.org/wikipedia/commons/d/d4/Eiffel_Tower_20051010.jpg",

prompt="Where was this picture taken?",

answer="", ## leave this blank and Otter will answer

),

]

)

The Otter model has been trained with in-context instruction-response pairs, so it requires a specific text template to correctly process its input.

prompt = f"<image>User: {first_instruction} "

+ f"GPT:<answer> {first_response}<endofchunk>"

+ f"<image>User: {second_instruction} GPT:<answer>"The User and GPT role labels are essential and must be used in the way shown above.

Let’s also write a helper function to convert a DocList containing PromptDoc instances into a prompt:

def format_prompt(docs: DocList) -> str:

conversation = ""

for doc in docs:

conversation += f"<image>User: {doc.prompt} GPT:<answer> {doc.answer}"

if len(doc.answer) != 0:

conversation += "<|endofchunk|>"

return conversationWe will also need a list of images ordered to match the text prompts. For this, we're going to use the load_pil() function from DocArray:

images = [doc.url.load_pil() for doc in docs]tagBuilding the inference engine

Next, we will need to import Otter. It contains functions that automatically download the Otter model:

from otter.modeling_otter import OtterForConditionalGeneration

import transformers

model = OtterForConditionalGeneration.from_pretrained(

"luodian/otter-9b-hf", device_map="auto"

)

tokenizer = model.text_tokenizer

image_processor = transformers.CLIPImageProcessor()Now that we're all set, we can preprocess the input text and image data into Otter’s input vectors:

vision_x = image_processor.preprocess(images, return_tensors="pt")["pixel_values"]

.unsqueeze(1)

.unsqueeze(0)

model.text_tokenizer.padding_side = "left"

lang_x = model.text_tokenizer([format_prompt(docs)], return_tensors="pt")

Finally, we can query the Otter model with the preprocessed input:

generated_text = model.generate(

vision_x=vision_x.to(model.device),

lang_x=lang_x["input_ids"].to(model.device),

attention_mask=lang_x["attention_mask"].to(model.device),

max_new_tokens=256,

num_beams=3,

no_repeat_ngram_size=3,

)

print(f"Result: {model.text_tokenizer.decode(generated_text[0])}")For the example given, this will produce:

Result: This picture was taken in Paris.This is correct, recalling that this is the image Otter is answering questions about:

tagBeyond in-context prompting

DocArray and Otter are useful for more than just multi-modal in-context learning. It can support any multimedia prompt. For example, consider this prompt:

prompt = "<image>User: tell me what's the relation between "

+ "the first image and this image <image> GPT:<answer>"We could readily construct a DocArray extension for these kinds of queries.

For example, using the two images below:

When we did it, we got results like this:

The first image shows two cats sleeping on a couch, while the second

image shows a forest and the sunlight shining through them. The

relation between these two images is that the cats are resting in a

cozy indoor environment, which contrasts with the natural setting

of the forest. The sunlight streaming through the trees, and the

forests adds a sense of warmth and tranquility to the scene, creating

a peaceful atmosphere.This does not exhaust the possibilities for constructing multi-modal queries with DocArray and Otter.

tagConclusion

So, we've had a look at the Otter model and the powerful DocArray library and how they go together like macaroni and cheese. Joining Otter with DocArray brings multi-modal AI to life, enabling it to interpret and respond to mixed data types like images and text in a way that feels too natural to resist.

And hey, we didn't just talk about it, we showed you just how easy it is to build a multi-modal prompt.

tagExplore the possibilities

Check out DocArray’s documentation, GitHub repo, and Discord to explore multi-modal data modeling and what it can do for your use case.

You can also check Otter's GitHub repo and paper.