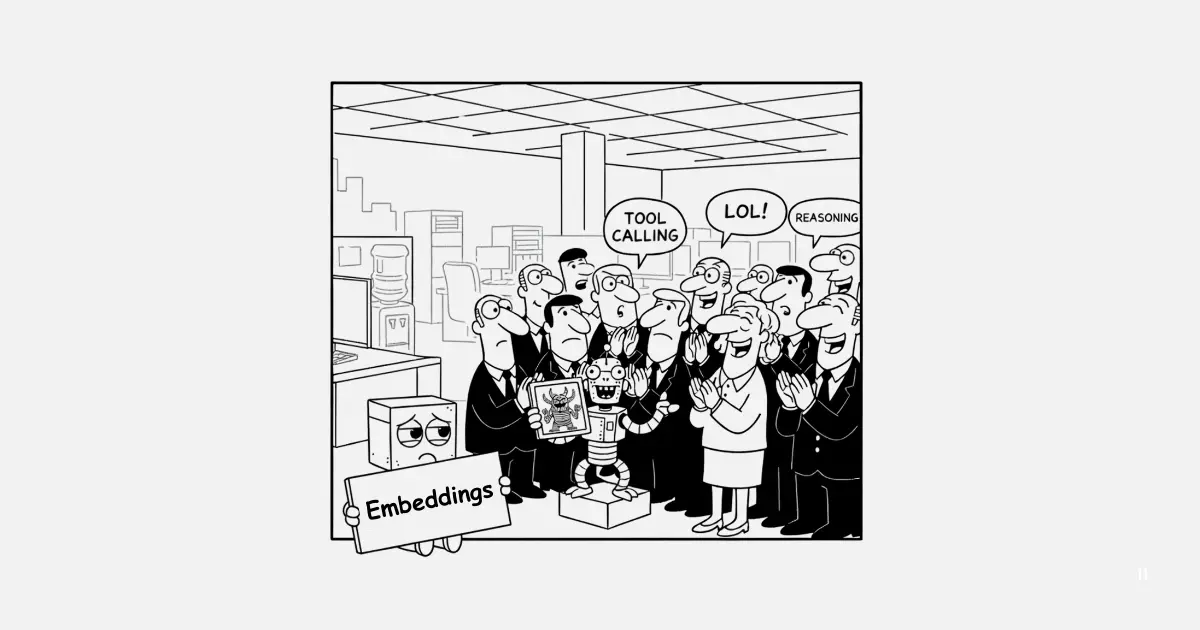

Embedding models are the red-headed stepchild of AI. Not as sexy as image generation, nor as headline-grabbing as LLM chatbots, nor as apocalyptic as predictions of artificial superintelligence, semantic embeddings are esoteric and technical, and regular consumers haven’t got much direct use for them.

Embeddings are vectors that translate semantics into geometric relationships in high-dimensional spaces. But try saying it to someone who doesn’t work with them, and watch their eyes glaze over in boredom.
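To make "geometric relationships" concrete, here is a minimal Python sketch of cosine similarity, the standard way to compare two embedding vectors by the angle between them. The four-dimensional vectors are invented for illustration. Real embedding models output hundreds or thousands of dimensions. The point is only that texts with related meanings should end up pointing in similar directions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings", not produced by any real model.
cat = [0.9, 0.8, 0.1, 0.0]
kitten = [0.85, 0.9, 0.15, 0.05]
invoice = [0.0, 0.1, 0.9, 0.8]

print(round(cosine_similarity(cat, kitten), 3))   # close to 1: related meanings
print(round(cosine_similarity(cat, invoice), 3))  # much lower: unrelated meanings
```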

That doesn’t mean they aren’t important, or even revolutionary. Representing the semantics of texts as vectors in high-dimensional spaces has been around since the 1950s, but neural networks with transformer architectures have extended it to speech, images, video, and pretty much every kind of digital data. The qualitative improvements in computational semantics in the last decade have been transformational, and the implications for search engines, recommendation algorithms, automated classifiers, and decision systems have been enormous, even if they rarely make the news.

One thing missed in this underreported revolution is that most neural networks, including recent transformer-based ones, are implicitly embedding models. Large language models, generative image models, and machine translation systems all work by translating their inputs into high-dimensional vector spaces that preserve their essential semantics — i.e., create embeddings — and then use that to produce an output. Those models can be readily repurposed to generate embeddings for information retrieval and other purposes.

In this article, we’ll discuss the main issues involved in using generative language models (e.g., LLMs) to produce text embeddings and how we are using this work to improve our models.

## Encoders and Decoders

The terms “encoder” and “decoder” get used a lot in AI model development, but often in very confusing ways. The terms relate to concepts in electrical engineering and information theory and go back to an era when neural networks were more theoretical than practical.

In its original conception, an encoder converts its input into a machine-usable form, and a decoder does the opposite, converting data into something more humanly useful. If that seems very abstract, that’s because it is. To help, let’s look at some concrete examples.

In traditional digital electronics (not AI), an encoder is a device that converts a rich input source, like an analog sound captured by a microphone, into a binary sequence that can be stored in computer memory, on physical digital media, or transmitted over a digital network like the internet.
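For readers who want the electronics example in code, here is a minimal sketch of a matched encoder and decoder. It assumes 8-bit pulse-code modulation (PCM), one common way to digitize audio. The encoder quantizes samples in the range -1.0 to 1.0 into bytes, and the decoder maps the bytes back, losing at most half a quantization step of precision.

```python
import math

LEVELS = 255  # 8-bit samples: integer codes 0..255

def encode(samples: list[float]) -> bytes:
    """PCM-style encoder: quantize samples in [-1.0, 1.0] to one byte each."""
    return bytes(round((s + 1.0) / 2.0 * LEVELS) for s in samples)

def decode(data: bytes) -> list[float]:
    """Matching decoder: map each byte back to an approximate sample value."""
    return [code / LEVELS * 2.0 - 1.0 for code in data]

# A 440 Hz sine tone sampled 16 times at 8 kHz stands in for the microphone signal.
signal = [math.sin(2 * math.pi * 440 * n / 8000) for n in range(16)]
encoded = encode(signal)
restored = decode(encoded)
max_error = max(abs(a - b) for a, b in zip(signal, restored))
print(len(encoded), "bytes, max error", round(max_error, 4))
```

The stored form is compact and machine-friendly, while the decoded form is close to, but not exactly, the original signal.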

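A lot of the early work that led to embedding models and generative AI was in machine translation, where the basic architecture matched this picture closely: an encoder converted a sentence in the source language into an internal vector representation, and a decoder turned that representation into a sentence in the target language.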

You can look at an embedding model as just the “encoder” half of an encoder-decoder machine translation model, with an added adapter for producing embedding vectors.

And you can look at a generative model as just the “decoder” half.

It can be very counterintuitive to think about neural network models this way. The relationship between data modeling, semantics, and compression is complicated and very abstract, but it’s important to how we understand AI models. The difference between encoder and decoder models is about how they’re used, not about their architecture.

## Transformer-Based Encoders and Decoders are the Same

Encoders and decoders are fairly abstract concepts, but if we’re talking about transformer-based models, they both have almost identical architectures.

The examples below are indicative of generative language models and text embedding models, but with some small changes, the same thing applies to other media types.

Both encoder-only and decoder-only models take texts as input and apply tokenization and then vectorization (essentially looking up each token in a dictionary and substituting the corresponding vector), then add padding as appropriate. The result is a fixed-length sequence of token vectors that serves as input to the rest of the model.
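A toy version of that input pipeline might look like this. The five-word vocabulary, whitespace tokenizer, and three-dimensional embedding table are all hypothetical. Real models use subword tokenizers and learned tables with tens of thousands of rows.

```python
# Hypothetical toy vocabulary and 3-dimensional embedding table.
VOCAB = {"<pad>": 0, "<unk>": 1, "embeddings": 2, "are": 3, "vectors": 4}
EMBEDDING_TABLE = [
    [0.0, 0.0, 0.0],  # <pad>
    [0.1, 0.1, 0.1],  # <unk>
    [0.7, 0.2, 0.5],  # embeddings
    [0.1, 0.9, 0.3],  # are
    [0.6, 0.1, 0.8],  # vectors
]
MAX_LEN = 6

def tokenize(text: str) -> list[int]:
    """Whitespace tokenizer mapping each word to an id (unknown words -> <unk>)."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]

def vectorize(token_ids: list[int], max_len: int = MAX_LEN) -> tuple[list[int], list[list[float]]]:
    """Truncate or pad to max_len, then look up one vector per token id."""
    ids = token_ids[:max_len]
    ids = ids + [VOCAB["<pad>"]] * (max_len - len(ids))
    return ids, [EMBEDDING_TABLE[i] for i in ids]

ids, vectors = vectorize(tokenize("Embeddings are vectors"))
print(ids)  # [2, 3, 4, 0, 0, 0]
```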

In a decoder-only generative language model, this input vector is passed into a transformer model and then to a decoder adapter that transforms its output into one or more tokens. Text-generating models then append these new tokens to the input and run again to add further tokens.
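The append-and-rerun loop can be sketched as follows. `toy_model` is a hypothetical stand-in for the transformer plus decoder adapter. The loop uses greedy decoding (always taking the single predicted token), which is only one of several possible decoding strategies.

```python
from typing import Callable

def generate(prompt_ids: list[int], next_token: Callable[[list[int]], int],
             eos_id: int, max_new_tokens: int = 10) -> list[int]:
    """Greedy autoregressive loop: predict a token, append it, and run again."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        token = next_token(ids)  # the model sees everything produced so far
        ids.append(token)
        if token == eos_id:      # stop once the end-of-sequence token appears
            break
    return ids

# Hypothetical stand-in for a model: counts upward, then emits EOS (id 0) after 5.
def toy_model(ids: list[int]) -> int:
    return ids[-1] + 1 if ids[-1] < 5 else 0

print(generate([1, 2], toy_model, eos_id=0))  # [1, 2, 3, 4, 5, 0]
```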

Nearly all of this picture is shared with transformer-based text embedding architectures, except that instead of a decoder apparatus producing tokens, an encoder apparatus produces an embedding.
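The article doesn’t specify what the encoder apparatus does internally. Two widely used options are mean pooling (averaging the final hidden states of all real tokens) and last-token pooling (taking the final hidden state of the last real token). Last-token pooling is a natural choice for causal backbones, where only the last token has seen the whole input. Here is a minimal NumPy sketch of both over a hypothetical matrix of hidden states.

```python
import numpy as np

def mean_pool(hidden: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Average the hidden states of real (non-padding) tokens.

    hidden: (seq_len, dim) final-layer states; mask: (seq_len,) with 1 = real token.
    """
    weights = mask[:, None].astype(hidden.dtype)
    return (hidden * weights).sum(axis=0) / weights.sum()

def last_token_pool(hidden: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Return the hidden state of the last real token."""
    last = int(np.nonzero(mask)[0][-1])
    return hidden[last]

# Hypothetical hidden states: three real tokens followed by one padding position.
hidden = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [9.0, 9.0]])
mask = np.array([1, 1, 1, 0])
print(mean_pool(hidden, mask))        # averages only the first three rows
print(last_token_pool(hidden, mask))  # the third row, ignoring padding
```

Either pooled vector is usually L2-normalized before being compared with other embeddings.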

The main differences between the two aren’t fundamentally architectural; they’re in how they’re trained and used.

Generative language models are typically unidirectional (or “causal”): they generate the next token by looking only at the tokens that precede it. Embedding models are usually bidirectional (or “non-causal”): every token can attend to every other token in the input, both before and after it.
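In a transformer, this difference comes down to the attention mask. In a causal model, each token may attend to itself and to every token before it. In a bidirectional model, every token may attend to every other token. Here is a minimal sketch of both masks, where `True` means attention is allowed.

```python
def causal_mask(n: int) -> list[list[bool]]:
    """Entry [i][j] is True if token i may attend to token j (only when j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def bidirectional_mask(n: int) -> list[list[bool]]:
    """Every token may attend to every token, before or after it."""
    return [[True] * n for _ in range(n)]

for row in causal_mask(4):
    print("".join("x" if allowed else "." for allowed in row))
# x...
# xx..
# xxx.
# xxxx
```

The bidirectional mask is simply all `x`, which is why converting a causal model to bidirectional attention is, mechanically, a small change.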

This affects how they’re trained. Generative models learn from large amounts of text by predicting each token from the tokens that precede it. Embedding models are usually pre-trained using “masked language modeling” (MLM) techniques: some words in the input are hidden, and the model learns to predict each missing word from the words both before and after the gap.
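The two objectives turn the same text into different training examples. This sketch is simplified and its helper names are hypothetical. Real causal training scores every position in parallel using a causal mask. BERT-style MLM also sometimes substitutes a random or unchanged token instead of `[MASK]`.

```python
import random

MASK = "[MASK]"

def next_token_pairs(tokens: list[str]) -> list[tuple[list[str], str]]:
    """Causal LM objective: each prefix is paired with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def mask_tokens(tokens: list[str], positions: set[int]) -> tuple[list[str], dict[int, str]]:
    """MLM objective: hide the tokens at `positions`; the targets are the hidden tokens."""
    inputs = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in positions}
    return inputs, targets

def sample_positions(n: int, mask_prob: float = 0.15, seed: int = 0) -> set[int]:
    """Choose roughly mask_prob of the positions at random (BERT uses about 15%)."""
    rng = random.Random(seed)
    return {i for i in range(n) if rng.random() < mask_prob}

tokens = "the cat sat on the mat".split()
print(next_token_pairs(tokens)[1])  # (['the', 'cat'], 'sat'): left context only
print(mask_tokens(tokens, {2}))     # 'sat' must be inferred from both sides
```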

Nonetheless, in principle, converting a generative LLM into an embedding model just means replacing the decoder apparatus with an encoder and fine-tuning it for the new use.

So why don’t we do that?

## Transformer-Based Encoders and Decoders are Different

Researchers have offered three big reasons not to convert generative language models into text embedding models, but each one has come under question lately.

Bidirectional (“non-causal”) attention is better than unidirectional (“causal”) attention.

Ever since the seminal BERT model, we’ve taken for granted that bidirectional attention is better than unidirectional simply because more information is available. The model can see tokens in their full context, not just in the context of the preceding words.

However, more recent studies (Wang et al. 2023, Gisserot-Boukhlef et al. 2025) suggest that there isn’t any big difference in outcomes between bidirectional and unidirectional pre-training, although there are some small advantages to each, depending on what factors you’re looking at.

Generative LLMs suffer from the curse of dimensionality, making them bad at generalization.

LLMs are not designed to be good embedding models. Their internal vector representations (the hidden states) typically have far more dimensions than an embedding model would have. The models are too big, and they can fail to generalize because a very large representation space doesn’t force them to learn compact representations.

This problem is sometimes called the curse of dimensionality, and it plagues neural networks.
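One well-known symptom usually grouped under this name is distance concentration. As dimensionality grows, the nearest and farthest neighbors of a point end up almost equally far away, so distances carry less information. The sketch below demonstrates this on uniformly random points by computing the relative contrast, (max - min) / min, of distances from a random query. The random data is an illustrative stand-in, not a measurement of any LLM’s hidden states.

```python
import numpy as np

def relative_contrast(dim: int, n_points: int = 1000, seed: int = 0) -> float:
    """(max - min) / min Euclidean distance from a random query to random points in [0, 1]^dim."""
    rng = np.random.default_rng(seed)
    points = rng.random((n_points, dim))
    query = rng.random(dim)
    dists = np.linalg.norm(points - query, axis=1)
    return float((dists.max() - dists.min()) / dists.min())

# The contrast shrinks sharply as the number of dimensions grows.
for dim in (2, 10, 100, 1000):
    print(dim, round(relative_contrast(dim), 3))
```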

Unlike embedding models, generative language models are graded on a curve. Generative models don’t use those internal embedding vectors directly; they use the language that comes from transforming them back into tokens. If the model seems to talk fluently and coherently, we rank it highly. Embedding models, in contrast, have actual tasks to perform that demand correct generalization. So the curse of dimensionality doesn’t seem so important for generative models, but is lethal to an embedding model.

Recently, however, generative language models have shaken off some of the curse of dimensionality. High-performance generative small language models (SLMs) with fewer than 10 billion parameters are now quite commonplace. Although they were developed out of a desire for smaller, more efficient language models rather than any interest in embeddings, we can still take advantage of them to build better embedding models.

People have already tried it, and it didn’t work very well.

The duality of embedding models and generative models is not new, but embedding models adapted from generative ones have typically been far larger than comparably performing embedding models. OpenAI adapted GPT-3 for embeddings in 2022, but despite having roughly 175 billion parameters, it performs about on par with MLM-trained embedding models of under a billion parameters.

However, the NV-Embed model family, which NVIDIA adapted from the Mistral-7B SLM, has achieved state-of-the-art performance on standard embedding benchmarks. This is proof enough that adapting generative language models can work well in practice.

## Benefits of Adapting Generative Models

Repurposing decoder-style generative language models for encoder-style embeddings may not have disadvantages, but it doesn’t seem to have any advantages either, at least on paper. But in practice, there are a few tangible benefits.

First, generative language models are the focus of a lot of research and money because they’re the sexy part of AI. Embedding models adapted from them can appropriate that extra attention and effort at little extra cost. Developing and training a new embedding model from scratch is an expensive operation compared to the contrastive fine-tuning used to optimize a pre-trained model, so this purely economic benefit is pretty significant.
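The article doesn’t spell out the training loss. A common choice for contrastive fine-tuning of embedding models is an InfoNCE-style loss with in-batch negatives, where each query should score its own paired document higher than every other document in the batch. Here is a NumPy sketch of that forward computation. The temperature of 0.05 is an illustrative assumption, not a value taken from any Jina model. A real training run would compute this loss in an autodiff framework to get gradients.

```python
import numpy as np

def info_nce_loss(queries: np.ndarray, docs: np.ndarray, temperature: float = 0.05) -> float:
    """In-batch contrastive loss: row i of `docs` is the positive for row i of `queries`,
    and every other row in the batch serves as a negative."""
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    d = docs / np.linalg.norm(docs, axis=1, keepdims=True)
    logits = q @ d.T / temperature                 # scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)    # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))     # cross-entropy on the matching pairs

rng = np.random.default_rng(0)
queries = rng.normal(size=(4, 8))
matched = queries + 0.01 * rng.normal(size=(4, 8))  # positives close to their queries
print(info_nce_loss(queries, matched))        # small: each query picks out its own positive
print(info_nce_loss(queries, matched[::-1]))  # large: positives are mismatched
```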

As an example, our recently released models jina-code-embeddings-1.5b and jina-code-embeddings-0.5b are the first code embedding models based on code generation backbones, specifically Qwen2.5-Coder-1.5B and Qwen2.5-Coder-0.5B, respectively. We improved their embedding performance considerably because we were able to focus all of our attention on training them for good embeddings instead of doing complex and costly pre-training.

Second, it’s possible to transfer the capabilities of generative models to new domains.

The jina-embeddings-v4 model does exactly that. It adapts Qwen2.5-VL-3B-Instruct, a 3.8-billion-parameter vision-language model, for multimodal embeddings. Its exceptional performance as an embedding model for diagrams, screenshots, and other visual document image inputs relies on the generative model’s pre-existing natural language understanding abilities. Instead of training a model from scratch, first to understand language, then to parse printed text in images, and finally to produce good embeddings, we were able to transfer knowledge from a pre-existing image embedding model and a generative language model, focusing instead on contrastive training for embedding tasks.

## Embeddings Get the Job Done

All else being equal, there isn’t a clear advantage to using generative language models as the backbone for an embedding model. If you have to build a text embedding model from scratch, it doesn’t seem to make much difference if you use bidirectional or unidirectional pre-training. Investing in data quality, task specialization, and fine-tuning for embedding quality counts for much more.

But all else is not equal.

Embedding models see nothing like the funding that generative language models get. Using generative models as our backbones lets us build on the resources their developers devoted to pre-training, so we can devote more of our own effort to making better embedding models.

Embeddings face special problems because they don’t have the impressive-looking demos that generative AI is so famous for. Instead, they have important real-world use cases in which accuracy, quality, and cost count for everything. Information retrieval, classification, recommendation systems, spam and fraud detection, and content moderation are all actual jobs that embedding models are doing now.
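To ground the retrieval case, here is a minimal sketch that ranks stored document embeddings by cosine similarity to a query embedding. The vectors and the document labels in the comments are invented. In practice the vectors would come from an embedding model, and large collections typically use an approximate nearest-neighbor index rather than scoring every document.

```python
import numpy as np

def top_k(query_vec: np.ndarray, doc_matrix: np.ndarray, k: int = 2) -> list[int]:
    """Return indices of the k documents most similar to the query by cosine similarity."""
    q = query_vec / np.linalg.norm(query_vec)
    d = doc_matrix / np.linalg.norm(doc_matrix, axis=1, keepdims=True)
    scores = d @ q
    return [int(i) for i in np.argsort(-scores)[:k]]

# Hypothetical precomputed embeddings for three documents and one query.
docs = np.array([[0.9, 0.1, 0.0],   # "refund policy"
                 [0.1, 0.9, 0.1],   # "shipping times"
                 [0.8, 0.2, 0.1]])  # "returns and exchanges"
query = np.array([1.0, 0.1, 0.0])   # "how do I get my money back?"
print(top_k(query, docs))  # [0, 2]
```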

Embeddings aren’t so sexy, but they do get the job done. So if we can reappropriate a little of the better-funded work from the sexy side of AI to its red-headed stepchildren, that seems fair enough.