Classification is a common downstream task for embeddings. Text embeddings can categorize text into predefined labels for spam detection or sentiment analysis. Multimodal embeddings like jina-clip-v1 can be applied to content-based filtering or tag annotation. Recently, classification has also found use in routing queries to appropriate LLMs based on complexity and cost: simple arithmetic queries might be routed to a small language model, while complex reasoning tasks could be directed to more powerful but costlier LLMs.

Today, we're introducing the new Classifier API from Jina AI's Search Foundation. Supporting zero-shot and few-shot online classification, it's built on our latest embedding models like jina-embeddings-v3 and jina-clip-v1. The Classifier API builds on online passive-aggressive learning, allowing it to adapt to new data in real time. Users can begin with a zero-shot classifier and use it immediately. They can then incrementally update the classifier as new examples arrive or when concept drift occurs. This enables efficient, scalable classification across various content types without extensive initial labeled data. Users can also publish their classifiers for public use. When we release new embedding models, such as the upcoming multilingual jina-clip-v2, users can immediately access them through the Classifier API, ensuring up-to-date classification capabilities.

Zero-Shot Classification

The Classifier API offers powerful zero-shot classification capabilities, allowing you to categorize text or images without pre-training on labeled data. Every classifier starts with zero-shot capabilities, which can later be enhanced with additional training data or updates - a topic we'll explore later in this post.

Example 1: Route LLM Requests

Here's an example using the classifier API for LLM query routing:

curl https://api.jina.ai/v1/classify \

-H "Content-Type: application/json" \

-H "Authorization: Bearer YOUR_API_KEY_HERE" \

-d '{

"model": "jina-embeddings-v3",

"labels": [

"Simple task",

"Complex reasoning",

"Creative writing"

],

"input": [

"Calculate the compound interest on a principal of $10,000 invested for 5 years at an annual rate of 5%, compounded quarterly.",

"分析使用CRISPR基因编辑技术在人类胚胎中的伦理影响。考虑潜在的医疗益处和长期社会后果。",

"AIが自意識を持つディストピアの未来を舞台にした短編小説を書いてください。人間とAIの関係や意識の本質をテーマに探求してください。",

"Erklären Sie die Unterschiede zwischen Merge-Sort und Quicksort-Algorithmen in Bezug auf Zeitkomplexität, Platzkomplexität und Leistung in der Praxis.",

"Write a poem about the beauty of nature and its healing power on the human soul.",

"Translate the following sentence into French: The quick brown fox jumps over the lazy dog."

]

}'

This example demonstrates the use of jina-embeddings-v3 to route user queries in multiple languages (English, Chinese, Japanese, and German) into three categories, which correspond to three different sizes of LLMs. The API response format is as follows:

{

"usage": {"total_tokens": 256, "prompt_tokens": 256},

"data": [

{"object": "classification", "index": 0, "prediction": "Simple task", "score": 0.35216382145881653},

{"object": "classification", "index": 1, "prediction": "Complex reasoning", "score": 0.34310275316238403},

{"object": "classification", "index": 2, "prediction": "Creative writing", "score": 0.3487184941768646},

{"object": "classification", "index": 3, "prediction": "Complex reasoning", "score": 0.35207709670066833},

{"object": "classification", "index": 4, "prediction": "Creative writing", "score": 0.3638903796672821},

{"object": "classification", "index": 5, "prediction": "Simple task", "score": 0.3561534285545349}

]

}

The response includes:

- usage: Information about token usage.
- data: An array of classification results, one for each input.
  - Each result contains the predicted label (prediction) and a confidence score (score). The score for each class is computed via softmax normalization, so the scores are probabilities that sum to 1 across all classes. For zero-shot, the softmax is applied to cosine similarities between the input and label embeddings under the classification task-LoRA; for few-shot, it is applied to learned linear transformations of the input embedding for each class.
  - The index corresponds to the position of the input in the original request.
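To make the zero-shot scoring description concrete, here is a minimal sketch of softmax over cosine similarities. It uses toy 2-D vectors in place of real embeddings and assumes no temperature or scaling factor, since the post does not say whether one is applied; the function names are ours, not part of the API.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def softmax(values):
    """Numerically stable softmax; the outputs sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_scores(input_vec, label_vecs):
    """Score one input embedding against every label embedding."""
    return softmax([cosine(input_vec, lv) for lv in label_vecs])

# Toy 2-D "embeddings", purely illustrative (real embeddings are much larger).
labels = ["Simple task", "Complex reasoning", "Creative writing"]
label_vecs = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
scores = zero_shot_scores([0.9, 0.1], label_vecs)
best = max(range(len(labels)), key=scores.__getitem__)
print(labels[best], [round(s, 3) for s in scores])
```

Because cosine similarities live in a narrow range, an unscaled softmax over them is fairly flat. That may be why the scores in the response above sit close to 1/3 for three labels; the predicted label matters more than the absolute score.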

Example 2: Categorize Image & Text

Let's explore a multimodal example using jina-clip-v1. This model can classify both text and images, making it ideal for content categorization across various media types. Consider the following API call:

curl https://api.jina.ai/v1/classify \

-H "Content-Type: application/json" \

-H "Authorization: Bearer YOUR_API_KEY_HERE" \

-d '{

"model": "jina-clip-v1",

"labels": [

"Food and Dining",

"Technology and Gadgets",

"Nature and Outdoors",

"Urban and Architecture"

],

"input": [

{"text": "A sleek smartphone with a high-resolution display and multiple camera lenses"},

{"text": "Fresh sushi rolls served on a wooden board with wasabi and ginger"},

{"image": "https://picsum.photos/id/11/367/267"},

{"image": "https://picsum.photos/id/22/367/267"},

{"text": "Vibrant autumn leaves in a dense forest with sunlight filtering through"},

{"image": "https://picsum.photos/id/8/367/267"}

]

}'

Note that images are passed as URLs in the request; you can also use a base64 string to represent an image. The API returns the following classification results:

{

"usage": {"total_tokens": 12125, "prompt_tokens": 12125},

"data": [

{"object": "classification", "index": 0, "prediction": "Technology and Gadgets", "score": 0.30329811573028564},

{"object": "classification", "index": 1, "prediction": "Food and Dining", "score": 0.2765541970729828},

{"object": "classification", "index": 2, "prediction": "Nature and Outdoors", "score": 0.29503118991851807},

{"object": "classification", "index": 3, "prediction": "Urban and Architecture", "score": 0.2648046910762787},

{"object": "classification", "index": 4, "prediction": "Nature and Outdoors", "score": 0.3133063316345215},

{"object": "classification", "index": 5, "prediction": "Technology and Gadgets", "score": 0.27474141120910645}

]

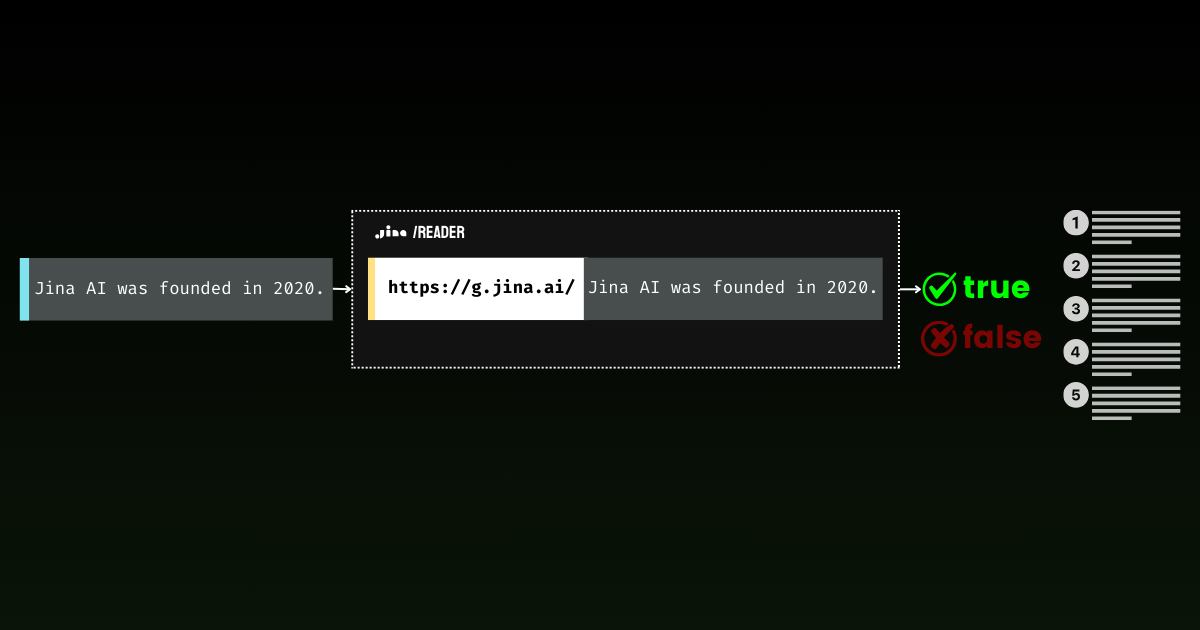

}tagExample 3: Detect If Jina Reader Gets Genuine Content

An interesting application of zero-shot classification is determining website accessibility through Jina Reader. While this might seem like a straightforward task, it's surprisingly complex in practice. Blocked messages vary widely from site to site, appearing in different languages and citing various reasons (paywalls, rate limits, server outages). This diversity makes it challenging to rely on regex or fixed rules to capture all scenarios.

import requests

import json

response1 = requests.get('https://r.jina.ai/https://jina.ai')

url = 'https://api.jina.ai/v1/classify'

headers = {

'Content-Type': 'application/json',

'Authorization': 'Bearer YOUR_API_KEY_HERE'

}

data = {

'model': 'jina-embeddings-v3',

'labels': ['Blocked', 'Accessible'],

'input': [{'text': response1.text[:8000]}]

}

response2 = requests.post(url, headers=headers, data=json.dumps(data))

print(response2.text)

The script fetches content via r.jina.ai and classifies it as "Blocked" or "Accessible" using the Classifier API. For instance, https://r.jina.ai/https://www.crunchbase.com/organization/jina-ai would likely be "Blocked" due to access restrictions, while https://r.jina.ai/https://jina.ai should be "Accessible".

{"usage":{"total_tokens":185,"prompt_tokens":185},"data":[{"object":"classification","index":0,"prediction":"Blocked","score":0.5392698049545288}]}

The Classifier API can effectively distinguish between genuine content and blocked results from Jina Reader.

This example leverages jina-embeddings-v3 and offers a quick, automated way to monitor website accessibility, which is useful for content aggregation or web scraping systems, especially in multilingual settings.
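In a monitoring pipeline you would typically turn the response into a yes/no decision. The sketch below parses a response shaped like the one above and applies a confidence threshold; the `is_blocked` helper and the `min_score` values are our own illustrative choices, not something the API prescribes.

```python
def is_blocked(response_json, min_score=0.5):
    """Return True if the first result is 'Blocked' with at least min_score confidence."""
    result = response_json["data"][0]
    return result["prediction"] == "Blocked" and result["score"] >= min_score

# Sample response copied from the example above.
sample = {
    "usage": {"total_tokens": 185, "prompt_tokens": 185},
    "data": [{"object": "classification", "index": 0,
              "prediction": "Blocked", "score": 0.5392698049545288}],
}
print(is_blocked(sample))        # default threshold
print(is_blocked(sample, 0.6))   # a stricter, hypothetical threshold
```

With only two labels, a score just above 0.5 is a narrow win, so a stricter threshold trades missed blocks for fewer false alarms.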

Example 4: Filtering Statements from Opinions for Grounding

Another intriguing application of zero-shot classification is filtering statement-like claims from opinions in long documents. Note that the classifier itself cannot determine if something is factually true. Instead, it identifies text that is written in the style of a factual statement. Such text can then be verified through a grounding API, a step that is often quite expensive. This two-step process is key to effective fact-checking: first filter out opinions and feelings, then send the remaining statements for grounding.

Consider this paragraph about the Space Race in the 1960s:

The Space Race of the 1960s was a breathtaking testament to human ingenuity. When the Soviet Union launched Sputnik 1 on October 4, 1957, it sent shockwaves through American society, marking the undeniable start of a new era. The silvery beeping of that simple satellite struck fear into the hearts of millions, as if the very stars had betrayed Western dominance. NASA was founded in 1958 as America's response, and they poured an astounding $28 billion into the Apollo program between 1960 and 1973. While some cynics claimed this was a waste of resources, the technological breakthroughs were absolutely worth every penny spent. On July 20, 1969, Neil Armstrong and Buzz Aldrin achieved the most magnificent triumph in human history by walking on the moon, their footprints marking humanity's destiny among the stars. The Soviet space program, despite its early victories, ultimately couldn't match the superior American engineering and determination. The moon landing was not just a victory for America - it represented the most inspiring moment in human civilization, proving that our species was meant to reach beyond our earthly cradle.

This text intentionally mixes different types of writing - from statement-like claims (such as "Sputnik 1 launched on October 4, 1957"), to clear opinions ("breathtaking testament"), emotional language ("struck fear into the hearts"), and interpretive claims ("marking the undeniable start of a new era").

The zero-shot classifier's job is purely semantic - it identifies whether a piece of text is written as a statement or as an opinion/interpretation. For example, "The Soviet Union launched Sputnik 1 on October 4, 1957" is written as a statement, while "The Space Race was a breathtaking testament" is clearly written as an opinion.

import requests

API_KEY = 'YOUR_API_KEY_HERE'  # placeholder: your Jina API key
text = """<the Space Race paragraph above>"""  # placeholder: paste the paragraph here

headers = {
    'Content-Type': 'application/json',
    'Authorization': f'Bearer {API_KEY}'
}

# Step 1: Split the text into sentence-like chunks and classify them
chunks = [chunk.strip() for chunk in text.split('.') if chunk.strip()]
labels = [
    "subjective, opinion, feeling, personal experience, creative writing, position",
    "fact"
]

# Classify all chunks in a single request
classify_response = requests.post(
    'https://api.jina.ai/v1/classify',
    headers=headers,
    json={
        "model": "jina-embeddings-v3",
        "input": [{"text": chunk} for chunk in chunks],
        "labels": labels
    }
)

# Sort chunks by predicted label (results come back in input order)
subjective_chunks = []
factual_chunks = []
for chunk, classification in zip(chunks, classify_response.json()['data']):
    if classification['prediction'] == labels[0]:
        subjective_chunks.append(chunk)
    else:
        factual_chunks.append(chunk)

print("\nSubjective statements:", subjective_chunks)
print("\nFactual statements:", factual_chunks)

And you will get:

Subjective statements: ['The Space Race of the 1960s was a breathtaking testament to human ingenuity', 'The silvery beeping of that simple satellite struck fear into the hearts of millions, as if the very stars had betrayed Western dominance', 'While some cynics claimed this was a waste of resources, the technological breakthroughs were absolutely worth every penny spent', "The Soviet space program, despite its early victories, ultimately couldn't match the superior American engineering and determination"]

Factual statements: ['When the Soviet Union launched Sputnik 1 on October 4, 1957, it sent shockwaves through American society, marking the undeniable start of a new era', "NASA was founded in 1958 as America's response, and they poured an astounding $28 billion into the Apollo program between 1960 and 1973", "On July 20, 1969, Neil Armstrong and Buzz Aldrin achieved the most magnificent triumph in human history by walking on the moon, their footprints marking humanity's destiny among the stars", 'The moon landing was not just a victory for America - it represented the most inspiring moment in human civilization, proving that our species was meant to reach beyond our earthly cradle']

Remember, just because something is written as a statement doesn't mean it's true. That's why we need the second step - feeding these statement-like claims into a grounding API for actual fact verification. For example, let's verify this statement: "NASA was founded in 1958 as America's response, and they poured an astounding $28 billion into the Apollo program between 1960 and 1973" with the code below.

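from urllib.parse import quote

# Step 2: Verify one statement-like chunk with the grounding API
ground_headers = {
    'Accept': 'application/json',
    'Authorization': f'Bearer {API_KEY}'
}

# The statement is URL-encoded and appended to the grounding endpoint
ground_response = requests.get(
    f'https://g.jina.ai/{quote(factual_chunks[1])}',
    headers=ground_headers
)

print(ground_response.json())

which gives you: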

{'code': 200, 'status': 20000, 'data': {'factuality': 1, 'result': True, 'reason': "The statement is supported by multiple references confirming NASA's founding in 1958 and the significant financial investment in the Apollo program. The $28 billion figure aligns with the data provided in the references, which detail NASA's expenditures during the Apollo program from 1960 to 1973. Additionally, the context of NASA's budget peaking during this period further substantiates the claim. Therefore, the statement is factually correct based on the available evidence.", 'references': [{'url': 'https://en.wikipedia.org/wiki/Budget_of_NASA', 'keyQuote': "NASA's budget peaked in 1964–66 when it consumed roughly 4% of all federal spending. The agency was building up to the first Moon landing and the Apollo program was a top national priority, consuming more than half of NASA's budget.", 'isSupportive': True}, {'url': 'https://en.wikipedia.org/wiki/NASA', 'keyQuote': 'Established in 1958, it succeeded the National Advisory Committee for Aeronautics (NACA)', 'isSupportive': True}, {'url': 'https://nssdc.gsfc.nasa.gov/planetary/lunar/apollo.html', 'keyQuote': 'More details on Apollo lunar landings', 'isSupportive': True}, {'url': 'https://usafacts.org/articles/50-years-after-apollo-11-moon-landing-heres-look-nasas-budget-throughout-its-history/', 'keyQuote': 'NASA has spent its money so far.', 'isSupportive': True}, {'url': 'https://www.nasa.gov/history/', 'keyQuote': 'Discover the history of our human spaceflight, science, technology, and aeronautics programs.', 'isSupportive': True}, {'url': 'https://www.nasa.gov/the-apollo-program/', 'keyQuote': 'Commander for Apollo 11, first to step on the lunar surface.', 'isSupportive': True}, {'url': 'https://www.planetary.org/space-policy/cost-of-apollo', 'keyQuote': 'A rich data set tracking the costs of Project Apollo, free for public use. Includes unprecedented program-by-program cost breakdowns.', 'isSupportive': True}, {'url': 'https://www.statista.com/statistics/1342862/nasa-budget-project-apollo-costs/', 'keyQuote': "NASA's monetary obligations compared to Project Apollo's total costs from 1960 to 1973 (in million U.S. dollars)", 'isSupportive': True}], 'usage': {'tokens': 10640}}}

With a factuality score of 1, the grounding API confirms this statement is well-grounded in historical fact. This approach opens up fascinating possibilities, from analyzing historical documents to fact-checking news articles in real time. By combining zero-shot classification with fact verification, we create a powerful pipeline for automated information analysis - first filtering out opinions, then verifying the remaining statements against trusted sources.
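The two-step flow described above can be wrapped into one function. The following is a rough sketch rather than a reference implementation: `filter_then_ground`, `fake_classify`, and `fake_ground` are hypothetical names, and the two stand-in callables replace the real Classifier and grounding API calls so the example runs offline.

```python
def filter_then_ground(chunks, classify, ground, fact_label="fact"):
    """Classify each chunk, then ground only those predicted as fact_label.

    classify: callable taking a list of strings, returning a list of predicted labels.
    ground:   callable taking one string, returning a verification result.
    Both are placeholders for real API calls.
    """
    predictions = classify(chunks)
    statements = [c for c, p in zip(chunks, predictions) if p == fact_label]
    # Only statement-like chunks reach the (expensive) grounding step.
    return {s: ground(s) for s in statements}

# Stand-in callables so the sketch runs offline; real code would call the APIs.
def fake_classify(chunks):
    return ["fact" if any(ch.isdigit() for ch in c) else "opinion" for c in chunks]

def fake_ground(statement):
    return {"checked": True}

demo = filter_then_ground(
    ["NASA was founded in 1958", "It was worth every penny"],
    fake_classify, fake_ground,
)
print(list(demo))
```

Passing the API calls in as functions keeps the cost-saving logic (ground only what the classifier lets through) separate from networking details and easy to test.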

Remarks on Zero-Shot Classification

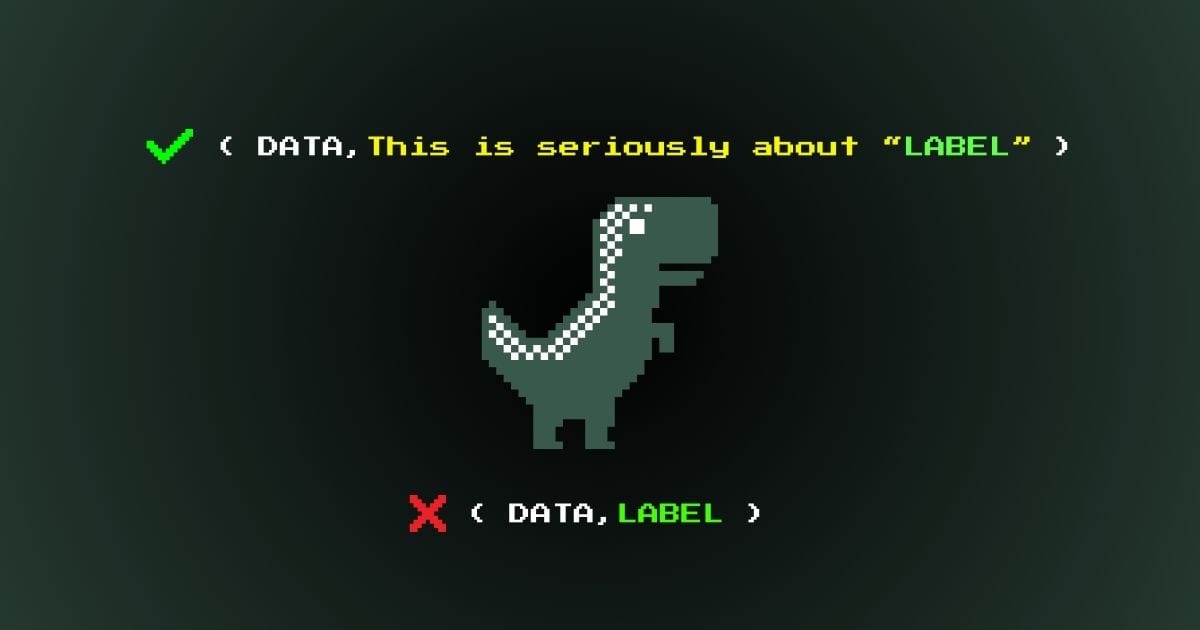

Using Semantic Labels

When working with zero-shot classification, it's crucial to use semantically meaningful labels rather than abstract symbols or numbers. For example, "Technology", "Nature", and "Food" are far more effective than "Class1", "Class2", "Class3" or "0", "1", "2". Likewise, "Positive sentiment" is more effective than "Positive" or "True". Embedding models understand semantic relationships, so descriptive labels enable the model to leverage its pre-trained knowledge for more accurate classifications. Our previous post explores how to create effective semantic labels for better classification results.
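If your system stores classes as opaque codes, one simple option is to translate them into descriptive labels before calling the API and back again afterwards. The mapping below is a made-up example, assuming a three-way sentiment task; none of these names come from the API.

```python
# Hypothetical internal codes mapped to descriptive labels for the API.
CODE_TO_LABEL = {
    "0": "Negative sentiment",
    "1": "Neutral sentiment",
    "2": "Positive sentiment",
}
LABEL_TO_CODE = {label: code for code, label in CODE_TO_LABEL.items()}

def api_labels():
    """Labels to send in the request's "labels" field."""
    return list(CODE_TO_LABEL.values())

def to_internal_code(prediction):
    """Translate a predicted label back into the internal code."""
    return LABEL_TO_CODE[prediction]

print(api_labels())
print(to_internal_code("Positive sentiment"))
```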

Stateless Nature

Zero-shot classification is fundamentally stateless, unlike traditional machine learning approaches. This means that given the same input and model, results will always be consistent, regardless of who uses the API or when. The model doesn't learn or update based on classifications it performs; each task is independent. This allows for immediate use without setup or training, and offers flexibility to change categories between API calls.

This stateless nature contrasts sharply with few-shot and online learning approaches, which we'll explore next. In those methods, models can adapt to new examples, potentially yielding different results over time or between users.
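One practical consequence of statelessness is that zero-shot results can be cached on the client side. The sketch below assumes results depend only on the model, labels, and input text, as described above; `classify_one` is a hypothetical stand-in for a real API call, and the cache would not be safe for few-shot classifiers, whose state changes with training.

```python
def make_cached_classifier(classify_one):
    """Wrap a zero-shot call with an in-memory cache.

    classify_one(model, labels, text) stands in for a real API call.
    """
    cache = {}

    def cached(model, labels, text):
        key = (model, tuple(labels), text)   # everything the result depends on
        if key not in cache:
            cache[key] = classify_one(model, labels, text)
        return cache[key]

    return cached

calls = []

def fake_classify_one(model, labels, text):
    calls.append(text)          # record each "remote" call
    return labels[0]

classify = make_cached_classifier(fake_classify_one)
classify("jina-embeddings-v3", ["Blocked", "Accessible"], "hello")
classify("jina-embeddings-v3", ["Blocked", "Accessible"], "hello")
print(len(calls))  # the second call is served from the cache
```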

Few-Shot Classification

Few-shot classification offers an easy approach to creating and updating classifiers with minimal labeled data. This method provides two primary endpoints: train and classify.

The train endpoint lets you create or update a classifier with a small set of examples. Your first call to train will return a classifier_id, which you can use for subsequent training whenever you have new data, notice changes in data distribution, or need to add new classes. This flexible approach allows your classifier to evolve over time, adapting to new patterns and categories without starting from scratch.

Similar to zero-shot classification, you'll use the classify endpoint for making predictions. The main difference is that you'll need to include your classifier_id in the request, but you won't need to provide candidate labels since they're already part of your trained model.
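The difference between the two request shapes can be captured in a small helper. This is a sketch based only on the fields described in this post (`model`, `labels`, `classifier_id`, `input`); the helper name is ours, and rejecting `labels` alongside a `classifier_id` is our own defensive choice, not documented API behavior.

```python
def build_classify_payload(inputs, model=None, labels=None, classifier_id=None):
    """Build a request body for either zero-shot or few-shot classification."""
    if classifier_id is not None:
        # Few-shot: labels are already part of the trained classifier.
        if labels is not None:
            raise ValueError("labels are not needed with a classifier_id")
        return {"classifier_id": classifier_id, "input": inputs}
    # Zero-shot: the model and candidate labels must be given explicitly.
    if model is None or not labels:
        raise ValueError("zero-shot needs both model and labels")
    return {"model": model, "labels": list(labels), "input": inputs}

zero_shot = build_classify_payload(
    ["How do I reset my password?"],
    model="jina-embeddings-v3",
    labels=["Account access", "Billing"],
)
few_shot = build_classify_payload(
    [{"text": "How do I reset my password?"}],
    classifier_id="YOUR_CLASSIFIER_ID",
)
print(sorted(zero_shot), sorted(few_shot))
```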

Example: Train a Support Ticket Assigner

Let's explore these features through an example of classifying customer support tickets for assignment to different teams in a rapidly growing tech startup.

Initial Training

curl -X 'POST' \

'https://api.jina.ai/v1/train' \

-H 'accept: application/json' \

-H 'Authorization: Bearer YOUR_API_KEY_HERE' \

-H 'Content-Type: application/json' \

-d '{

"model": "jina-embeddings-v3",

"access": "private",

"input": [

{

"text": "I cant log into my account after the latest app update.",

"label": "team1"

},

{

"text": "My subscription renewal failed due to an expired credit card.",

"label": "team2"

},

{

"text": "How do I export my data from the platform?",

"label": "team3"

}

],

"num_iters": 10

}'

Note that in few-shot learning, we are free to use team1, team2, and so on as the class labels even though they don't have inherent semantic meaning. In the response, you will get a classifier_id that represents this newly created classifier.

{

"classifier_id": "b36b7b23-a56c-4b52-a7ad-e89e8f5439b6",

"num_samples": 3

}

Note down the classifier_id; you will need it to refer to this classifier later.

Updating the Classifier to Adapt to Team Restructuring

As the example company grows, new types of issues emerge, and the team structure also changes. The beauty of few-shot classification lies in its ability to quickly adapt to these changes. We can easily update the classifier by providing the classifier_id and new examples, introducing new team categories (e.g. team4) or reassigning existing issue types to different teams as the organization evolves.

curl -X 'POST' \

'https://api.jina.ai/v1/train' \

-H 'accept: application/json' \

-H 'Authorization: Bearer YOUR_API_KEY_HERE' \

-H 'Content-Type: application/json' \

-d '{

"classifier_id": "b36b7b23-a56c-4b52-a7ad-e89e8f5439b6",

"input": [

{

"text": "Im getting a 404 error when trying to access the new AI chatbot feature.",

"label": "team4"

},

{

"text": "The latest security patch is conflicting with my company firewall.",

"label": "team1"

},

{

"text": "I need help setting up SSO for my organization account.",

"label": "team5"

}

],

"num_iters": 10

}'

Using a Trained Classifier

During inference, you only need to provide the input text and the classifier_id. The API handles the mapping between your input and the previously trained classes, returning the most appropriate label based on the classifier's current state.

curl -X 'POST' \

'https://api.jina.ai/v1/classify' \

-H 'accept: application/json' \

-H 'Authorization: Bearer YOUR_API_KEY_HERE' \

-H 'Content-Type: application/json' \

-d '{

"classifier_id": "b36b7b23-a56c-4b52-a7ad-e89e8f5439b6",

"input": [

{

"text": "The new feature is causing my dashboard to load slowly."

},

{

"text": "I need to update my billing information for tax purposes."

}

]

}'

The few-shot mode has two unique parameters: num_iters and access.

Parameter num_iters

The num_iters parameter tunes how intensively the classifier learns from your training examples. While the default value of 10 works well for most cases, you can strategically adjust this value based on your confidence in the training data. For high-quality examples that are crucial for classification, increase num_iters to reinforce their importance. Conversely, for less reliable examples, lower num_iters to minimize their impact on the classifier's performance. This parameter can also be used to implement time-aware learning, where more recent examples get higher iteration counts to adapt to evolving patterns while maintaining historical knowledge.
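As one way to implement the time-aware idea, the sketch below picks a `num_iters` value from an example's age using exponential decay. The schedule, the function name, and every default except the base value of 10 are invented for illustration; the post does not specify how the value should be chosen or what range the API accepts.

```python
def iters_for_age(age_days, base=10, recent_boost=2.0, half_life_days=30.0, floor=1):
    """Scale num_iters down as an example gets older.

    The boost is halved every half_life_days days; all defaults other than
    base are made-up values for illustration.
    """
    weight = recent_boost * 0.5 ** (age_days / half_life_days)
    return max(floor, round(base * weight))

for age in (0, 30, 90):
    print(age, iters_for_age(age))
```

You would then group examples by the resulting value and send each group in its own train request with the matching `num_iters`.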

Parameter access

The access parameter lets you control who can use your classifier. By default, classifiers are private and only accessible to you. Setting access to "public" allows anyone with your classifier_id to use it with their own API key and token quota. This enables sharing classifiers while maintaining privacy - users can't see your training data or configuration, and you can't see their classification requests. This parameter is only relevant for few-shot classification, as zero-shot classifiers are stateless. There's no need to share zero-shot classifiers since identical requests will always yield the same responses regardless of who makes them.

Remarks on Few-Shot Learning

Few-shot classification in our API has some unique characteristics worth noting. Unlike traditional machine learning models, our implementation uses one-pass online learning - training examples are processed to update the classifier's weights but aren't stored afterward. This means you can't retrieve historical training data, but it ensures better privacy and resource efficiency.
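To give a feel for one-pass online learning, here is a textbook multiclass passive-aggressive (PA-I) update in plain Python. It is a sketch of the general technique only: the API's actual variant, hyperparameters, and internals are not described in this post, and the toy 2-D vectors stand in for real embeddings. Note how each example updates the weights and is then discarded.

```python
def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def pa_update(weights, x, label, C=1.0):
    """One PA-I step for a multiclass linear classifier.

    weights maps each class name to a weight vector and is updated in place.
    """
    weights.setdefault(label, [0.0] * len(x))   # new classes start at zero
    rivals = [c for c in weights if c != label]
    if not rivals:
        return 0.0                              # nothing to separate from yet
    rival = max(rivals, key=lambda c: dot(weights[c], x))
    # Hinge loss on the margin between the true class and its strongest rival.
    loss = max(0.0, 1.0 - (dot(weights[label], x) - dot(weights[rival], x)))
    sq_norm = dot(x, x)
    if loss == 0.0 or sq_norm == 0.0:
        return loss                             # passive: margin already satisfied
    tau = min(C, loss / (2.0 * sq_norm))        # aggressive: capped corrective step
    weights[label] = [w + tau * xi for w, xi in zip(weights[label], x)]
    weights[rival] = [w - tau * xi for w, xi in zip(weights[rival], x)]
    return loss

def predict(weights, x):
    return max(weights, key=lambda c: dot(weights[c], x))

weights = {}
for _ in range(10):                             # repeat examples, loosely like num_iters
    pa_update(weights, [1.0, 0.0], "team1")
    pa_update(weights, [0.0, 1.0], "team2")
print(predict(weights, [0.9, 0.1]), predict(weights, [0.2, 0.8]))
```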

While few-shot learning is powerful, it does require a warm-up period to outperform zero-shot classification. Our benchmarks show that 200-400 training examples typically provide enough data to see superior performance. However, you don't need to provide examples for all classes upfront - the classifier can scale to accommodate new classes over time. Just be aware that newly added classes may experience a brief cold-start period or class imbalance until sufficient examples are provided.

Benchmark

For our benchmark analysis, we evaluated zero-shot and few-shot approaches across diverse datasets, including text classification tasks like emotion detection (6 classes) and spam detection (2 classes), as well as image classification tasks like CIFAR10 (10 classes). The evaluation framework used standard train-test splits, with zero-shot requiring no training data and few-shot using portions of the training set. We tracked key metrics like train size and target class count, allowing for controlled comparisons. To ensure robustness, particularly for few-shot learning, each input went through multiple training iterations. We compared these modern approaches against traditional baselines like Linear SVM and RBF SVM to provide context for their performance.

F1 scores are plotted. For the full benchmark settings, please check out this Google spreadsheet.
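For readers less familiar with the metric, F1 is the harmonic mean of precision and recall. The sketch below computes a macro-averaged F1 for a multi-class task; we assume macro averaging purely for illustration, since the averaging scheme used in the benchmark is not stated here.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn          # F1 = 2TP / (2TP + FP + FN)
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)

y_true = ["spam", "ham", "spam", "ham"]
y_pred = ["spam", "ham", "ham", "ham"]
print(round(macro_f1(y_true, y_pred), 3))
```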

The F1 plots reveal interesting patterns across three tasks. Not surprisingly, zero-shot classification shows constant performance from the start, regardless of training data size. In contrast, few-shot learning demonstrates a rapid learning curve, initially starting lower but quickly surpassing zero-shot performance as training data increases. Both methods ultimately achieve comparable accuracy around the 400-sample mark, with few-shot maintaining a slight edge. This pattern holds true for both multi-class and image classification scenarios, suggesting that few-shot learning can be particularly advantageous when some training data is available, while zero-shot offers reliable performance even without any training examples. The table below summarizes the difference between zero-shot and few-shot classification from the API user's point of view.

| Feature | Zero-shot | Few-shot |
|---|---|---|
| Primary Use Case | Default solution for general classification | For data outside v3/clip-v1's domain or time-sensitive data |
| Training Data Required | No | Yes |
| Labels Required in /train | N/A | Yes |
| Labels Required in /classify | Yes | No |
| Classifier ID Required | No | Yes |
| Semantic Labels Required | Yes | No |
| State Management | Stateless | Stateful |
| Continuous Model Updates | No | Yes |
| Access Control | No | Yes |
| Maximum Classes | 256 | 16 |
| Maximum Classifiers | N/A | 16 |
| Maximum Inputs per Request | 1,024 | 1,024 |
| Maximum Token Length per Input | 8,192 tokens | 8,192 tokens |
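A client can check a request against these limits before sending it. The sketch below hard-codes the class and input limits from the table; treat the numbers as a snapshot that may change, and note that `check_limits` is a hypothetical helper. Token length is left out because checking it would require the model's tokenizer.

```python
# Limits copied from the comparison table; treat them as subject to change.
MAX_INPUTS = 1024
MAX_CLASSES = {"zero-shot": 256, "few-shot": 16}

def check_limits(mode, num_inputs, num_classes):
    """Return a list of human-readable limit violations (empty if none)."""
    problems = []
    if num_inputs > MAX_INPUTS:
        problems.append(f"{num_inputs} inputs exceeds {MAX_INPUTS}")
    if num_classes > MAX_CLASSES[mode]:
        problems.append(f"{num_classes} classes exceeds {MAX_CLASSES[mode]} for {mode}")
    return problems

print(check_limits("zero-shot", 10, 3))
print(check_limits("few-shot", 2000, 20))
```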

Summary

The Classifier API offers powerful zero-shot and few-shot classification for both text and image content, powered by advanced embedding models like jina-embeddings-v3 and jina-clip-v1. Our benchmarks show that zero-shot classification provides reliable performance without training data, making it an excellent starting point for most tasks with support for up to 256 classes. While few-shot learning can achieve slightly better accuracy with training data, we recommend beginning with zero-shot classification for its immediate results and flexibility.

The API's versatility supports various applications, from routing LLM queries to detecting website accessibility and categorizing multilingual content. Whether you're starting with zero-shot or transitioning to few-shot learning for specialized cases, the API maintains a consistent interface for seamless integration into your pipeline. We're particularly excited to see how developers will leverage this API in their applications, and we'll be rolling out support for new embedding models like jina-clip-v2 in the future.