Consider this: given an embedding model $M$, a document $D$, and a candidate label set $L$, you need to determine which label $l \in L$ should be assigned to $D$. Essentially, you are asked to use an embedding model for a classification task. Formally, this is represented as $l = f_M(D, L)$, where $f_M$ is the classifier. This is a classic classification problem that you might have learned about in your ML101 course.

However, in this post, we focus on the zero-shot setting, which comes with some constraints:

- You cannot alter the embedding model: it remains a black box that you cannot fine-tune.

- You cannot train the classifier, since there are no labeled data points available for training.

So, how would you approach this?

Before answering, let's look at whether this is a meaningful question. First, is this a real problem? Second, is it feasible?

- Real-World Relevance: Yes, this is a real scenario. Consider a classification API where users submit data and candidate labels, asking the server to classify the data. In this case, there is no training data, and the server does not fine-tune the embedding model for each user or problem setting. Thus, this is precisely the situation we're dealing with.

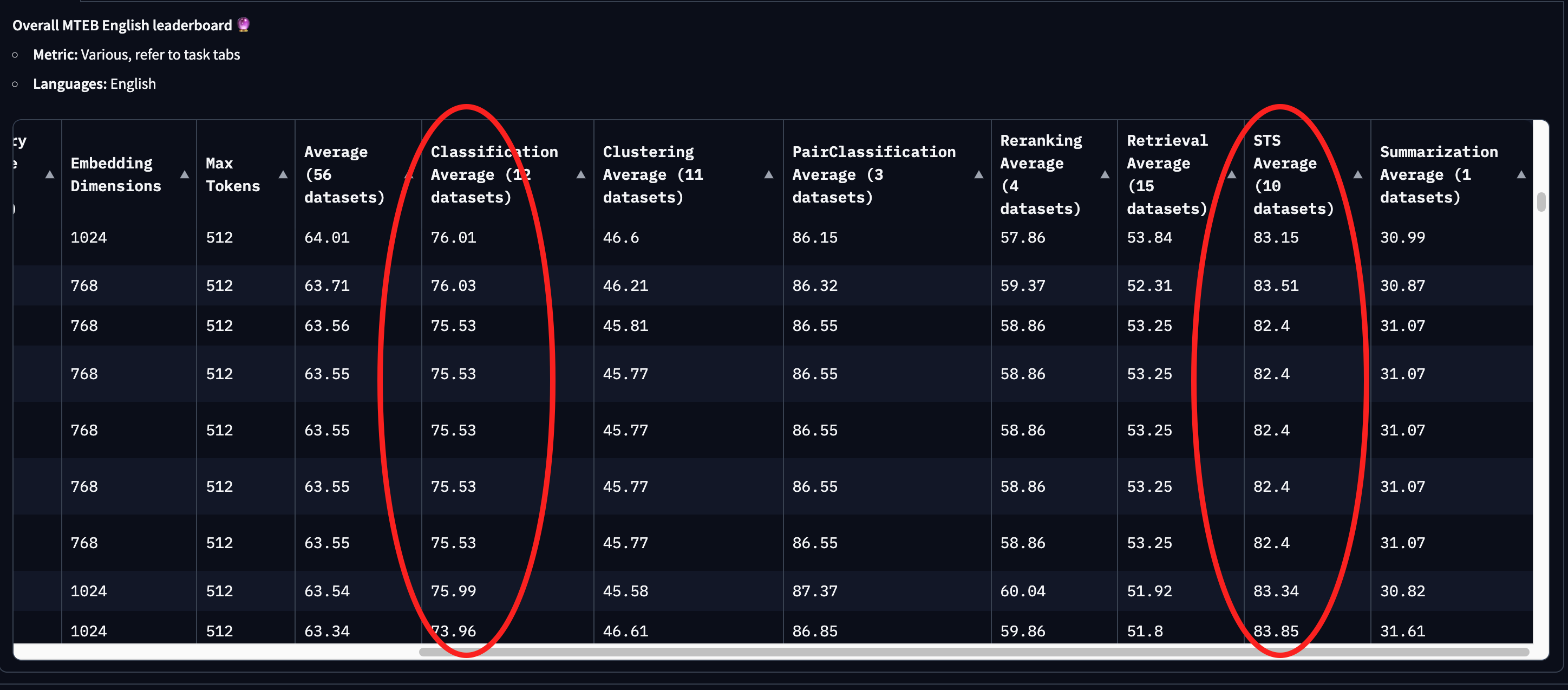

- Feasibility: It is indeed feasible. Embedding models, often encoder-only transformers, are trained on vast amounts of data for general purposes. They are evaluated on multiple tasks in the MTEB (Massive Text Embedding Benchmark), including clustering, sentence similarity, retrieval, and classification. Recently, using LLMs or decoder-only transformers as embedding models, such as NV-Embed, has become popular. These models can have up to 7 billion parameters! With so many parameters and such extensive training data, these embedding models should possess some zero-shot classification capabilities. It is not surprising that they can handle zero-shot, out-of-domain settings, though not perfectly.

## Zero-Shot Classification Baseline

So, how would you solve this problem? Since you cannot train any classifier with zero labeled data, the classifier cannot be parameterized and is essentially just a metric function, such as cosine similarity. Therefore, a naive way of performing classification is to embed both the data and all candidate labels using the embedding model, compare the cosine similarity between the data and label embeddings, and select the label with the maximum cosine similarity, i.e. $\hat{l} = \arg\max_{l \in L} \cos\big(M(D), M(l)\big)$, where $M(\cdot)$ denotes the embedding model, $D$ the document, and $L$ the candidate label set. Simple but effective. In fact, this approach is commonly used by researchers when evaluating MTEB classification tasks.
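The baseline described above can be sketched in a few lines of Python. This is only an illustration, not the implementation used in the experiments: `toy_embed` and its `VOCAB` are hypothetical stand-ins (simple word counts) so that the snippet runs without any model, and in practice you would replace `toy_embed` with a call to your embedding model.

```python
import numpy as np

# Tiny fixed vocabulary for the toy embedding below (purely illustrative).
VOCAB = ["sports", "business", "world", "team", "match", "stocks"]

def toy_embed(texts):
    """Hypothetical stand-in for a real embedding model: vocabulary word counts."""
    rows = []
    for text in texts:
        tokens = text.lower().split()
        rows.append([tokens.count(word) for word in VOCAB])
    return np.array(rows, dtype=float)

def cosine_similarity(a, b):
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.clip(np.linalg.norm(a, axis=1, keepdims=True), 1e-12, None)
    b = b / np.clip(np.linalg.norm(b, axis=1, keepdims=True), 1e-12, None)
    return a @ b.T

def zero_shot_classify(documents, labels, embed=toy_embed):
    """Assign each document the label with the highest cosine similarity."""
    scores = cosine_similarity(embed(documents), embed(labels))
    return [labels[i] for i in scores.argmax(axis=1)]

documents = [
    "The team won the match in a thrilling sports final",
    "Stocks fell as business confidence slipped",
]
labels = ["World", "Sports", "Business"]
predictions = zero_shot_classify(documents, labels)
print(predictions)  # ['Sports', 'Business']
```

Note that no parameters are learned anywhere: the only "classifier" is the argmax over cosine similarities, which is why the quality of the label embeddings matters so much.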

## Can We Do Better?

Is it possible to improve upon this method? The information we can leverage is limited. We have labels like [sports, politics, finance] or [positive, negative, neutral]. These labels have some inherent semantics (and ambiguities), especially when considered in context with the document, but it's still not a lot to work with. Some approaches rely on external knowledge, such as data domain assumptions or label descriptions, to enhance the label semantics, often through data generation using LLMs. While effective, these methods also introduce additional resource consumption and latency, which we will not consider here.

One key observation is that most general-purpose embeddings are better at sentence similarity tasks than at classification tasks. So, if we can somehow rephrase the label into a sentence and compare the data embedding to this rephrased label sentence, could we achieve better performance? If so, what's the best way to rephrase the label? How much improvement can we expect? And does this method generalize across different datasets and embedding models? In this post, I conduct some experiments to answer these questions.

## Experimental Setup

Instead of directly embedding the LABEL and computing the cosine similarity with the embedded document, I first rephrase the LABEL into a more descriptive sentence without looking at the data. For example, I use constructions like "This is something about $LABEL". Note that this is a pretty generic construction, as it assumes nothing about the data: it could be a code snippet, a tweet, a long document, a news article, a soundbite, a docstring, or anything else. Specifically, I tested the following rephrasing constructions:

```python
label_constructions = [
    # Baseline and simple templates
    lambda LABEL: f'{LABEL}',
    lambda LABEL: f'{LABEL} ' * 4,
    lambda LABEL: f'Label: "{LABEL}".',
    lambda LABEL: f'This is something about "{LABEL}".',
    lambda LABEL: f'This is seriously about "{LABEL}".',
    lambda LABEL: f'This article is about "{LABEL}".',
    lambda LABEL: f'This news is about "{LABEL}".',
    lambda LABEL: f'This is categorized as "{LABEL}".',
    # Descriptive Context Embedding
    lambda LABEL: f'This text discusses the topic of "{LABEL}" in detail.',
    lambda LABEL: f'A comprehensive overview of "{LABEL}".',
    lambda LABEL: f'Exploring various aspects of "{LABEL}".',
    # Question-Based Construction
    lambda LABEL: f'What are the key features of "{LABEL}"?',
    lambda LABEL: f'How does "{LABEL}" impact our daily lives?',
    lambda LABEL: f'Why is "{LABEL}" important in modern society?',
    # Comparative Context Construction
    lambda LABEL: f'"{LABEL}" compared to other related concepts.',
    lambda LABEL: f'The differences between "{LABEL}" and its alternatives.',
    lambda LABEL: f'Understanding "{LABEL}" and why it stands out.',
]
```

Different rephrasing constructions used in the experiment. Note that I make no strong assumptions about the type of data.
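To make the procedure concrete, here is a minimal sketch of how a construction might be plugged into the similarity-based classifier. The key detail is that the rephrased sentence is what gets embedded, while the prediction is reported as the original label. The helper names (`CONSTRUCTIONS`, `classify_with_construction`, `toy_embed`) are my own for illustration, and `toy_embed` is a hypothetical word-count stand-in for a real embedding model, so it says nothing about which construction actually works best.

```python
import numpy as np

# A few of the constructions from the list above, keyed by illustrative names.
CONSTRUCTIONS = {
    "baseline": lambda label: f"{label}",
    "generic": lambda label: f'This is something about "{label}".',
    "news": lambda label: f'This news is about "{label}".',
}

def toy_embed(texts):
    """Hypothetical stand-in for an embedding model: counts of a tiny vocabulary."""
    vocab = ["sports", "business", "news", "match", "stocks"]
    rows = []
    for text in texts:
        # Lowercase and strip surrounding quotes/punctuation from each token.
        tokens = [token.strip('".,?!') for token in text.lower().split()]
        rows.append([tokens.count(word) for word in vocab])
    return rows

def classify_with_construction(documents, labels, construct, embed):
    """Embed the rephrased labels, but return predictions as original labels."""
    label_sentences = [construct(label) for label in labels]
    doc_vecs = np.asarray(embed(documents), dtype=float)
    label_vecs = np.asarray(embed(label_sentences), dtype=float)
    # Normalize rows so that the dot product equals cosine similarity.
    doc_vecs = doc_vecs / np.clip(np.linalg.norm(doc_vecs, axis=1, keepdims=True), 1e-12, None)
    label_vecs = label_vecs / np.clip(np.linalg.norm(label_vecs, axis=1, keepdims=True), 1e-12, None)
    best = (doc_vecs @ label_vecs.T).argmax(axis=1)
    return [labels[i] for i in best]

documents = [
    "The match was great news for sports fans",
    "Stocks dipped on weak business news",
]
labels = ["Sports", "Business"]
results = {
    name: classify_with_construction(documents, labels, construct, toy_embed)
    for name, construct in CONSTRUCTIONS.items()
}
```

Because the construction is just a function from a label string to a sentence, swapping in any other template from the list only requires adding one more entry to the dictionary.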

The datasets I'm using for this experiment are TREC, AG News, GoEmotions, and Twitter Eval text classification data. I've also confirmed with our team that these datasets were not used for training our embedding models. Specifically, the labels we try to predict in these datasets are as follows:

- TREC: `['ABBR', 'ENTY', 'DESC', 'HUM', 'LOC', 'NUM']`

- AG News: `['World', 'Sports', 'Business', 'Sci/Tech']`

- GoEmotions: `['admiration','amusement','anger','annoyance','approval','caring','confusion','curiosity','desire','disappointment','disapproval','disgust','embarrassment','excitement','fear','gratitude','grief','joy','love','nervousness','optimism','pride','realization','relief','remorse','sadness','surprise','neutral']`

- Twitter Eval: `['negative', 'neutral', 'positive']`

Unlike in classic classification tasks, we should not encode the labels as integers (e.g., 0, 1, 2), because we would lose the semantic information inherent in the labels. Moreover, I do not expand the abbreviated labels into their full forms. For example, the full names of the TREC labels are [Abbreviation, Entity, Description, Human, Location, Numeric], but I keep the original abbreviations in the experiment because:

- It more closely mirrors real-world settings where abbreviated labels are commonly used.

- It allows us to see how well our embedding model can handle labels with extremely weak semantic information. (As I mentioned above, the other extreme is to leverage label descriptions, enhanced or generated by LLMs, which is out of scope.)

The embedding models I'm testing are:

- jina-embeddings-v2-base-en (released in October 2023)

- jina-clip-v1 (released in June 2024)

It might be surprising for some readers to see that I included jina-clip-v1, which is a multimodal embedding model for both image and text. However, our innovation with jina-clip-v1 focused precisely on improving its text retrieval and similarity ability compared to the original CLIP models, so this is a good chance to test it.

You can try out both models via our Embedding API.

The full implementation can be found in the accompanying notebook.

## Experimental Results

We measure Precision, Recall, and (weighted average) F1 score in our experiments. To evaluate the effectiveness of our method, we compute the relative improvement over the zero-shot classification baseline, where the LABEL is used as is. Note that jina-embeddings-v2-base-en and jina-clip-v1 have different baseline performance, so we only compare results within each model, not between the two models. The results are then sorted in descending order by their (weighted average) F1 score.

For all metrics, the larger the better. The delta percentage represents the relative improvement over the baseline, i.e. the first row.
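For readers who want to reproduce this kind of evaluation, the following is a minimal pure-Python sketch of a support-weighted F1 score and of the relative delta. The function names are my own, and the averaging convention (per-class F1 weighted by the number of true examples in each class) is an assumption about what "weighted average" means here; the notebook may well use a library routine instead.

```python
from collections import Counter

def weighted_f1(y_true, y_pred):
    """Per-class F1, averaged with weights proportional to class support."""
    support = Counter(y_true)
    total = 0.0
    for label, count in support.items():
        true_pos = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        predicted = sum(1 for p in y_pred if p == label)
        precision = true_pos / predicted if predicted else 0.0
        recall = true_pos / count
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        total += f1 * count
    return total / len(y_true)

def relative_delta(score, baseline):
    """Relative improvement over the baseline, in percent."""
    return 100.0 * (score - baseline) / baseline

# Made-up predictions, only to show the calls.
y_true = ["positive", "positive", "negative", "negative"]
y_pred = ["positive", "negative", "negative", "negative"]
score = weighted_f1(y_true, y_pred)      # about 0.733
delta = relative_delta(0.6, 0.5)         # about +20%
```

One caveat when reading the tables: the scores are shown rounded to two decimals, while the deltas were presumably computed from the unrounded values, so recomputing a delta from the displayed scores can be off by a point or two.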

### jina-embeddings-v2-base-en on AG News

LABEL = ['World', 'Sports', 'Business', 'Sci/Tech']

| | Rephrased Label | F1 Score | Precision | Recall |
|---|---|---|---|---|
| 0 | LABEL | 0.53 | 0.62 | 0.54 |
| 1 | This news is about "LABEL". | 0.64 (+19%) | 0.67 (+8%) | 0.63 (+17%) |
| 2 | This article is about "LABEL". | 0.63 (+18%) | 0.65 (+5%) | 0.63 (+16%) |
| 3 | This is seriously about "LABEL". | 0.62 (+17%) | 0.64 (+3%) | 0.62 (+15%) |
| 4 | This text discusses the topic of "LABEL" in detail. | 0.60 (+13%) | 0.64 (+3%) | 0.60 (+12%) |
| 5 | A comprehensive overview of "LABEL". | 0.60 (+12%) | 0.67 (+7%) | 0.59 (+10%) |
| 6 | How does "LABEL" impact our daily lives? | 0.59 (+11%) | 0.65 (+4%) | 0.59 (+9%) |
| 7 | "LABEL" compared to other related concepts. | 0.59 (+11%) | 0.65 (+4%) | 0.59 (+9%) |
| 8 | Exploring various aspects of "LABEL". | 0.58 (+9%) | 0.67 (+8%) | 0.58 (+8%) |
| 9 | Why is "LABEL" important in modern society? | 0.58 (+8%) | 0.58 (-7%) | 0.58 (+7%) |
| 10 | This is something about "LABEL". | 0.57 (+7%) | 0.64 (+3%) | 0.57 (+6%) |
| 11 | Understanding "LABEL" and why it stands out. | 0.57 (+7%) | 0.60 (-3%) | 0.57 (+6%) |
| 12 | Label: "LABEL". | 0.55 (+3%) | 0.61 (-2%) | 0.55 (+2%) |
| 13 | The differences between "LABEL" and its alternatives. | 0.54 (+1%) | 0.60 (-4%) | 0.53 (-1%) |
| 14 | This is categorized as "LABEL". | 0.54 (+1%) | 0.63 (+1%) | 0.55 (+1%) |
| 15 | What are the key features of "LABEL"? | 0.52 (-3%) | 0.60 (-3%) | 0.52 (-3%) |
| 16 | LABEL LABEL LABEL LABEL | 0.46 (-14%) | 0.61 (-2%) | 0.46 (-14%) |

### jina-clip-v1 on AG News

LABEL = ['World', 'Sports', 'Business', 'Sci/Tech']

| | Rephrased Label | F1 Score | Precision | Recall |
|---|---|---|---|---|
| 0 | LABEL | 0.57 | 0.62 | 0.56 |
| 1 | How does "LABEL" impact our daily lives? | 0.63 (+11%) | 0.66 (+6%) | 0.62 (+11%) |
| 2 | This news is about "LABEL". | 0.61 (+8%) | 0.65 (+5%) | 0.61 (+9%) |
| 3 | This is seriously about "LABEL". | 0.60 (+7%) | 0.63 (+2%) | 0.60 (+7%) |
| 4 | This is something about "LABEL". | 0.59 (+5%) | 0.63 (+1%) | 0.59 (+6%) |
| 5 | Exploring various aspects of "LABEL". | 0.58 (+3%) | 0.60 (-3%) | 0.58 (+4%) |
| 6 | This article is about "LABEL". | 0.58 (+2%) | 0.61 (-2%) | 0.57 (+2%) |
| 7 | Understanding "LABEL" and why it stands out. | 0.57 (+2%) | 0.60 (-4%) | 0.57 (+2%) |
| 8 | Label: "LABEL". | 0.57 (+1%) | 0.60 (-3%) | 0.57 (+1%) |
| 9 | Why is "LABEL" important in modern society? | 0.56 (-1%) | 0.59 (-5%) | 0.56 (-1%) |
| 10 | A comprehensive overview of "LABEL". | 0.55 (-2%) | 0.58 (-7%) | 0.55 (-2%) |
| 11 | This is categorized as "LABEL". | 0.55 (-2%) | 0.59 (-5%) | 0.55 (-2%) |
| 12 | "LABEL" compared to other related concepts. | 0.55 (-3%) | 0.58 (-6%) | 0.55 (-2%) |
| 13 | What are the key features of "LABEL"? | 0.55 (-3%) | 0.57 (-8%) | 0.55 (-2%) |
| 14 | LABEL LABEL LABEL LABEL | 0.55 (-3%) | 0.60 (-3%) | 0.54 (-3%) |
| 15 | This text discusses the topic of "LABEL" in detail. | 0.54 (-4%) | 0.58 (-7%) | 0.54 (-4%) |
| 16 | The differences between "LABEL" and its alternatives. | 0.54 (-5%) | 0.56 (-10%) | 0.53 (-5%) |

### jina-embeddings-v2-base-en on TREC

LABEL = ['ABBR', 'ENTY', 'DESC', 'HUM', 'LOC', 'NUM']

| | Rephrased Label | F1 Score | Precision | Recall |
|---|---|---|---|---|
| 0 | LABEL | 0.25 | 0.26 | 0.28 |
| 1 | This is categorized as "LABEL". | 0.23 (-10%) | 0.25 (-3%) | 0.22 (-21%) |
| 2 | LABEL LABEL LABEL LABEL | 0.22 (-12%) | 0.26 (-1%) | 0.24 (-11%) |
| 3 | This is seriously about "LABEL". | 0.22 (-12%) | 0.25 (-3%) | 0.22 (-21%) |
| 4 | The differences between "LABEL" and its alternatives. | 0.21 (-15%) | 0.23 (-12%) | 0.21 (-25%) |
| 5 | Label: "LABEL". | 0.21 (-16%) | 0.23 (-10%) | 0.21 (-24%) |
| 6 | This is something about "LABEL". | 0.21 (-16%) | 0.25 (-5%) | 0.22 (-21%) |
| 7 | A comprehensive overview of "LABEL". | 0.21 (-16%) | 0.22 (-14%) | 0.21 (-25%) |
| 8 | This article is about "LABEL". | 0.21 (-18%) | 0.23 (-10%) | 0.20 (-27%) |
| 9 | Exploring various aspects of "LABEL". | 0.20 (-21%) | 0.21 (-19%) | 0.20 (-29%) |
| 10 | Understanding "LABEL" and why it stands out. | 0.20 (-22%) | 0.23 (-12%) | 0.19 (-30%) |
| 11 | This news is about "LABEL". | 0.20 (-22%) | 0.22 (-14%) | 0.19 (-29%) |
| 12 | "LABEL" compared to other related concepts. | 0.19 (-24%) | 0.21 (-18%) | 0.18 (-33%) |
| 13 | What are the key features of "LABEL"? | 0.19 (-26%) | 0.21 (-20%) | 0.19 (-31%) |
| 14 | This text discusses the topic of "LABEL" in detail. | 0.18 (-27%) | 0.22 (-17%) | 0.17 (-37%) |
| 15 | Why is "LABEL" important in modern society? | 0.18 (-30%) | 0.21 (-20%) | 0.17 (-37%) |
| 16 | How does "LABEL" impact our daily lives? | 0.17 (-31%) | 0.21 (-20%) | 0.17 (-40%) |

### jina-clip-v1 on TREC

LABEL = ['ABBR', 'ENTY', 'DESC', 'HUM', 'LOC', 'NUM']

| | Rephrased Label | F1 Score | Precision | Recall |
|---|---|---|---|---|
| 0 | LABEL | 0.20 | 0.24 | 0.19 |
| 1 | Why is "LABEL" important in modern society? | 0.20 (-2%) | 0.22 (-10%) | 0.18 (-5%) |
| 2 | LABEL LABEL LABEL LABEL | 0.20 (-2%) | 0.23 (-7%) | 0.19 (-2%) |
| 3 | What are the key features of "LABEL"? | 0.19 (-4%) | 0.21 (-12%) | 0.18 (-5%) |
| 4 | The differences between "LABEL" and its alternatives. | 0.19 (-5%) | 0.21 (-12%) | 0.18 (-7%) |
| 5 | How does "LABEL" impact our daily lives? | 0.19 (-6%) | 0.21 (-13%) | 0.18 (-9%) |
| 6 | This text discusses the topic of "LABEL" in detail. | 0.19 (-6%) | 0.21 (-13%) | 0.18 (-9%) |
| 7 | Understanding "LABEL" and why it stands out. | 0.18 (-9%) | 0.20 (-16%) | 0.17 (-11%) |
| 8 | "LABEL" compared to other related concepts. | 0.18 (-11%) | 0.20 (-17%) | 0.17 (-14%) |
| 9 | This is seriously about "LABEL". | 0.18 (-11%) | 0.20 (-15%) | 0.17 (-15%) |
| 10 | This is categorized as "LABEL". | 0.18 (-11%) | 0.20 (-17%) | 0.17 (-14%) |
| 11 | Exploring various aspects of "LABEL". | 0.18 (-12%) | 0.20 (-18%) | 0.17 (-14%) |
| 12 | Label: "LABEL". | 0.18 (-13%) | 0.20 (-17%) | 0.17 (-14%) |
| 13 | This article is about "LABEL". | 0.18 (-13%) | 0.20 (-18%) | 0.16 (-17%) |
| 14 | This is something about "LABEL". | 0.18 (-13%) | 0.20 (-18%) | 0.16 (-16%) |
| 15 | This news is about "LABEL". | 0.17 (-14%) | 0.19 (-19%) | 0.16 (-17%) |
| 16 | A comprehensive overview of "LABEL". | 0.17 (-14%) | 0.19 (-21%) | 0.16 (-18%) |

### jina-embeddings-v2-base-en on GoEmotions

LABEL = ['admiration','amusement','anger','annoyance','approval','caring','confusion','curiosity','desire','disappointment','disapproval','disgust','embarrassment','excitement','fear','gratitude','grief','joy','love','nervousness','optimism','pride','realization','relief','remorse','sadness','surprise','neutral']

| | Rephrased Label | F1 Score | Precision | Recall |
|---|---|---|---|---|
| 0 | LABEL | 0.13 | 0.30 | 0.16 |
| 1 | This text discusses the topic of "LABEL" in detail. | 0.18 (+35%) | 0.27 (-10%) | 0.17 (+9%) |
| 2 | This is something about "LABEL". | 0.17 (+27%) | 0.27 (-9%) | 0.17 (+5%) |
| 3 | This is seriously about "LABEL". | 0.17 (+25%) | 0.25 (-16%) | 0.17 (+9%) |
| 4 | Label: "LABEL". | 0.16 (+20%) | 0.27 (-10%) | 0.17 (+6%) |
| 5 | Understanding "LABEL" and why it stands out. | 0.16 (+19%) | 0.27 (-9%) | 0.15 (-4%) |
| 6 | Exploring various aspects of "LABEL". | 0.16 (+18%) | 0.27 (-9%) | 0.15 (-4%) |
| 7 | "LABEL" compared to other related concepts. | 0.15 (+15%) | 0.26 (-13%) | 0.15 (-5%) |
| 8 | This is categorized as "LABEL". | 0.15 (+14%) | 0.27 (-9%) | 0.16 (+3%) |
| 9 | A comprehensive overview of "LABEL". | 0.15 (+14%) | 0.29 (-1%) | 0.15 (-4%) |
| 10 | Why is "LABEL" important in modern society? | 0.15 (+12%) | 0.24 (-19%) | 0.15 (-6%) |
| 11 | This news is about "LABEL". | 0.15 (+10%) | 0.30 (+0%) | 0.16 (-1%) |
| 12 | This article is about "LABEL". | 0.15 (+10%) | 0.23 (-22%) | 0.15 (-4%) |
| 13 | The differences between "LABEL" and its alternatives. | 0.14 (+8%) | 0.24 (-19%) | 0.14 (-10%) |
| 14 | LABEL LABEL LABEL LABEL | 0.14 (+7%) | 0.29 (-1%) | 0.15 (-8%) |
| 15 | How does "LABEL" impact our daily lives? | 0.13 (-3%) | 0.25 (-16%) | 0.13 (-18%) |
| 16 | What are the key features of "LABEL"? | 0.12 (-11%) | 0.27 (-9%) | 0.13 (-21%) |

### jina-clip-v1 on GoEmotions

LABEL = ['admiration','amusement','anger','annoyance','approval','caring','confusion','curiosity','desire','disappointment','disapproval','disgust','embarrassment','excitement','fear','gratitude','grief','joy','love','nervousness','optimism','pride','realization','relief','remorse','sadness','surprise','neutral']

| | Rephrased Label | F1 Score | Precision | Recall |
|---|---|---|---|---|
| 0 | LABEL | 0.12 | 0.22 | 0.14 |
| 1 | This is something about "LABEL". | 0.13 (+15%) | 0.25 (+15%) | 0.15 (+9%) |
| 2 | This is categorized as "LABEL". | 0.13 (+12%) | 0.25 (+16%) | 0.15 (+11%) |
| 3 | This is seriously about "LABEL". | 0.13 (+11%) | 0.26 (+17%) | 0.14 (+4%) |
| 4 | This news is about "LABEL". | 0.13 (+11%) | 0.25 (+16%) | 0.14 (+5%) |
| 5 | Why is "LABEL" important in modern society? | 0.13 (+8%) | 0.22 (+1%) | 0.14 (+4%) |
| 6 | This article is about "LABEL". | 0.12 (+5%) | 0.26 (+19%) | 0.14 (+1%) |
| 7 | How does "LABEL" impact our daily lives? | 0.12 (+2%) | 0.22 (-0%) | 0.13 (-5%) |
| 8 | Understanding "LABEL" and why it stands out. | 0.12 (+2%) | 0.23 (+7%) | 0.13 (-2%) |
| 9 | This text discusses the topic of "LABEL" in detail. | 0.12 (+1%) | 0.22 (+1%) | 0.14 (-1%) |
| 10 | A comprehensive overview of "LABEL". | 0.12 (+0%) | 0.23 (+7%) | 0.13 (-3%) |
| 11 | Exploring various aspects of "LABEL". | 0.12 (-0%) | 0.25 (+17%) | 0.13 (-3%) |
| 12 | "LABEL" compared to other related concepts. | 0.11 (-2%) | 0.24 (+8%) | 0.14 (-1%) |
| 13 | Label: "LABEL". | 0.11 (-2%) | 0.24 (+8%) | 0.13 (-2%) |
| 14 | The differences between "LABEL" and its alternatives. | 0.11 (-5%) | 0.21 (-4%) | 0.13 (-7%) |
| 15 | What are the key features of "LABEL"? | 0.11 (-8%) | 0.20 (-10%) | 0.12 (-10%) |
| 16 | LABEL LABEL LABEL LABEL | 0.09 (-20%) | 0.23 (+4%) | 0.12 (-12%) |

### jina-embeddings-v2-base-en on Twitter Eval

LABEL = ['negative', 'neutral', 'positive']

| | Rephrased Label | F1 Score | Precision | Recall |
|---|---|---|---|---|
| 0 | LABEL | 0.37 | 0.49 | 0.45 |
| 1 | LABEL LABEL LABEL LABEL | 0.52 (+40%) | 0.53 (+7%) | 0.52 (+15%) |
| 2 | "LABEL" compared to other related concepts. | 0.50 (+34%) | 0.51 (+4%) | 0.50 (+11%) |
| 3 | This is categorized as "LABEL". | 0.49 (+32%) | 0.52 (+6%) | 0.49 (+9%) |
| 4 | This text discusses the topic of "LABEL" in detail. | 0.49 (+31%) | 0.52 (+6%) | 0.47 (+6%) |
| 5 | This is seriously about "LABEL". | 0.49 (+30%) | 0.52 (+5%) | 0.48 (+8%) |
| 6 | Exploring various aspects of "LABEL". | 0.47 (+25%) | 0.51 (+3%) | 0.47 (+5%) |
| 7 | How does "LABEL" impact our daily lives? | 0.46 (+22%) | 0.52 (+4%) | 0.45 (+0%) |
| 8 | The differences between "LABEL" and its alternatives. | 0.45 (+22%) | 0.49 (-0%) | 0.46 (+3%) |
| 9 | Label: "LABEL". | 0.44 (+18%) | 0.50 (+1%) | 0.46 (+2%) |
| 10 | Understanding "LABEL" and why it stands out. | 0.44 (+17%) | 0.51 (+4%) | 0.43 (-3%) |
| 11 | This article is about "LABEL". | 0.43 (+14%) | 0.52 (+5%) | 0.42 (-6%) |
| 12 | This is something about "LABEL". | 0.42 (+12%) | 0.54 (+9%) | 0.45 (-0%) |
| 13 | This news is about "LABEL". | 0.41 (+11%) | 0.50 (+1%) | 0.41 (-10%) |
| 14 | Why is "LABEL" important in modern society? | 0.40 (+6%) | 0.51 (+4%) | 0.38 (-16%) |
| 15 | A comprehensive overview of "LABEL". | 0.37 (-1%) | 0.50 (+2%) | 0.38 (-16%) |
| 16 | What are the key features of "LABEL"? | 0.36 (-3%) | 0.49 (-0%) | 0.42 (-6%) |

### jina-clip-v1 on Twitter Eval

LABEL = ['negative', 'neutral', 'positive']

| | Rephrased Label | F1 Score | Precision | Recall |
|---|---|---|---|---|
| 0 | LABEL | 0.48 | 0.55 | 0.51 |
| 1 | LABEL LABEL LABEL LABEL | 0.52 (+8%) | 0.55 (-1%) | 0.52 (+2%) |
| 2 | This is seriously about "LABEL". | 0.50 (+5%) | 0.54 (-1%) | 0.52 (+3%) |
| 3 | Understanding "LABEL" and why it stands out. | 0.50 (+5%) | 0.52 (-5%) | 0.51 (-0%) |
| 4 | Exploring various aspects of "LABEL". | 0.50 (+4%) | 0.52 (-5%) | 0.51 (-1%) |
| 5 | A comprehensive overview of "LABEL". | 0.49 (+3%) | 0.52 (-5%) | 0.51 (-1%) |
| 6 | How does "LABEL" impact our daily lives? | 0.49 (+3%) | 0.51 (-8%) | 0.50 (-2%) |
| 7 | "LABEL" compared to other related concepts. | 0.49 (+3%) | 0.53 (-4%) | 0.50 (-1%) |
| 8 | Label: "LABEL". | 0.49 (+3%) | 0.53 (-3%) | 0.51 (-1%) |
| 9 | This is categorized as "LABEL". | 0.49 (+2%) | 0.54 (-2%) | 0.51 (+0%) |
| 10 | This is something about "LABEL". | 0.49 (+2%) | 0.53 (-4%) | 0.51 (-0%) |
| 11 | This article is about "LABEL". | 0.48 (+1%) | 0.52 (-4%) | 0.51 (-1%) |
| 12 | This news is about "LABEL". | 0.48 (+1%) | 0.53 (-4%) | 0.51 (-0%) |
| 13 | Why is "LABEL" important in modern society? | 0.48 (+1%) | 0.52 (-6%) | 0.49 (-4%) |
| 14 | The differences between "LABEL" and its alternatives. | 0.48 (+0%) | 0.50 (-9%) | 0.48 (-5%) |
| 15 | This text discusses the topic of "LABEL" in detail. | 0.48 (-0%) | 0.51 (-6%) | 0.49 (-4%) |
| 16 | What are the key features of "LABEL"? | 0.48 (-1%) | 0.51 (-8%) | 0.48 (-5%) |

## Conclusion

Depending on the model and dataset, rephrasing labels can be a highly effective strategy, improving the weighted F1 score over the baseline by 30% or more in relative terms in the best cases (for example, jina-embeddings-v2-base-en on GoEmotions and Twitter Eval). Some rephrasing constructions work better when they "hit" exactly the data domain. For example, "This news is about 'LABEL'" performs well on AG News, as observed with both models. Interestingly, generic constructions such as "This is something about 'LABEL'" also work quite well.

Among all the constructions tested, my favorite is "This is seriously about 'LABEL'", as it echoes the "my grandma is dying" vibe in LLM prompting. Either way, these results support our intuition that converting a classification task into a sentence similarity task can boost zero-shot classification performance.

However, we must acknowledge the limitations of this label rephrasing strategy. When applied to the TREC dataset, where labels are abbreviations with very weak semantics (e.g., ['ABBR', 'ENTY', 'DESC', 'HUM', 'LOC', 'NUM']), rephrasing does not work well. In fact, any kind of label rephrasing underperforms the baseline. This is likely because the very limited semantics in these abbreviations get "whitened out" by other words during the rephrasing process. This also explains why repeating the LABEL four times does not drop the performance too much compared to other constructions.

This leaves us with one final question: what is the best and most generic label construction? Is there a specific trick like "This is seriously about 'LABEL'" or can we use DSPy to figure it out via discrete optimization?

In fact, this line of thinking is at the heart of jina-embeddings-v3. The idea is to introduce a learnable special token, such as [ZEROCLS], during training to indicate the task, and to prepend this token at inference time, as in [ZEROCLS] LABEL, to signal the embedding model to "switch" to zero-shot classification mode. In our experiment above, "This is seriously about" essentially plays the role of [ZEROCLS]. There are just a few weeks left until our v3 release, so stay tuned!