In the intricate world of generative AI, boundaries are akin to shifting sands, constantly reshaped by the winds of innovation. Last November, we introduced a fresh perspective with "Search is Overfitted Create; Create is Underfitted Search", urging the community to view these boundaries in a new light. Now, with PromptPerfect's "LLM as a database" feature, we're not merely iterating on a trend; we're putting that philosophy into practice with a tool built around our view of where generative AI is heading.

A Spectrum, Not a Dichotomy

Historically, we've perceived search and creation as two separate realms. But as the generative AI age progresses, it's becoming clear that they're more interconnected than we once believed. Search, in its most rigid form, can be seen as an overfitted version of creation, while creation, with its boundless potential, can be viewed as an underfitted form of search. PromptPerfect's new feature is a nod to this interconnectedness, offering a seamless blend of both.

How to Use the "LLM as a Database" Feature in PromptPerfect

The beauty of the "LLM as a database" feature lies in its adaptability. Users can slide between the exactness of search and the novelty of creation, ensuring a tailored experience that meets specific needs.

Here's a step-by-step breakdown of how users can navigate and leverage this feature:

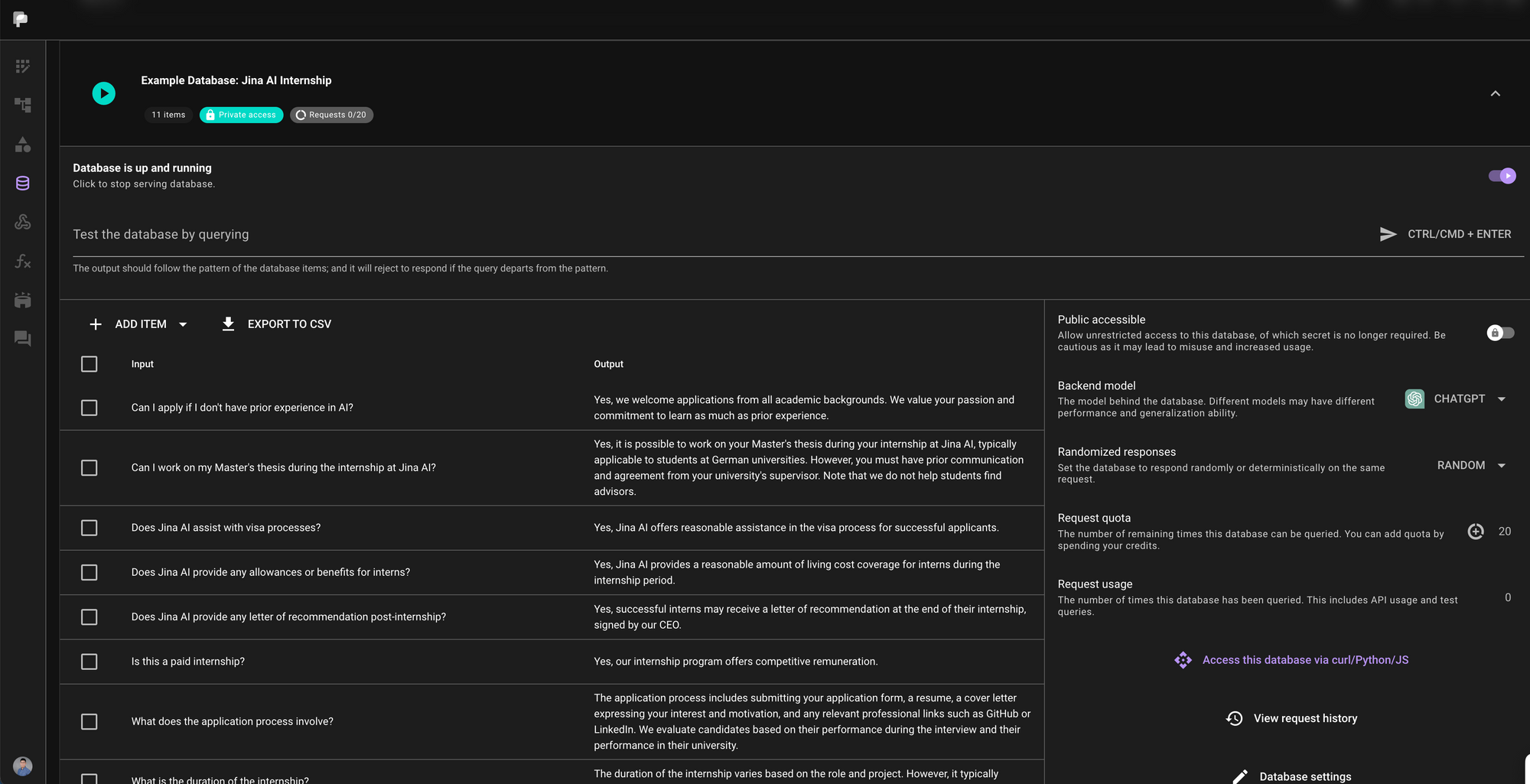

- Accessing the Feature: Upon opening PromptPerfect, users can easily locate and click on the "LLM as a database" tab.

- Database Creation: Users are presented with the option to create a new database. Here, they can specify which backend LLM they wish to use. Whether it's GPT-3.5 Turbo, GPT-4, Claude 2, Llama 2, or any other available model, the choice is at their fingertips.

- Data Import: Flexibility is key. Users can either bulk import their key-value pairs into the database or choose to manually add them one by one, catering to both large-scale and smaller, more specific projects.

- Deployment: Once satisfied with their database, users can deploy it to production. They can make it public or keep it private, in which case a token is required for access. This lets each user choose the balance between accessibility and security that suits them.

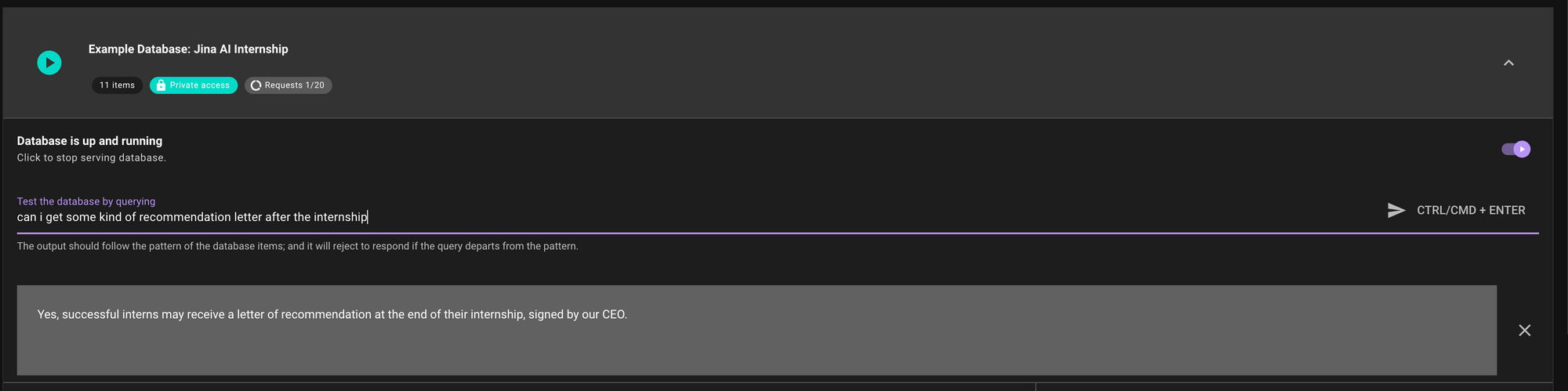

- Querying the Database: The real magic happens here. Users can query their database through a straightforward API using curl, Python, or JavaScript. If the queried key exists in the database, the API returns the corresponding value. The standout feature, however, is how it handles an unfamiliar key: instead of returning an error or a blank, the system draws on the existing key-value pairs to generate and return a plausible value.

While the technology behind "LLM as a database" is complex, interacting with it is straightforward and intuitive. This user-centric design makes it accessible both to tech-savvy users and to those less familiar with the intricacies of LLMs.
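The query behavior described in these steps (return the stored value on an exact hit, otherwise generate a plausible value from the stored pairs) can be sketched locally. This is a minimal illustration under stated assumptions, not PromptPerfect's implementation or API: the class name `KeyValueLLMStore`, the prompt layout, and the `generate` callback (a stand-in for whichever backend LLM is selected) are all hypothetical.

```python
from typing import Callable, Dict, Iterable, Tuple


class KeyValueLLMStore:
    """Hypothetical local model of the "LLM as a database" idea (not PromptPerfect's API)."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        # `generate` stands in for a call to whichever backend LLM was selected.
        self._generate = generate
        self._pairs: Dict[str, str] = {}

    def add(self, key: str, value: str) -> None:
        # Manual entry: add (or overwrite) a single key-value pair.
        self._pairs[key] = value

    def bulk_import(self, pairs: Iterable[Tuple[str, str]]) -> None:
        # Bulk entry: add many pairs at once.
        for key, value in pairs:
            self.add(key, value)

    def build_prompt(self, key: str) -> str:
        # Render every stored pair as an example, then leave the new key's output open.
        examples = [f"Input: {k}\nOutput: {v}" for k, v in self._pairs.items()]
        return "\n\n".join(examples + [f"Input: {key}\nOutput:"])

    def query(self, key: str) -> Tuple[str, bool]:
        """Return (value, exact_hit)."""
        if key in self._pairs:
            # Known key: behave like an ordinary lookup.
            return self._pairs[key], True
        # Unknown key: ask the model to interpolate from the stored pairs.
        return self._generate(self.build_prompt(key)).strip(), False


def fake_llm(prompt: str) -> str:
    # Placeholder for a real model call; always returns the same answer.
    return " 1 0; 0 -1"


db = KeyValueLLMStore(fake_llm)
db.bulk_import([("Rotation by 90 degrees counterclockwise.", "0 -1; 1 0")])
db.add("Reflection about the y-axis.", "-1 0; 0 1")
print(db.query("Reflection about the y-axis."))  # ('-1 0; 0 1', True)
print(db.query("Reflection about the x-axis."))  # ('1 0; 0 -1', False)
```

Passing the model call in as a function keeps the sketch testable without network access; a real client would replace `fake_llm` with a request to the chosen backend.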

What Can "LLM as a Database" Do? A Glimpse into Examples

To illustrate the potential of the "LLM as a database" feature, PromptPerfect includes two built-in examples that showcase its versatility:

How to build a chatbot from an FAQ list

Drawing from real-world needs, we've imported all the FAQ entries from our internship page. The result is an interactive bot that answers questions about our internship program, including questions phrased differently from the original FAQ entries. It shows how businesses can streamline customer or user interactions so that queries are addressed promptly and accurately.

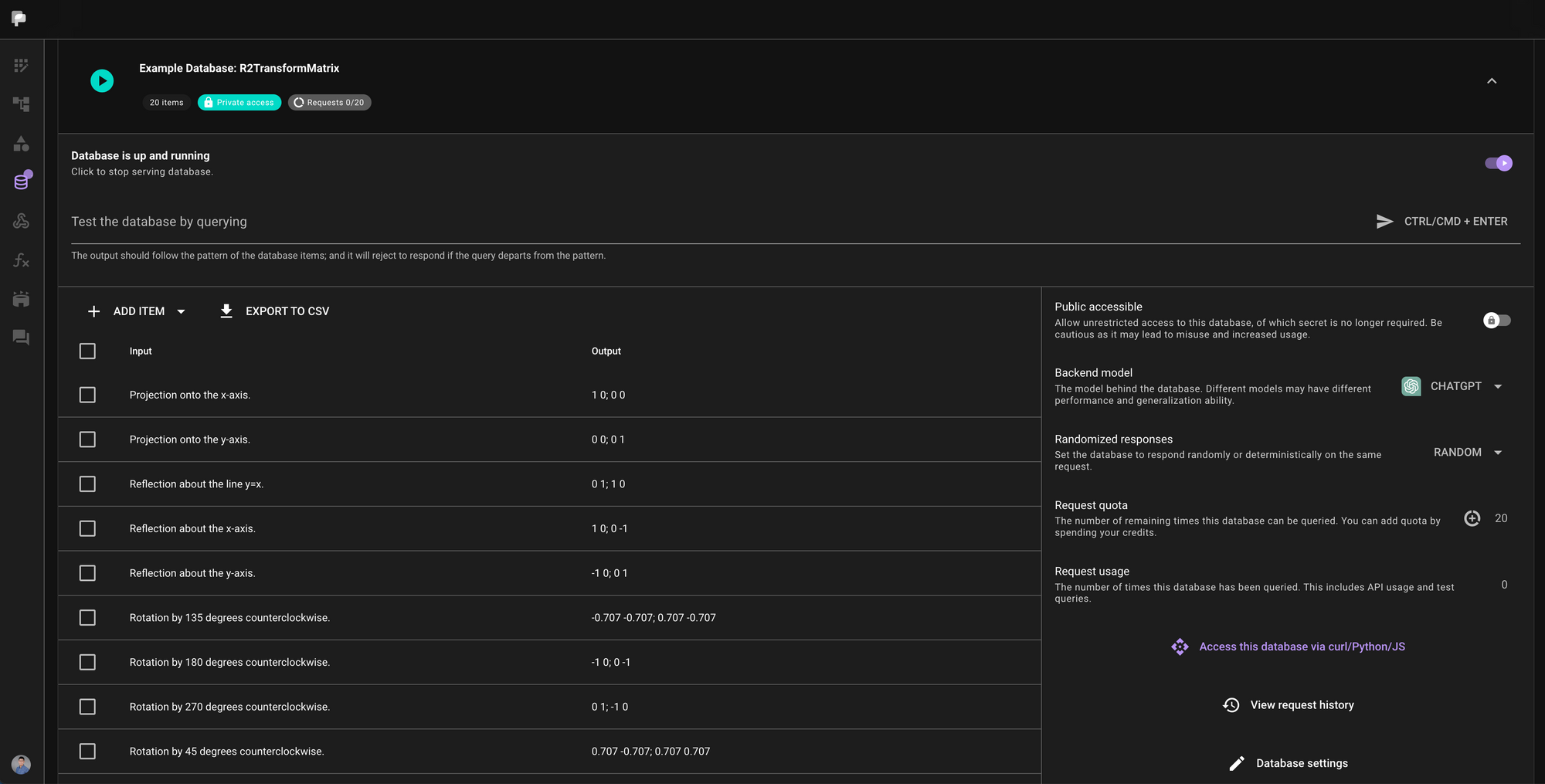

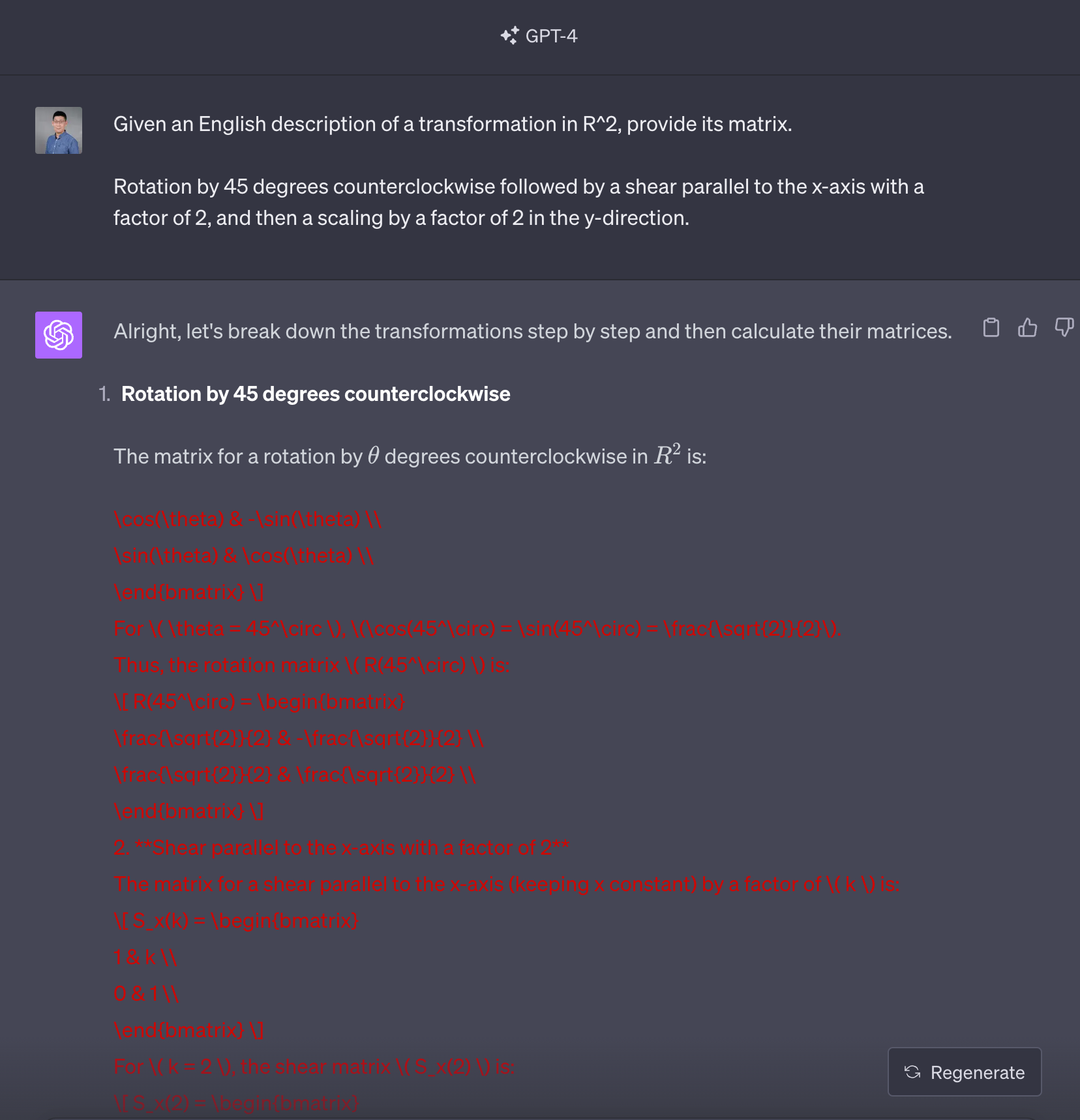
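Importing an FAQ mostly comes down to turning each question-answer entry into a key-value pair. The sketch below assumes a JSON array of `{"input": ..., "output": ...}` records, mirroring the sequence pairs in the mathematical example; the bulk-import format PromptPerfect actually accepts may differ, the function name `faq_to_pairs` is hypothetical, and the FAQ entries are placeholders rather than the real internship FAQ.

```python
import json
from typing import Dict, List


def faq_to_pairs(faq: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Convert FAQ entries into input/output records (an assumed import shape)."""
    pairs: List[Dict[str, str]] = []
    seen = set()
    for entry in faq:
        # Collapse whitespace so questions differing only in spacing share one key.
        question = " ".join(entry.get("question", "").split())
        answer = entry.get("answer", "").strip()
        # Skip incomplete entries and repeated questions (keys should be unique).
        if not question or not answer or question in seen:
            continue
        seen.add(question)
        pairs.append({"input": question, "output": answer})
    return pairs


# Placeholder entries, not the real internship FAQ.
faq = [
    {"question": "  Placeholder question one? ", "answer": "Placeholder answer one."},
    {"question": "Placeholder question two?", "answer": ""},
    {"question": "Placeholder  question one?", "answer": "Duplicate answer."},
]

records = faq_to_pairs(faq)
print(json.dumps(records, indent=2))
```

Keeping the first occurrence of a duplicated question is a design choice for the sketch; a real import might instead prefer the most recent answer.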

How to build a translator for mathematical expressions

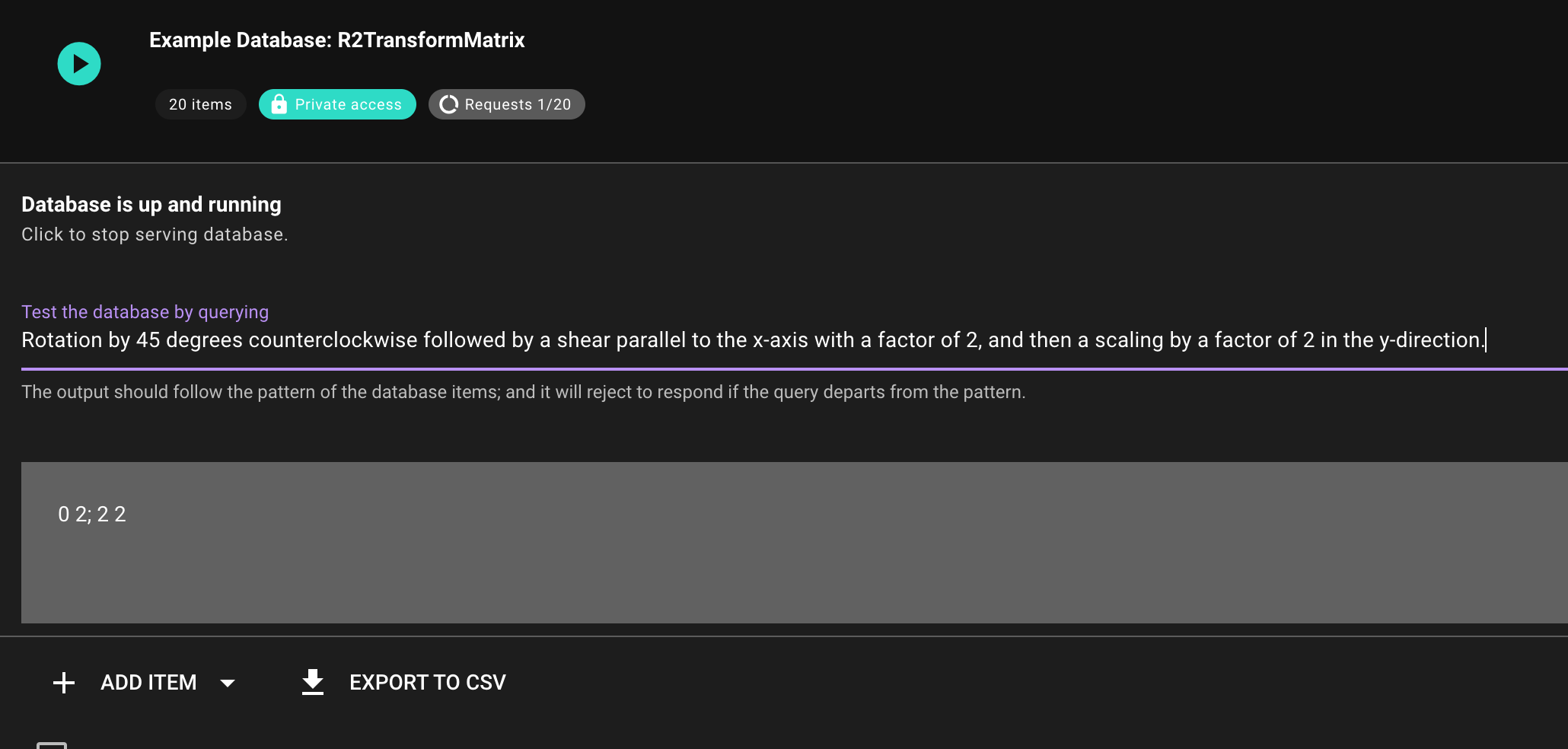

Delving into a more complex domain, we've built a database that bridges the gap between natural language descriptions of mathematical transformations and their matrix representations. This isn't just about translating words into numbers; it's about understanding the underlying mathematical concepts and accurately representing them.

For instance, consider the task of translating an English description of a 2D linear transformation into its matrix form. The database is populated with a variety of sequence pairs, such as:

[
  {"input": "Rotation by 90 degrees counterclockwise.", "output": "0 -1; 1 0"},
  {"input": "Scaling by a factor of 2 in the x-direction.", "output": "2 0; 0 1"},
  {"input": "Reflection about the y-axis.", "output": "-1 0; 0 1"},
  {"input": "Shear parallel to the x-axis with a factor of 2.", "output": "1 2; 0 1"},
  ...
]

This example shows that the system can handle intricate tasks that require both linguistic and mathematical understanding.
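Because the outputs in these pairs are ordinary 2×2 matrices, they can be checked mechanically. The sketch below assumes the "a b; c d" strings are row-major (semicolons separate rows), which is the reading under which all four example pairs are correct, and verifies each matrix by where it sends the basis vectors (1, 0) and (0, 1). The helper names `parse_matrix` and `mat_vec` are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = Tuple[float, float]


def parse_matrix(text: str) -> Matrix:
    """Parse row-major 'a b; c d' notation: semicolons separate rows."""
    return [[float(x) for x in row.split()] for row in text.split(";")]


def mat_vec(m: Matrix, v: Vector) -> Vector:
    # 2x2 matrix-vector product.
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])


pairs = {
    "Rotation by 90 degrees counterclockwise.": "0 -1; 1 0",
    "Scaling by a factor of 2 in the x-direction.": "2 0; 0 1",
    "Reflection about the y-axis.": "-1 0; 0 1",
    "Shear parallel to the x-axis with a factor of 2.": "1 2; 0 1",
}

# Where each description should send e1 = (1, 0) and e2 = (0, 1).
expected = {
    "Rotation by 90 degrees counterclockwise.": ((0.0, 1.0), (-1.0, 0.0)),
    "Scaling by a factor of 2 in the x-direction.": ((2.0, 0.0), (0.0, 1.0)),
    "Reflection about the y-axis.": ((-1.0, 0.0), (0.0, 1.0)),
    "Shear parallel to the x-axis with a factor of 2.": ((1.0, 0.0), (2.0, 1.0)),
}

for description, text in pairs.items():
    m = parse_matrix(text)
    images = (mat_vec(m, (1.0, 0.0)), mat_vec(m, (0.0, 1.0)))
    status = "OK" if images == expected[description] else "MISMATCH"
    print(f"{description} e1 -> {images[0]}, e2 -> {images[1]}: {status}")
```

The same check could be applied to values the model interpolates for unseen descriptions, since a generated matrix is only useful if it moves the basis vectors the way the description says.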

These built-in examples serve as a starting point, inspiring users to envision and implement their own unique use cases. Whether it's simplifying customer interactions or tackling specialized tasks, the "LLM as a database" feature offers a canvas for innovation.

Practical Applications You Can Build with "LLM as a Database"

- Revolutionizing Customer Support: Instead of static responses, imagine a system that evolves with every interaction, learning and improvising so that customers receive relevant solutions.

- Elevating FAQ Bots: These aren't just chatbots. They're dynamic tools that can craft responses on the fly, so that each user query gets a tailored answer.

- Enhancing AI Interactions: Agents that recall past interactions and adapt to future ones, offering a user experience that's constantly refined.

Comparing "LLM as a Database" with Few-shot Learning and RAG

In the intricate tapestry of machine learning methodologies, it's important to understand how a new technique relates to established paradigms. The "LLM as a database" feature introduced by PromptPerfect offers a distinct perspective, so it's worth placing it alongside two prevailing approaches: few-shot learning and RAG.

- Few-shot Learning: Few-shot learning places a small set of examples in the prompt to guide the model's response generation. It emphasizes context, letting a model produce relevant outputs from a handful of guiding examples. While powerful in its own right, few-shot learning is primarily context-driven and limited to as many examples as fit in the prompt.

- Retrieval-augmented Generation (RAG): At the other end of the spectrum, RAG retrieves relevant material from external datastores and adds it to the prompt, drawing on large repositories of information to enrich the generated response. It emphasizes comprehensive data retrieval, so that the model's outputs are informed by a wide array of external data.

- "LLM as a Database" - Striking a Balance: PromptPerfect's "LLM as a database" feature can be visualized as occupying the middle ground between few-shot learning and RAG. It integrates the context-driven approach of few-shot learning with the data-rich capabilities of a database. Users can leverage the richness of key-value pairs for precise responses, while also benefiting from the model's ability to interpolate and generate insights. Moreover, the architecture of "LLM as a database" holds the potential to transition towards a full RAG system, offering users the flexibility to harness extensive external datastores when needed.
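To make the comparison concrete, the sketch below shows how the prompt handed to the model could differ across the three approaches. It is an illustration, not how PromptPerfect works internally: word-overlap (Jaccard) similarity stands in for the embedding search a real RAG system would typically use, all function names are hypothetical, and the optional `k` in `kv_db_lookup` shows one way a key-value store could move toward retrieval. With `k=None` every stored pair becomes an example; with a small `k` only the most similar keys are used.

```python
from typing import Dict, List, Optional, Tuple

Pair = Tuple[str, str]


def overlap(a: str, b: str) -> float:
    """Jaccard overlap of lowercase word sets: a crude stand-in for embedding similarity."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def format_examples(examples: List[Pair], query: str) -> str:
    # Render (input, output) pairs, then leave the query's output open.
    shots = [f"Input: {i}\nOutput: {o}" for i, o in examples]
    return "\n\n".join(shots + [f"Input: {query}\nOutput:"])


def few_shot_prompt(fixed_examples: List[Pair], query: str) -> str:
    # Few-shot: the same small, hand-picked examples for every query.
    return format_examples(fixed_examples, query)


def rag_prompt(corpus: List[str], query: str, k: int = 2) -> str:
    # RAG: retrieve the k most similar passages and prepend them as context.
    top = sorted(corpus, key=lambda doc: overlap(doc, query), reverse=True)[:k]
    context = "\n".join(f"- {doc}" for doc in top)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def kv_db_lookup(store: Dict[str, str], query: str, k: Optional[int] = None) -> Tuple[str, str]:
    # Key-value store: an exact hit returns the stored value directly.
    if query in store:
        return "hit", store[query]
    # Otherwise stored pairs become examples; k=None uses all of them,
    # while a small k keeps only the most similar keys (a step toward RAG).
    ranked = sorted(store.items(), key=lambda kv: overlap(kv[0], query), reverse=True)
    return "prompt", format_examples(ranked[:k], query)


store = {
    "Rotation by 90 degrees counterclockwise.": "0 -1; 1 0",
    "Reflection about the y-axis.": "-1 0; 0 1",
    "Shear parallel to the x-axis with a factor of 2.": "1 2; 0 1",
}
print(kv_db_lookup(store, "Reflection about the y-axis."))
kind, prompt = kv_db_lookup(store, "Reflection about the x-axis.", k=1)
print(kind)
print(prompt)
```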

In essence, the "LLM as a database" feature doesn't seek to replace or overshadow few-shot learning or RAG. Instead, it offers a harmonized approach, bridging the gap between context-driven generation and extensive data retrieval. It's a testament to the evolving nature of machine learning, where hybrid methodologies can harness the strengths of established paradigms to offer enhanced capabilities.

Final Thoughts

PromptPerfect's "LLM as a database" isn't just a feature; it's a thoughtful exploration of the evolving relationship between search and creation. It's an invitation to see beyond traditional boundaries and to experience a tool that adapts to the user's needs. As we continue to navigate the generative AI age, innovations like these not only enhance our experiences but also challenge us to rethink our established paradigms.