OpenAI has been at the forefront of artificial intelligence, consistently introducing tools and APIs that let developers use AI in a variety of applications. One of its more recent offerings, available since the gpt-3.5-turbo-0613 and gpt-4-0613 models, is the function calling feature, and it's creating quite a buzz.

Function calling is a game-changer for developers. It allows you to describe functions to the model and have it intelligently output a JSON object containing the necessary arguments to call those functions. The beauty of this feature is that it bridges the gap between natural language inputs and the structured data required for a wide array of applications - from creating chatbots and converting natural language into API calls to extracting structured data from text.

However, as with any powerful tool, the function calling feature comes with its complexities. Developers need to provide a detailed JSON schema for every function that clearly describes its name, its purpose, and the parameters it accepts. For a large system with numerous functions, this requirement can be daunting.

In this blog post, we'll take a closer look at this challenge and explore how the newly released function debugger from PromptPerfect can assist developers in overcoming this hurdle. We'll delve into the debugger's capabilities, from auto-generating JSON schemas to enabling easy testing and debugging of function calls.

So, buckle up for an in-depth exploration of how to leverage the function debugger from PromptPerfect to tame the OpenAI function call beast. The journey ahead promises to be both enlightening and rewarding.

From Natural Language to Predefined Functions: The Power of OpenAI's Function Calling

The announcement of OpenAI's function calling feature might initially create an impression that it's primarily a tool for retrieving structured data from the model. However, it's the ability to convert natural language into a set of predefined functions that truly embodies its power and significance.

Consider a more interactive scenario - the smart speaker that's become an integral part of our daily lives. Let's say you tell your smart speaker,

"Hey, check the weather for tonight. If it's good, book a table for two outdoors at Luigi's Pizzeria at 7 PM, otherwise, book it indoors."

This one command encompasses two interdependent requests: checking the weather and making a restaurant reservation based on the weather conditions. These actions correspond to two predefined functions, get_weather(location: string, date: string) and book_table(restaurant: string, party_size: int, time: string, location: 'outdoor' | 'indoor'). The function calling feature converts your natural language command into calls to these functions.
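As a rough sketch, the two functions could be described to the model with schemas like the ones below. The parameter names follow the signatures above, and the weather function is called `get_weather`, the name used in the rest of this post. The descriptions and the `required` lists are assumptions made for illustration.

```python
import json

# Hypothetical schemas for the smart speaker example. Parameter names come
# from the signatures in the text; descriptions and "required" are assumed.
SMART_SPEAKER_FUNCTIONS = [
    {
        "name": "get_weather",
        "description": "Get the weather forecast for a location on a given date",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City or address"},
                "date": {"type": "string", "description": "Date or time of day, e.g. tonight"},
            },
            "required": ["location", "date"],
        },
    },
    {
        "name": "book_table",
        "description": "Book a table at a restaurant",
        "parameters": {
            "type": "object",
            "properties": {
                "restaurant": {"type": "string"},
                "party_size": {"type": "integer"},
                "time": {"type": "string"},
                # The 'outdoor' | 'indoor' union becomes a JSON Schema enum.
                "location": {"type": "string", "enum": ["outdoor", "indoor"]},
            },
            "required": ["restaurant", "party_size", "time", "location"],
        },
    },
]

print(json.dumps(SMART_SPEAKER_FUNCTIONS, indent=2))
```

Note how the seating choice is expressed as an `enum`, which constrains the model to one of the two values the booking function understands.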

This functionality of the OpenAI API effectively allows Large Language Models (LLMs) to easily access external tools and APIs. It resonates with other advanced frameworks such as ReAct prompting, introduced by Yao et al., 2022, where LLMs generate both reasoning traces and task-specific actions in an interleaved manner. This synergy between reasoning and acting lets the model interact with external tools to retrieve additional information, leading to more reliable and factual responses.

Similarly, it brings to mind concepts like Auto-GPT, an application that uses OpenAI's text-generating models to interact with software and services online. Auto-GPT is essentially a companion bot that uses GPT-3.5 and GPT-4 models to carry out instructions autonomously, leveraging the OpenAI models' ability to interact with apps, software, and services.
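The interleaving of model decisions and tool calls described above can be sketched as a simple loop. The sketch below makes no network calls: `scripted_model` is a hypothetical stand-in for the chat model, and both functions return canned data. The message shapes (an assistant message carrying a `function_call` with a JSON-encoded `arguments` string, and a `function` role message carrying the result) follow the format used with the 0613 models.

```python
import json

def get_weather(location, date):
    """Stand-in for a real weather lookup; always reports clear skies."""
    return json.dumps({"location": location, "date": date, "forecast": "clear"})

def book_table(restaurant, party_size, time, location):
    """Stand-in for a real booking service; always confirms."""
    return json.dumps({"restaurant": restaurant, "party_size": party_size,
                       "time": time, "location": location, "status": "confirmed"})

AVAILABLE_FUNCTIONS = {"get_weather": get_weather, "book_table": book_table}

def _call(name, **arguments):
    """Build an assistant message that requests a function call."""
    return {"role": "assistant", "content": None,
            "function_call": {"name": name, "arguments": json.dumps(arguments)}}

def scripted_model(messages):
    """Hypothetical stand-in for the chat model, scripted for this one prompt."""
    results = [m for m in messages if m["role"] == "function"]
    if not results:
        return _call("get_weather", location="Luigi's Pizzeria", date="tonight")
    if len(results) == 1:
        forecast = json.loads(results[0]["content"])["forecast"]
        seating = "outdoor" if forecast == "clear" else "indoor"
        return _call("book_table", restaurant="Luigi's Pizzeria",
                     party_size=2, time="7 PM", location=seating)
    return {"role": "assistant", "content": "Your table at Luigi's Pizzeria is booked."}

def run_conversation(user_prompt, model=scripted_model, max_steps=5):
    """Alternate between the model and the requested functions until the model answers."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:
            break  # plain answer, nothing left to execute
        func = AVAILABLE_FUNCTIONS[call["name"]]
        result = func(**json.loads(call["arguments"]))
        # Feed the result back so the model can decide the next step.
        messages.append({"role": "function", "name": call["name"], "content": result})
    return messages

history = run_conversation("Check the weather for tonight and book a table at Luigi's Pizzeria.")
for message in history:
    print(message["role"], "->", message.get("function_call") or message["content"])
```

Swapping `scripted_model` for a function that sends `messages` to the real API would keep the loop unchanged, which is the main reason for passing the model in as a parameter.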

The function calling feature brings us one step closer to the seamless integration of artificial intelligence into our daily lives. It allows for active and dynamic interaction with external tools and APIs, going beyond mere structured data retrieval. However, the journey to achieving this fluid interaction is not without challenges. Ensuring that these function calls are triggered correctly and yield the desired results requires thorough debugging and fine-tuning, which is where PromptPerfect's function debugger comes into play. In the next section, we'll delve into how this tool can be utilized to enhance and streamline the development process of such AI systems.

Bridging the Gap: From OpenAI's Function Calling to Developer's Toolchain

Even though OpenAI's function calling feature offers a powerful capability to convert natural language into predefined functions, there are still some obstacles for developers when it comes to practical implementation. Let's delve into these challenges and see how PromptPerfect can provide solutions to overcome them.

Problem 1: JSON Schema Generation and Maintenance

To use OpenAI's function calling feature, developers need to provide a JSON Schema for each function. This schema is crucial because it dictates how the function will be recognized and called by the language model. However, writing these schemas manually can be quite challenging, especially when there are multiple functions involved.

On top of that, maintaining these schemas can also become increasingly complex as the number of functions grows or as functions evolve over time.

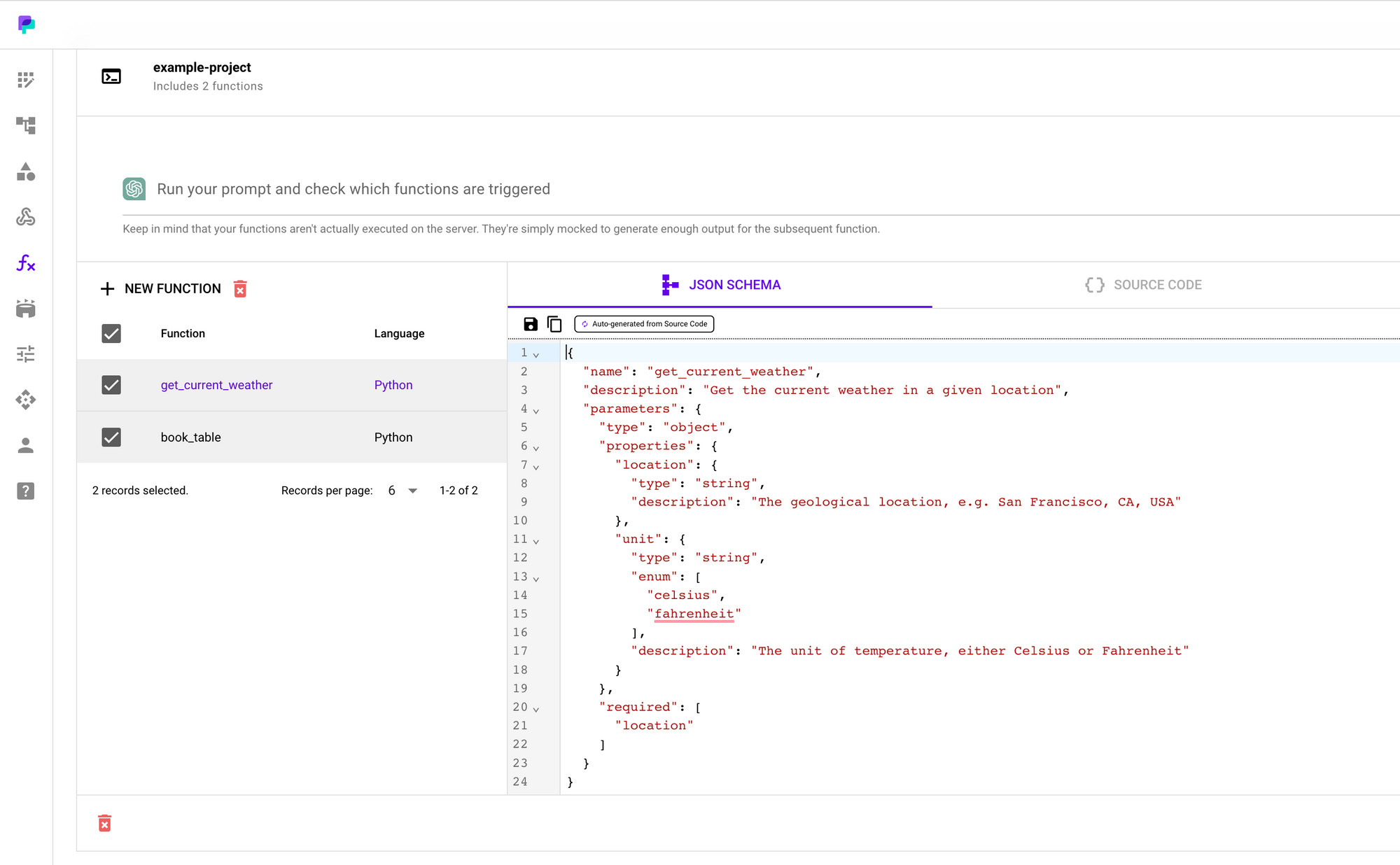

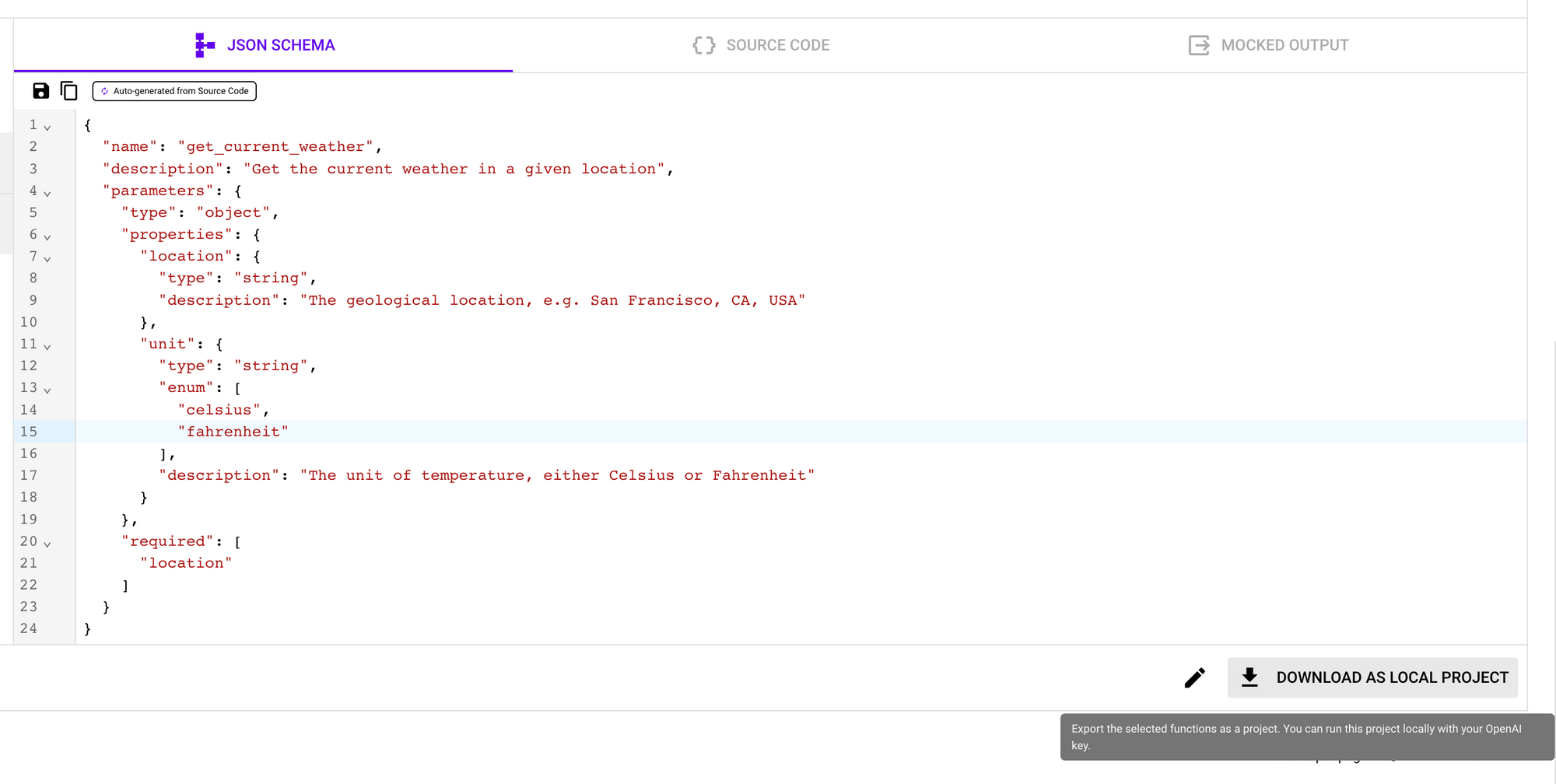

Here is an example of a function and its corresponding JSON Schema:

```python
import json


def get_current_weather(location, unit="fahrenheit"):
    """Get the current weather in a given location"""
    # Hard-coded sample data; a real implementation would query a weather API.
    weather_info = {
        "location": location,
        "temperature": "72",
        "unit": unit,
        "forecast": ["sunny", "windy"],
    }
    return json.dumps(weather_info)
```

```json
{
  "name": "get_current_weather",
  "description": "Get the current weather in a given location",
  "parameters": {
    "type": "object",
    "properties": {
      "location": {
        "type": "string",
        "description": "The city and state, e.g. San Francisco, CA"
      },
      "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]}
    },
    "required": ["location"]
  }
}
```

You can see that even for a relatively simple function, the JSON schema can be quite verbose.
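For context, a schema like this travels with every chat request in the `functions` field. The sketch below only assembles such a request body and prints it; actually sending it is left out, and the helper name `build_request` and the example prompt are assumptions made for illustration.

```python
import json

weather_schema = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "The city and state, e.g. San Francisco, CA",
            },
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}

def build_request(user_prompt, functions, model="gpt-3.5-turbo-0613"):
    """Assemble a chat completion request body that advertises the functions."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "functions": functions,
        # "auto" lets the model choose between answering and calling a function.
        "function_call": "auto",
    }

payload = build_request("What's the weather like in Boston?", [weather_schema])
print(json.dumps(payload, indent=2))
```

With ten or twenty functions, the `functions` list grows accordingly, which is where hand-maintaining these schemas starts to hurt.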

Solution: PromptPerfect's Automatic JSON Schema Generation

PromptPerfect comes with a feature that automatically generates the JSON Schema for your functions, significantly reducing the manual work and potential errors. This feature not only generates the schema but also allows developers to modify it according to their needs. On top of that, PromptPerfect comes with a linter that validates the correctness of the JSON Schema on the fly, providing real-time feedback and ensuring the schema's validity.
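To give a feel for what automatic generation and linting involve, here is a minimal sketch built on Python's `inspect` module. It is not PromptPerfect's implementation, which this post does not describe; the type mapping, the fallback to `"string"` for unannotated parameters, and the lint rules are all assumptions.

```python
import inspect
import json

# Assumed mapping from Python annotations to JSON Schema types.
_TYPE_MAP = {str: "string", int: "integer", float: "number",
             bool: "boolean", list: "array", dict: "object"}

def schema_from_function(func):
    """Derive a function-calling schema from a signature and docstring."""
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated or unknown types fall back to "string" in this sketch.
        properties[name] = {"type": _TYPE_MAP.get(param.annotation, "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }

def lint_schema(schema):
    """Return a list of problems found in a schema; an empty list means none."""
    problems = []
    if not schema.get("name"):
        problems.append("missing 'name'")
    params = schema.get("parameters")
    if not isinstance(params, dict) or params.get("type") != "object":
        problems.append("'parameters' must be an object schema")
        return problems
    for name in params.get("required", []):
        if name not in params.get("properties", {}):
            problems.append(f"required parameter '{name}' is not defined in 'properties'")
    return problems

def get_current_weather(location: str, unit: str = "fahrenheit"):
    """Get the current weather in a given location"""
    return json.dumps({"location": location, "unit": unit})

schema = schema_from_function(get_current_weather)
print(json.dumps(schema, indent=2))
print("lint problems:", lint_schema(schema))
```

A generator like this cannot infer details such as the `enum` for `unit` or a helpful parameter description, which is why being able to edit the generated schema afterwards matters.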

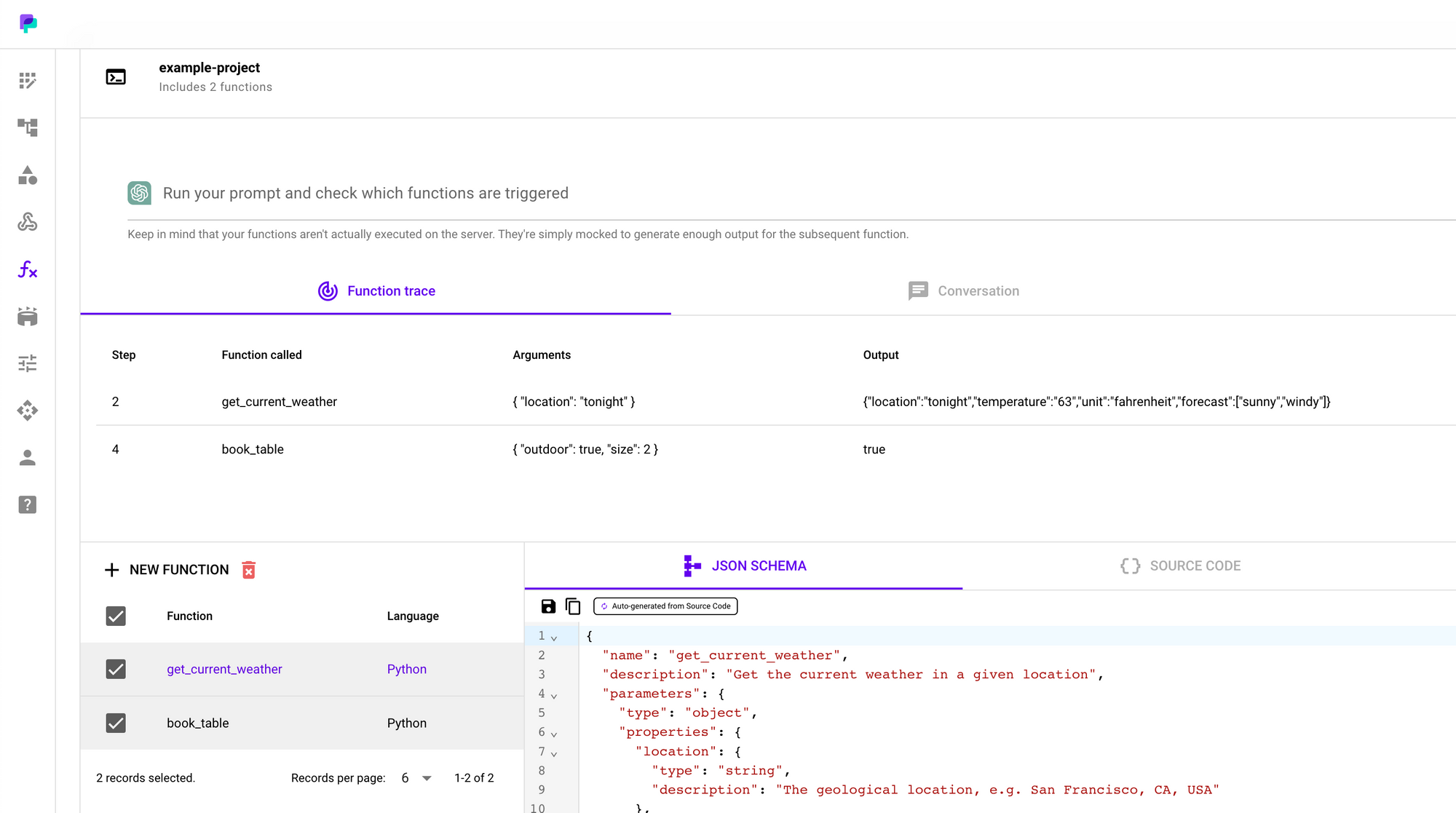

Problem 2: Function Call Tracking

When multiple functions are involved, tracking the execution flow becomes challenging. To illustrate, let's go back to our smart speaker example where we had two functions: get_weather and book_table.

Suppose you provide a prompt like, "Hey, check the weather tonight. If it's good, book a table for two outside, otherwise inside." You'd want to know:

- Did both get_weather and book_table get called?

- In what order were they called?

- What arguments were passed to each function?

Understanding these details is critical for debugging and refining the interaction of the language model with your functions.

For instance, if the book_table function is not being called as expected, you'd need to know whether it's because the get_weather function didn't return the expected results, or because the language model didn't understand the conditional part of the prompt correctly.

In a more complex scenario, if these functions call each other or there are more conditions involved, the execution flow can get even harder to track.

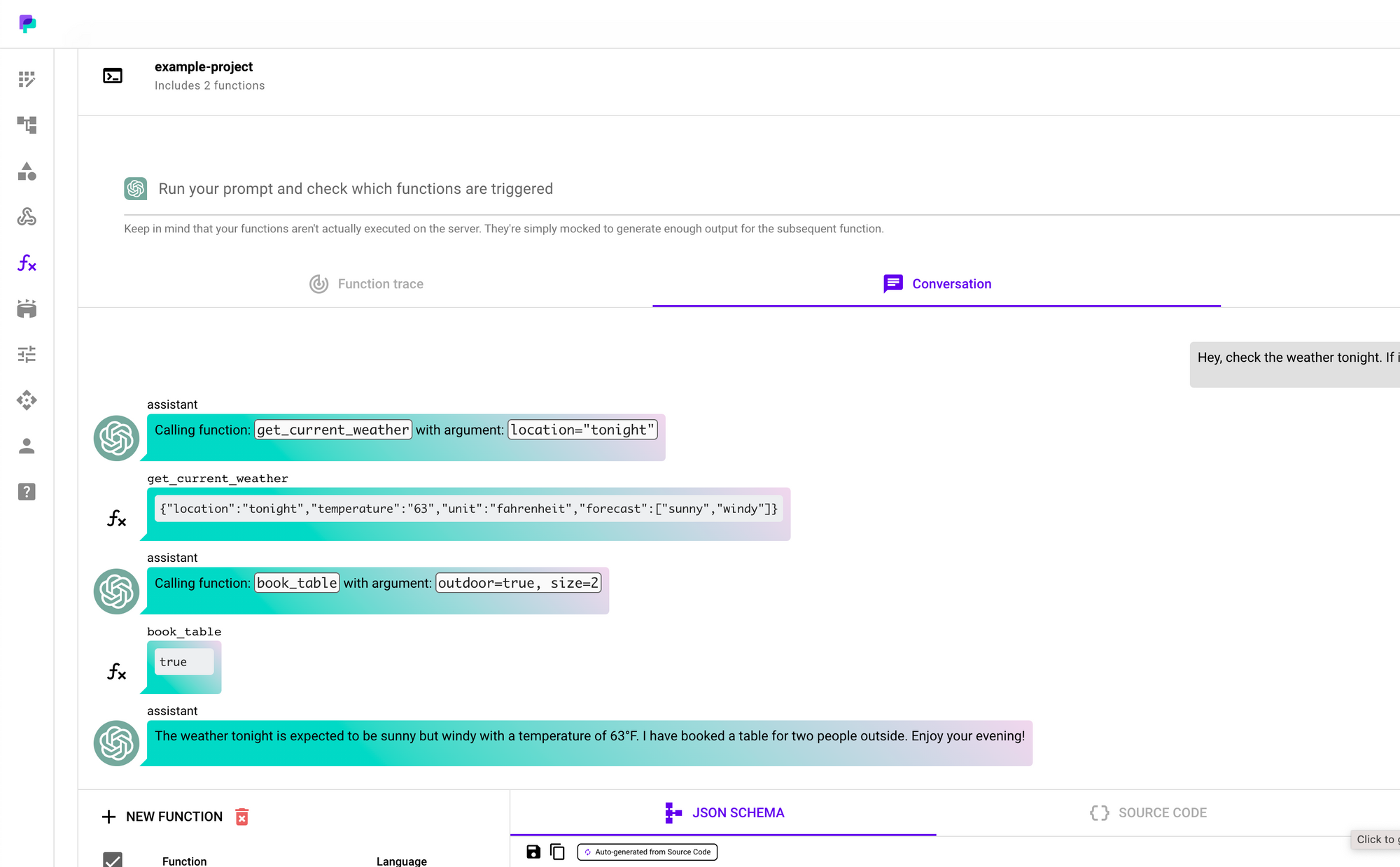

Solution: PromptPerfect's Debug Trace Feature

To address these challenges, PromptPerfect offers a Debug Trace Feature, which acts like a debugger in a standard IDE. This feature provides detailed tracking information, including:

- A step-by-step execution trace, allowing you to see the order in which the functions were called.

- Argument watching, showing the exact arguments that were passed to each function call.

With this feature, you can easily test the prompt and track down any issues. For example, you could check the arguments that were passed to book_table to see whether the booking was made for outdoor or indoor seating, or you could inspect the results returned by get_weather to verify that the weather conditions were interpreted correctly.
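The kind of information such a trace exposes can be approximated locally with a small decorator. This is only an illustrative sketch, not how PromptPerfect's debugger works internally; the `traced` decorator, the `TRACE` list, and the stand-in function bodies are all hypothetical.

```python
import functools
import json

TRACE = []  # one entry per function call, in the order the calls started

def traced(func):
    """Record the name, arguments, and result of every call to func."""
    @functools.wraps(func)
    def wrapper(**kwargs):
        entry = {"step": len(TRACE) + 1, "function": func.__name__,
                 "arguments": kwargs, "result": None}
        # Append before calling so nested calls keep their start order.
        TRACE.append(entry)
        entry["result"] = func(**kwargs)
        return entry["result"]
    return wrapper

@traced
def get_weather(location, date):
    """Stand-in weather lookup with a canned forecast."""
    return json.dumps({"forecast": "clear"})

@traced
def book_table(restaurant, party_size, time, location):
    """Stand-in booking call that always confirms."""
    return json.dumps({"status": "confirmed", "location": location})

# Simulate the two calls the model would request for the smart speaker prompt.
get_weather(location="Luigi's Pizzeria", date="tonight")
book_table(restaurant="Luigi's Pizzeria", party_size=2, time="7 PM", location="outdoor")

for entry in TRACE:
    print(entry["step"], entry["function"], entry["arguments"], "->", entry["result"])
```

Reading the trace top to bottom answers the three questions from earlier: which functions ran, in what order, and with which arguments.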

PromptPerfect's features bridge the gap between OpenAI's function calling and the developer's toolchain, simplifying the process and making it easier to integrate this powerful capability into your applications.

Closing the Loop: Running Your Project Locally

After debugging and perfecting the interaction between the language model and your functions with PromptPerfect, the next step is to incorporate it into your local development environment. This is where PromptPerfect really completes the development loop.

With a simple click, PromptPerfect allows you to download your project, including all the source code and JSON Schemas, and run it locally. Currently, PromptPerfect supports Python for local deployment. Once downloaded, all you need to do is provide your own OpenAI API access token, and you're ready to go.
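How the downloaded project expects to receive the token is not described here. One common approach, shown below as an assumption rather than as the project's actual mechanism, is to read it from the `OPENAI_API_KEY` environment variable so that it never ends up in source control. The helper `load_api_key` is hypothetical.

```python
import os

def load_api_key(env_var="OPENAI_API_KEY"):
    """Read the API key from the environment and fail early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before running the project")
    return key

if __name__ == "__main__":
    try:
        load_api_key()
        print("API key found")
    except RuntimeError as err:
        print(err)
```

Failing at startup with a clear message is usually easier to diagnose than an authentication error surfacing in the middle of a conversation.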

The downloaded code is well-documented and easy to integrate into your existing codebase. This feature accelerates the development process by saving you the time and effort of setting up everything from scratch.

To summarize, here is the typical user journey with PromptPerfect's function debugger:

- Upload your functions: You upload the source code of each function to PromptPerfect.

- Generate and edit JSON Schemas: PromptPerfect automatically generates the JSON Schema for every function. You can edit the schemas or the source code directly in the online editor if needed.

- Test and trace functions: A test prompt environment is provided for function tracing. You can see which functions are called and in what order, with what arguments, to ensure everything works as expected.

- Download your project: Once everything works well, you can download your project and run it locally using your own OpenAI API access token.

PromptPerfect streamlines the development process, making it easier than ever to leverage OpenAI's function call capabilities in your own applications.

Wrapping Up

The advent of function calling in OpenAI's language models has undoubtedly opened up new possibilities. However, adopting this powerful tool can be a challenging process. PromptPerfect simplifies it with automated JSON Schema generation, streamlined debugging, and function call tracing.

If you're intrigued and want to see PromptPerfect's function debugger in action, check out our video tutorial. Make sure to turn on the sound for complete insights.

We're excited to see what you create with this powerful toolset. Here's to transforming your brilliant ideas into reality. Happy coding!

Please don't hesitate to reach out with any questions or feedback. We're continually refining PromptPerfect based on user input and would love to hear your thoughts.