Reading the news (well, watching YouTube since I'm lazy) is so much fun these days, and not at all repetitive in any way:

Oh yeah, machines are dooming music too.

However, if you're reading this, you're probably already in the AI space, working with the very tools that will end the world and keep humanity as either pets or slaves. Or both.

But not you, of course. You're one of the prompt engineers, that elite breed of cool hacker types who are the machine whisperers. Your job won't be in jeopardy, right?

...right?

Guess again, sunshine. New tools are coming along that automate prompt engineering itself. Imagine prompts creating prompts creating prompts. And in a world that creates its own prompts, guess who isn't so invaluable anymore?

What does this mean for prompt engineers? It means things are going to change, and change fast. In some ways for the better, in some ways for the rockier. Rockier, that is, if you don't keep up.

## A bit of context

Think about "traditional" prompt engineering (you know, like your father and grandfather before him used to do in the prompt mines).

You use the GPT playground, fiddle with some parameters, plug in your prompt and hope for the best. If that doesn't work, you re-word the prompt and try again. Lather, rinse, repeat. Finally, you get something that'll do the job, at least well enough.

That works, technically, but it's slow and frustrating. There's a lot of guessing, praying to whatever gods reside within the machine, and pulling your hair out in frustration.

What if there was a new way? A better way? A more perfect way?

As you may have guessed, that's where PromptPerfect comes in.

PromptPerfect lets you optimize and streamline prompts, create templates, reverse engineer prompts from images, validate with multiple models, and more. It all comes wrapped in a nifty web interface, or via an API if you're in the mood for bulk processing.

What's more, it goes beyond text generation: it also handles prompts for Stable Diffusion, Midjourney, and other image generation services.

If you think of prompts as the API for LLMs and image generators, then PromptPerfect is the IDE for prompts.

## Prompts as the API for LLMs

Typically, an API is a set of methods you call to talk to a service. With LLMs, though, you talk to the API in natural language:

- Prompt for translation: `Translate the following English sentence into French: 'Hello, how are you?'`
- Prompt for summarization: `Summarize the given article on renewable energy in 3-4 sentences.`
- Prompt for creative writing: `Write a poem about the beauty of nature.`
- Prompt for mathematical computation: `Calculate the square root of 625.`
- Prompt for factual question answering: `What is the capital of Australia?`
- Prompt for idea generation: `Generate a list of 10 potential interview questions for a software engineer role.`
- Prompt for writing a specific type of text (email): `Compose an email to invite colleagues for a team-building event next week.`
- Prompt for recipe suggestion: `Suggest a recipe for a vegetarian lasagna.`
- Prompt for procedural guidance: `Provide step-by-step instructions on how to change a flat tire.`
- Prompt for generating humorous content: `Tell me a joke.`
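To make the analogy concrete, here is a minimal Python sketch in which a few of the prompts above play the role of API methods. The function names are hypothetical, and the message shape (a list of `{"role": ..., "content": ...}` dicts) is an assumption modeled on common chat-completion APIs; no model is actually called.

```python
from typing import Dict, List

Message = Dict[str, str]


def as_messages(prompt: str) -> List[Message]:
    """Wrap a natural-language prompt in a chat-style message list."""
    return [{"role": "user", "content": prompt}]


# Hypothetical "methods": each one is just a prompt with its parameters filled in.
def translate(sentence: str, target_language: str = "French") -> List[Message]:
    return as_messages(
        f"Translate the following English sentence into {target_language}: '{sentence}'"
    )


def summarize(topic: str, length: str = "3-4 sentences") -> List[Message]:
    return as_messages(f"Summarize the given article on {topic} in {length}.")


def ask(question: str) -> List[Message]:
    # Factual questions need no wrapper text at all.
    return as_messages(question)


if __name__ == "__main__":
    for call in (
        translate("Hello, how are you?"),
        summarize("renewable energy"),
        ask("What is the capital of Australia?"),
    ):
        print(call)
```

The "signature" of each method is the wording itself: change the sentence and you have changed the call.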

## Types of prompts

Prompting has come a long way from "tell me a story of when harry and draco fell in love". Let's look at a few different types of prompts.

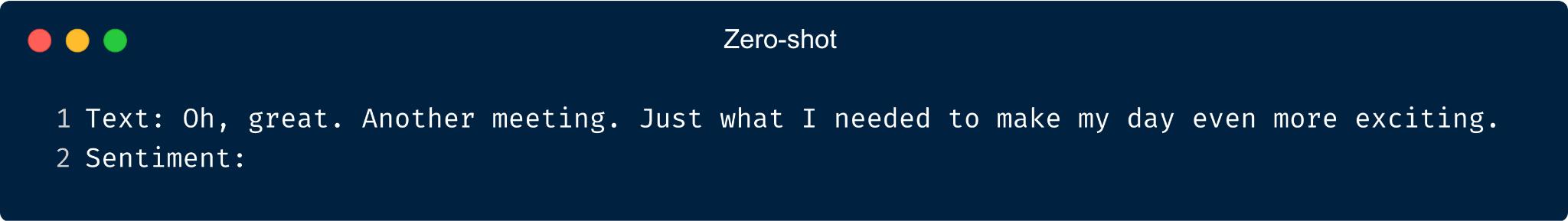

### Zero-shot prompts

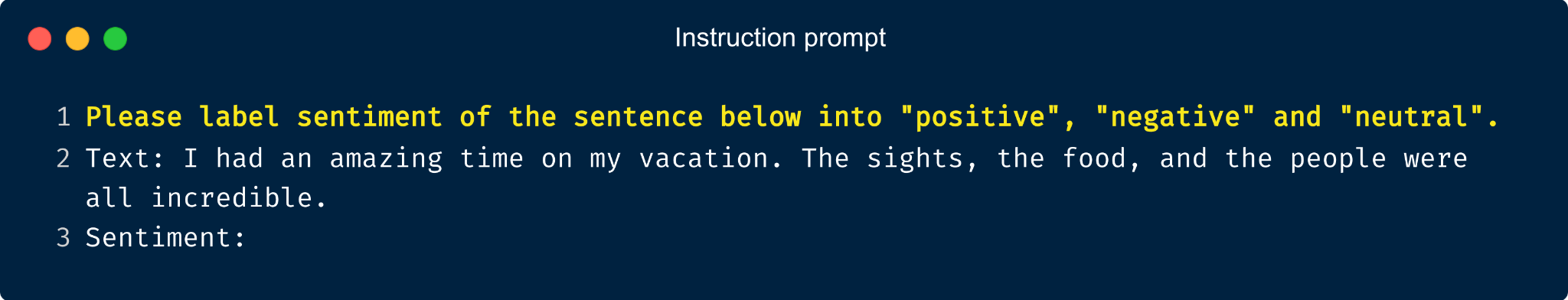

Zero-shot prompts are the simplest kind: you give the model a task with no examples and no extra context, for example asking for the sentiment of a single string.

In short, you provide a test input (here, `Oh great, another meeting...`) and ask for the label you want back. We can represent this concept as:

```
{test}
```

### Few-shot prompts

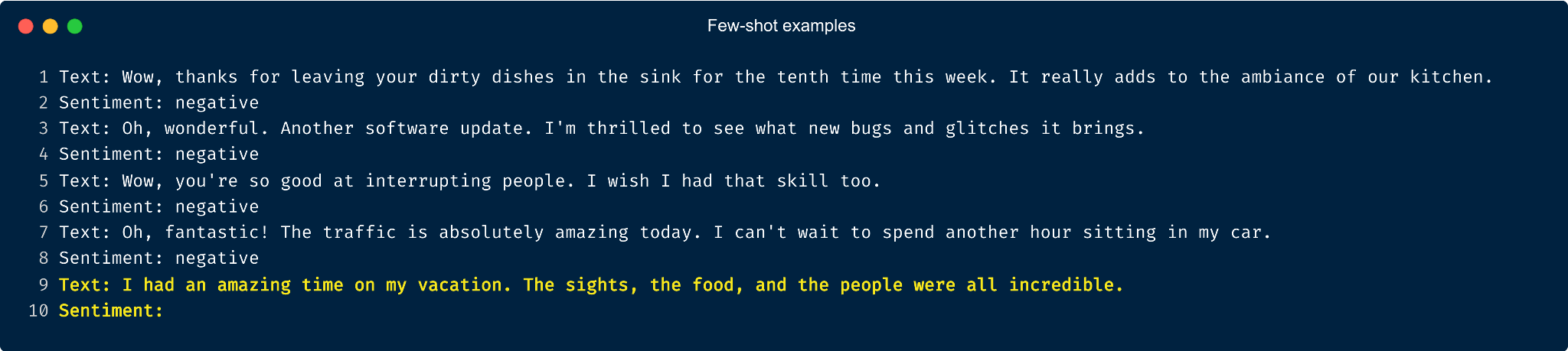

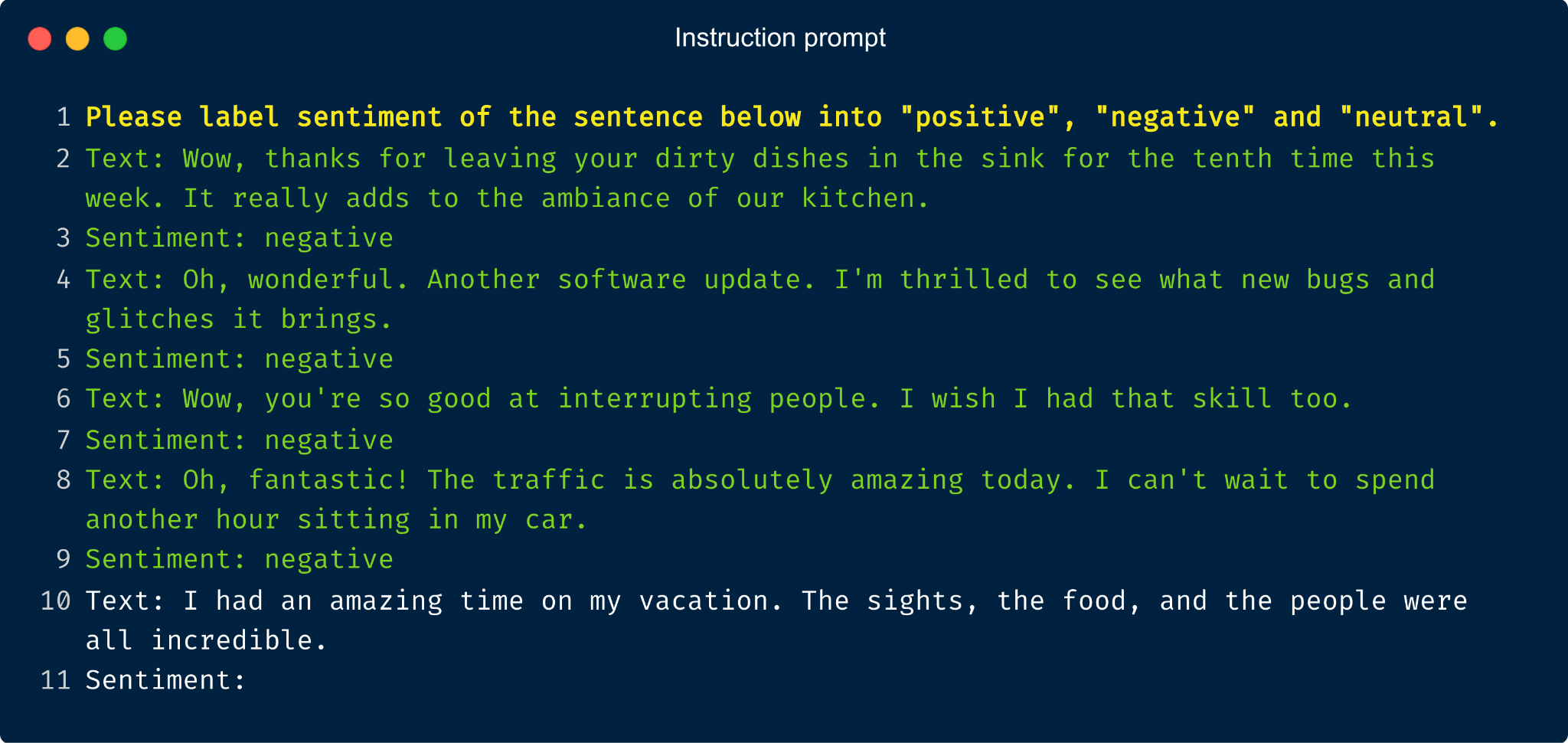

Few-shot prompts present the LLM with several high-quality demonstrations, each consisting of both input and desired output, on the target task. As the model first sees good examples, it can better understand human intention and criteria for what kinds of answers are wanted. Therefore, few-shot learning often leads to better performance than zero-shot.

You can think of this as:

```
{
  [training...]
  test
}
```

Some things to bear in mind:

- The output labels aren't constrained: the model may answer with labels outside the subset you want to work with.

- Few-shot prompts consume more tokens, so you may hit the context length limit when the input and output texts are long.

- Performance can vary dramatically, from near-random guesses to near state-of-the-art, depending on the prompt format, the choice of training examples, and the order of those examples.
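Two of these caveats, token consumption and sensitivity to which examples you include, can be handled when you assemble the prompt. The sketch below builds a zero- or few-shot prompt from (input, output) demonstrations and stops adding demonstrations once a rough budget would be exceeded. The `Input:`/`Output:` layout is an assumption, and counting whitespace-separated words is only a crude stand-in for a real tokenizer.

```python
from typing import List, Tuple

Demo = Tuple[str, str]  # (input, desired output)


def rough_token_count(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def build_few_shot_prompt(
    test_input: str, demos: List[Demo], max_tokens: int = 200
) -> str:
    """Assemble demonstrations followed by the test input.

    With an empty `demos` list this degenerates to a zero-shot prompt.
    Demonstrations are added in the given order until the rough budget
    would be exceeded; the test input is always included.
    """
    test_block = f"Input: {test_input}\nOutput:"
    used = rough_token_count(test_block)
    blocks: List[str] = []
    for inp, out in demos:
        block = f"Input: {inp}\nOutput: {out}"
        cost = rough_token_count(block)
        if used + cost > max_tokens:
            break  # stop early rather than overflow the context window
        blocks.append(block)
        used += cost
    blocks.append(test_block)
    return "\n\n".join(blocks)


if __name__ == "__main__":
    demos = [("I love this!", "positive"), ("This is awful.", "negative")]
    print(build_few_shot_prompt("Oh great, another meeting...", demos))
```

Because results can swing with example order, it may be worth trying a few orderings of `demos` rather than trusting the first one.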

### Instruction prompts

Instruction prompts take a zero- or few-shot prompt and prepend a task instruction that gives more fine-grained directions. These can be zero-shot:

```
{
  task instruction,
  test
}
```

...or few-shot:

```
{
  task instruction,
  [training...],
  test
}
```

### Chain of thought prompts

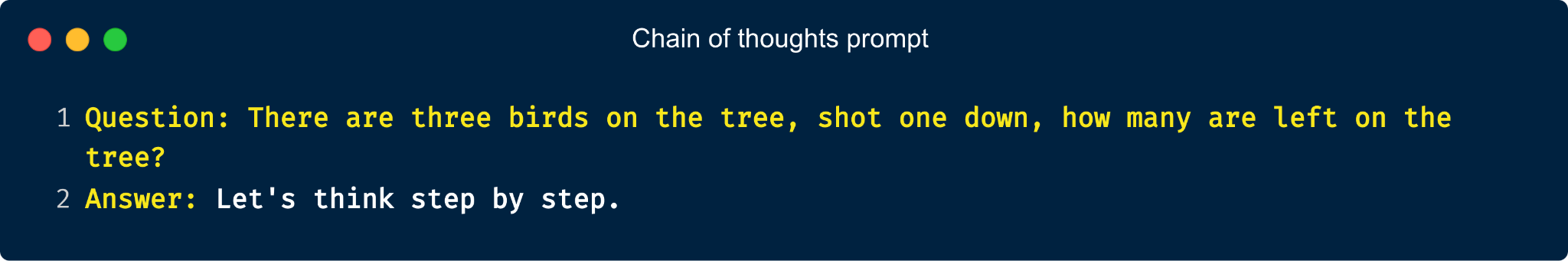

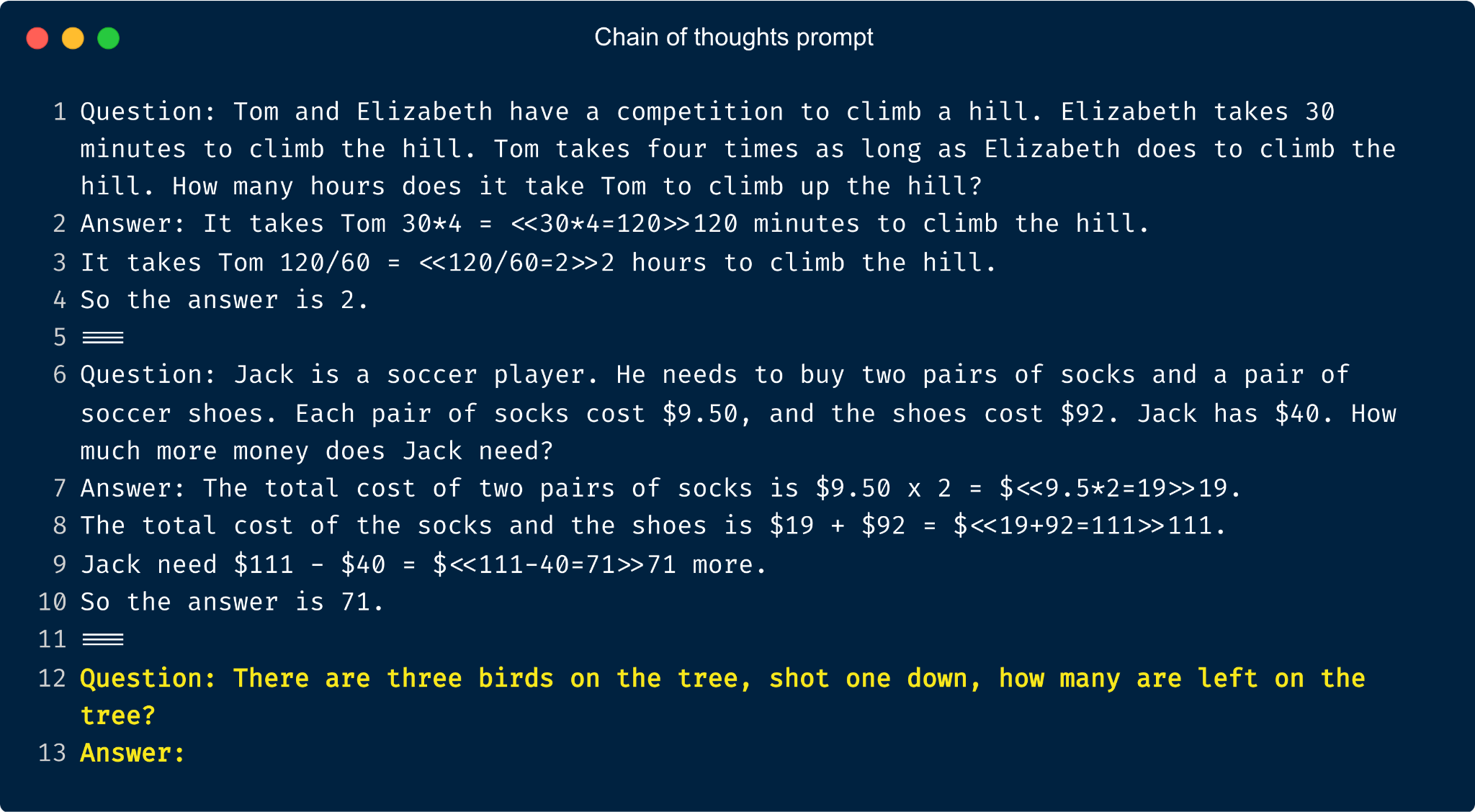

Chain of thought prompts ask the LLM to walk through its thought process, actually making it "think" through the steps of a task and provide (usually) better output. Again, they can be zero-shot:

```
{
  test,
  "let's think step by step"
}
```

...or few-shot, where we provide human-written chains of thought for a set of training examples, "inspiring" the LLM to do the same when generating output:

```
{
  [(training, thought)...]
  test
}
```

### Challenges of more complex prompts

Of course, more complex prompts bring more challenges:

- Not all LLMs understand complex prompts (the best at present is GPT-4)

- Limited context window length

- Cost per token (in both money and time)

- Escaping problems: unintended escapes caused by delimiters or overridden instructions, or malicious escapes through user-supplied few-shot examples

- Probabilistic output: longer prompts mean higher variance in the output, which makes them harder to debug

One solution is to break a long prompt into a chain of simpler prompts, using tools like LangChain.
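The chaining idea can be sketched without any framework. Below, a task is split into two short prompts, and the output of each step feeds the next. This is plain Python, not LangChain's API, and `fake_llm` is a hypothetical offline stub standing in for whatever model client you use. Returning every intermediate output addresses the debugging problem: you can inspect each short step instead of one long, high-variance answer.

```python
from typing import Callable, List

LLM = Callable[[str], str]


def run_chain(templates: List[str], llm: LLM, initial_input: str) -> List[str]:
    """Run simple prompts in sequence, feeding each output into the next.

    Each template contains the marker "{input}", which is replaced by the
    previous step's output (or by `initial_input` for the first step).
    Returns every intermediate output so each step can be inspected.
    """
    outputs: List[str] = []
    current = initial_input
    for template in templates:
        prompt = template.replace("{input}", current)
        current = llm(prompt)
        outputs.append(current)
    return outputs


def fake_llm(prompt: str) -> str:
    # Hypothetical offline stub; swap in a real model client here.
    return f"<answer to: {prompt}>"


if __name__ == "__main__":
    steps = [
        "Summarize this article in 3-4 sentences: {input}",
        "Translate the following text into French: {input}",
    ]
    for i, out in enumerate(run_chain(steps, fake_llm, "ARTICLE TEXT"), start=1):
        print(f"step {i}: {out}")
```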

## Creating better prompts

Now that we know the different types of prompts, we can start using PromptPerfect to create better prompts.

### Optimize your first prompt

We all need to start somewhere, and this is where. Watch our handy-dandy video to get a feel for how things work and start optimizing your first prompt.

### Streamline prompts

PromptPerfect's Streamline option gives you the power to endlessly refine and personalize your prompts. It's not about one-size-fits-all here; it's about creating a journey of continuous improvement that suits you. You can adjust your prompts, bit by bit, learning and adapting as you go. Streamline doesn't just offer a set of tools; it gives you an open road for exploration, growth, and innovation. Dive in, start tweaking, and watch how your ideas evolve.

### Reverse engineer image prompts

It's not just LLMs that are changing the world. Image generation algorithms have gotten in on the action too. Now you can unlock the secrets of the prompts that create great images with PromptPerfect's reverse engineering feature.

### Create few-shot prompts

A few-shot prompt serves as a well-structured guide for your tasks. It neatly packages an instruction, a selection of examples, and your target task all in one place. But what makes it stand out? It's the ability to harness the in-context learning power of LLMs, allowing you to address a wide array of complex tasks efficiently and effectively. It's not just about getting the job done; it's about paving a clearer, more informed path to your goal.
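The three parts named above (instruction, examples, target task) map directly onto the instruction-prompt schema from earlier in this post. The sketch below assembles them into a single string and can optionally append the zero-shot chain-of-thought trigger "Let's think step by step". The `Q:`/`A:` layout and the function name are assumptions for illustration, not the format PromptPerfect generates.

```python
from typing import List, Tuple


def package_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    target: str,
    chain_of_thought: bool = False,
) -> str:
    """Combine an instruction, worked examples, and the target task."""
    parts = [instruction.strip()]
    for question, answer in examples:
        parts.append(f"Q: {question}\nA: {answer}")
    final = f"Q: {target}\nA:"
    if chain_of_thought:
        # Zero-shot chain-of-thought trigger placed after the answer cue.
        final += " Let's think step by step."
    parts.append(final)
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(package_prompt("Answer the arithmetic question.", [("2 + 2?", "4")], "3 + 5?"))
```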

### Prompt templates

Think of prompt templates as your own reusable magic spells, packed with variables that you can swap out at will. They're like handy little functions that you can call upon time and again. Now, how do you create these variables? It's simple: you declare them right in the prompt using `[VAR]` or `$VAR`. They're like placeholders waiting for your command. Once you've got the hang of it, you'll see how these versatile templates can become a powerful ally in your creative process.
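To see what declaring variables as `[VAR]` or `$VAR` buys you, here is a minimal sketch of filling such a template in Python. It assumes variable names are uppercase letters, digits, and underscores, and it leaves unknown placeholders untouched so missing values are easy to spot; PromptPerfect's own parsing rules may differ.

```python
import re
from typing import Mapping

# Matches [VAR] or $VAR, where VAR is an uppercase identifier (an assumption).
PLACEHOLDER = re.compile(r"\[([A-Z][A-Z0-9_]*)\]|\$([A-Z][A-Z0-9_]*)")


def fill_template(template: str, values: Mapping[str, str]) -> str:
    """Replace each known placeholder with its value."""

    def substitute(match: re.Match) -> str:
        name = match.group(1) or match.group(2)
        # Unknown variables are left as-is rather than silently dropped.
        return values.get(name, match.group(0))

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    template = "Write a [TONE] email inviting $TEAM to a team-building event on [DATE]."
    print(fill_template(template, {"TONE": "friendly", "TEAM": "the QA team"}))
    # → Write a friendly email inviting the QA team to a team-building event on [DATE].
```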

### Test prompts with multiple models

GladAItors, are you ready? Throw GPT-4 into PromptPerfect's Arena against other models like Claude and StableLM to see who wins the slugfest. For added fun, introduce some sibling-on-sibling rivalry by pitting GPT-4 against its brothers ChatGPT and the venerable GPT-3 and see who comes out on top.

### Bulk optimize from CSV

Nobody wants to sit in front of a screen, copying, pasting, and clicking all day. With bulk optimize you can fire and forget when it comes to prompt optimization.

### API

With PromptPerfect's API you can skip the web interface and use cURL, JavaScript, or Python instead, either for solo or batch jobs.

We'd include a video, but it's not exactly an engaging visual experience...

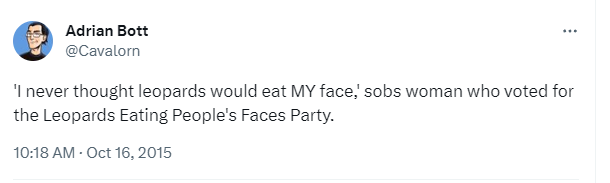
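For batch jobs, the general pattern is to read prompts from a CSV file and prepare one request per row. The sketch below builds the requests but does not send them. The endpoint URL, the API-key header name, the `data`/`prompt`/`targetModel` payload fields, and the `prompt` CSV column are all hypothetical placeholders; take the real request format from PromptPerfect's API documentation before sending anything.

```python
import csv
import io
import json
import urllib.request
from typing import Dict, List

# Hypothetical placeholders: replace with the values from the official API docs.
API_URL = "https://example.invalid/optimize"
API_KEY_HEADER = "x-api-key"
TARGET_MODEL = "chatgpt"


def payloads_from_csv(csv_text: str, column: str = "prompt") -> List[Dict]:
    """Turn each non-empty cell in `column` into a request payload."""
    payloads = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        prompt = (row.get(column) or "").strip()
        if prompt:  # skip rows without a prompt
            payloads.append({"data": {"prompt": prompt, "targetModel": TARGET_MODEL}})
    return payloads


def build_request(payload: Dict, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for one payload."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", API_KEY_HEADER: api_key},
        method="POST",
    )


csv_text = "prompt\nTell me a joke.\n\nWrite a poem about the beauty of nature.\n"
requests_to_send = [build_request(p, "YOUR_KEY") for p in payloads_from_csv(csv_text)]
# Once the placeholders are real, each request could be sent with
# urllib.request.urlopen(req) and its JSON response parsed.
```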

## Your future in prompt engineering

If you want to stay on top of the game, you need best-in-class tools. Use PromptPerfect for optimizing, streamlining, and testing out your prompts, and stand out from the huge crowd of other prompt engineers. Don't let a leopard eat your face.

## What's next?

Simply visit PromptPerfect's website to sign up and get started. Want us to sweeten the pot? Use the voucher code PromptEngineering to get 25% off your first-time purchases before June 26, 2023 (CET).

Want support? Check out the Discord, and stay tuned to this blog for a walkthrough of using PromptPerfect for real-world tasks.