In today's world of multimodal AI, the ability to efficiently query and retrieve relevant information from massive amounts of data is crucial. This is especially true when it comes to dealing with embedding data, which is common in many fields including deep learning, computer vision, and natural language processing. Vector databases offer an effective solution to this challenge.

Among various vector databases in the market, we are excited to introduce our vectordb - a Python vector database tailored to your needs, no more, no less.

What is a vector database?

Vector databases serve as repositories for embeddings, which capture semantic similarity among disparate objects. They support similarity search across many multimodal data types. By supplying relevant context and enriching generation results, vector databases improve the performance and utility of large language models (LLMs), which underscores their role in data science and machine learning applications.
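To make "similarity search over embeddings" concrete, here is a minimal sketch in plain Python. It is not how vectordb is implemented: the three-dimensional vectors, the `embeddings` dictionary, and the `most_similar` helper are all invented for illustration, and cosine similarity is just one common choice of similarity measure.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy "embeddings"; the values are made up for this example.
embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "truck": [0.0, 0.2, 0.9],
}

def most_similar(query, k=2):
    """Rank stored items by cosine similarity to the query, best first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in embeddings.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

ranking = most_similar([1.0, 0.1, 0.0])
print(ranking)
```

Objects whose embeddings point in a similar direction rank near the top, which is the property a vector database exploits at much larger scale.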

What is VectorDB?

vectordb is a Python library designed to manage, query, and retrieve high-dimensional vector data efficiently. It provides an easy-to-use API that lets you work with vector data just as you work with regular Python objects. With vectordb, you can perform CRUD operations (Create, Read, Update, Delete) on your vector data and run nearest neighbor searches based on vector similarity.

Why do we need VectorDB?

Navigating the intersection of AI and databases, vectordb emerges as a Pythonic vector database, providing a balance of functionality and simplicity.

Boasting a comprehensive suite of CRUD operations, vectordb also provides robust scalability with sharding and replication features. It is deployable across various environments, including local, on-premise, or cloud setups, ensuring adaptability to your project requirements.

Embodying the Pythonic principle of delivering exactly what's needed and no more, vectordb avoids over-engineering. Its lean yet powerful design makes it an efficient tool for managing your vector data, satisfying both simplicity and functionality demands.

In a nutshell, vectordb is a comprehensive and adaptable solution for vector data management challenges, seamlessly integrating into your data workflow.

Underlying technologies of VectorDB

The strength of vectordb lies in the combined power of two groundbreaking technologies: Jina and DocArray.

Jina transitions smoothly from local deployment to advanced orchestration frameworks, including Docker-Compose, Kubernetes, and the Jina AI Cloud. It supports any data type, any mainstream deep learning framework, and any protocol, thus offering a highly adaptable solution.

For high-performance microservices, Jina provides easy scalability, duplex client-server streaming, and async/non-blocking data processing over dynamic flows. Its integration with Docker containers through Executor Hub, observability via OpenTelemetry/Prometheus, and rapid Kubernetes/Docker-Compose deployment make it an indispensable part of vectordb.

DocArray supports various vector databases such as Weaviate, Qdrant, ElasticSearch, Redis, and HNSWLib. It offers native support for NumPy, PyTorch, and TensorFlow, providing flexibility specifically for model training scenarios. Being based on Pydantic, DocArray is instantly compatible with web and microservice frameworks like FastAPI and Jina, allowing data transmission as JSON over HTTP or as Protobuf over gRPC.

The magic of Jina and DocArray in VectorDB

vectordb leverages the retrieval capabilities of DocArray and the scalable, reliable serving abilities of Jina to create a powerful and user-friendly vector database. DocArray drives the vector search logic, making it fast and efficient, while Jina ensures scalable and reliable index serving. This harmonious fusion delivers a robust yet approachable vector database experience - that's the essence of vectordb.

The mission of VectorDB

"A Python vector database you just need - no more, no less." This is the mantra we uphold when designing and building vectordb. Our mission is to provide a vector database solution that is intuitive to use, adaptable to various use cases, yet without unnecessary complications.

Get started

    pip install vectordb

Use VectorDB locally

- Kick things off by defining a Document schema with the DocArray dataclass syntax:

    from docarray import BaseDoc
    from docarray.typing import NdArray

    class ToyDoc(BaseDoc):
        text: str = ''
        embedding: NdArray[128]

- Opt for a pre-built database (like `InMemoryExactNNVectorDB` or `HNSWVectorDB`), and apply the schema:

    from docarray import DocList
    import numpy as np
    from vectordb import InMemoryExactNNVectorDB, HNSWVectorDB

    # Specify your workspace path
    db = InMemoryExactNNVectorDB[ToyDoc](workspace='./workspace_path')

    # Index a list of documents with random embeddings
    doc_list = [ToyDoc(text=f'toy doc {i}', embedding=np.random.rand(128)) for i in range(1000)]
    db.index(inputs=DocList[ToyDoc](doc_list))

    # Perform a search query
    query = ToyDoc(text='query', embedding=np.random.rand(128))
    results = db.search(inputs=DocList[ToyDoc]([query]), limit=10)

    # Print out the matches
    for m in results[0].matches:
        print(m)

Since we issued a single query, results contains only one element. The nearest neighbour search results are conveniently stored in the .matches attribute.

Use vectordb as a service

vectordb can be served as a service over the gRPC, HTTP, and WebSocket communication protocols.

Server Side

On the server side, you would start the service as follows:

    with db.serve(protocol='grpc', port=12345, replicas=1, shards=1) as service:
        service.block()

This starts vectordb as a service on port 12345, using the gRPC protocol with 1 replica and 1 shard. The call to service.block() keeps the service running until it is stopped.

Client Side

On the client side, you can access the service with the following commands:

    from vectordb import Client

    # Instantiate a client connected to the server.
    # In practice, replace 0.0.0.0 with the server IP address.
    client = Client[ToyDoc](address='grpc://0.0.0.0:12345')

    # Perform a search query
    results = client.search(inputs=DocList[ToyDoc]([query]), limit=10)

This allows you to perform a search query, receiving the results directly from the remote vectordb service.
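The client address packs the protocol, host, and port chosen on the server side into one URL-style string. As a rough illustration of how such an address decomposes, the sketch below uses only the Python standard library. The `parse_address` helper is hypothetical and is not part of vectordb.

```python
from urllib.parse import urlsplit

def parse_address(address):
    """Split a URL-style service address into its parts (illustrative helper)."""
    parts = urlsplit(address)
    return {"protocol": parts.scheme, "host": parts.hostname, "port": parts.port}

endpoint = parse_address("grpc://0.0.0.0:12345")
print(endpoint)
```

The scheme must match the protocol the server was started with, and the port must match the `port` argument passed to `db.serve`.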

Hosting VectorDB on Jina AI Cloud

You can seamlessly deploy your vectordb instance to Jina AI Cloud, which ensures access to your database from any location.

Start by embedding your database instance or class into a Python file:

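    # example.py
    from docarray import BaseDoc
    from docarray.typing import NdArray
    from vectordb import InMemoryExactNNVectorDB

    # Schema of the documents stored in the database (same as the ToyDoc defined earlier)
    class ToyDoc(BaseDoc):
        text: str = ''
        embedding: NdArray[128]

    # Notice how `db` is the instance that we want to serve
    db = InMemoryExactNNVectorDB[ToyDoc](workspace='./vectordb')

    if __name__ == '__main__':
        # IMPORTANT: make sure to protect this part of the code using a __main__ guard
        with db.serve() as service:
            service.block()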

Next, follow these steps to deploy your instance:

- If you haven't already, sign up for a Jina AI Cloud account.

- Use the jc command line to log in to your Jina AI Cloud account:

    jc login

- Deploy your instance:

    vectordb deploy --db example:db

Connect from the client

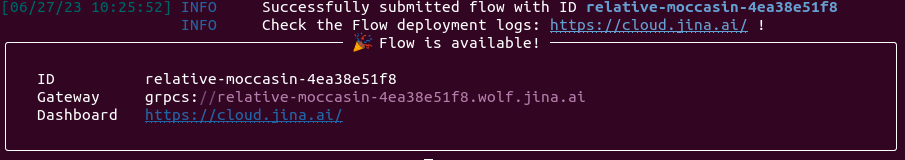

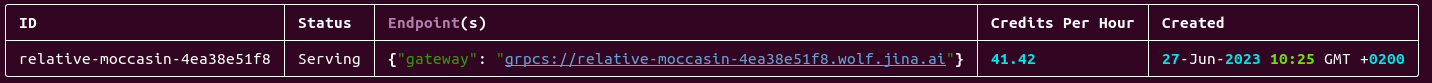

After deployment, use the vectordb Client to access the assigned endpoint:

    from vectordb import Client

    # Replace the ID with the ID of your deployed DB as shown in the screenshot above
    c = Client(address='grpcs://ID.wolf.jina.ai')

Manage your deployed instances using jcloud

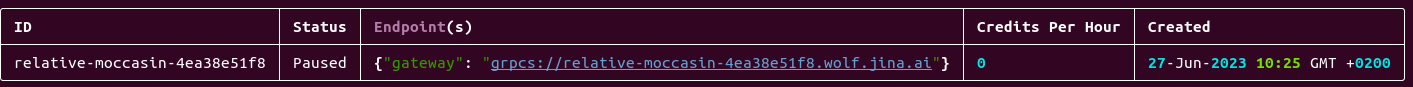

You can then list, pause, resume, or delete your deployed DBs with the jcloud command (jc is its short alias):

    jcloud list ID
    jcloud pause ID
    jcloud resume ID
    jcloud remove ID

Advanced Topics

CRUD support

Both the local library usage and the client-server interactions in vectordb share the same API. This provides index, search, update, and delete functionalities:

- index: Accepts a `DocList` to index.

- search: Takes a `DocList` of batched queries or a single `BaseDoc` as a single query. It returns either single or multiple results, each with `matches` and `scores` attributes sorted by relevance.

- delete: Accepts a `DocList` of documents to remove from the index. Only the `id` attribute is necessary, so make sure to track the indexed IDs if you need to delete documents.

- update: Accepts a `DocList` of documents to update in the index. The update operation replaces the indexed document that has the same `id` with the attributes and payload from the input documents.
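The semantics of these four operations can be sketched with a toy in-memory store. This is an illustration under stated assumptions, not vectordb's implementation: `ToyVectorStore` is a hypothetical class, documents are plain dictionaries rather than DocArray objects, Euclidean distance stands in for whatever metric the real backend uses, and silently skipping updates for unknown ids is a choice made for this sketch only.

```python
import math

class ToyVectorStore:
    """Hypothetical id-keyed store that mimics index/search/update/delete."""

    def __init__(self):
        self._docs = {}  # id -> document dict

    def index(self, docs):
        for doc in docs:
            self._docs[doc["id"]] = doc

    def update(self, docs):
        # Replace documents that are already indexed under the same id.
        for doc in docs:
            if doc["id"] in self._docs:
                self._docs[doc["id"]] = doc

    def delete(self, docs):
        # Only the id is needed to remove a document.
        for doc in docs:
            self._docs.pop(doc["id"], None)

    def search(self, query_embedding, limit=10):
        # Exhaustive scan: score every document, closest first.
        scored = sorted(
            ((math.dist(query_embedding, d["embedding"]), d) for d in self._docs.values()),
            key=lambda pair: pair[0],
        )[:limit]
        matches = [doc for _, doc in scored]
        scores = [score for score, _ in scored]
        return matches, scores

store = ToyVectorStore()
store.index([
    {"id": "a", "text": "first", "embedding": [0.0, 0.0]},
    {"id": "b", "text": "second", "embedding": [1.0, 1.0]},
])
store.update([{"id": "b", "text": "second (edited)", "embedding": [0.1, 0.1]}])
store.delete([{"id": "a"}])
matches, scores = store.search([0.0, 0.0], limit=5)
print([m["text"] for m in matches])
```

Because both delete and update are keyed by `id`, keeping track of the ids you indexed is what makes later maintenance possible.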

Service endpoint configuration

You can serve vectordb and access it from a client with the following parameters:

- protocol: The serving protocol. It can be `gRPC`, `HTTP`, `websocket` or a combination of them, provided as a list. Default is `gRPC`.

- port: The service access port. Can be a list of ports for each provided protocol. Default is 8081.

- workspace: The path where the VectorDB persists required data. Default is '.' (current directory).
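When several protocols are served at once, each one needs its own port. The sketch below shows one way to pair the two lists and catch mismatches early. `normalize_endpoints` is a hypothetical helper, not a vectordb function, and the strict one-port-per-protocol check is an assumption of this sketch rather than documented vectordb behavior.

```python
def normalize_endpoints(protocol="grpc", port=8081):
    """Pair each protocol with a port (illustrative helper, not part of vectordb)."""
    protocols = [protocol] if isinstance(protocol, str) else list(protocol)
    ports = [port] if isinstance(port, int) else list(port)

    allowed = {"grpc", "http", "websocket"}
    normalized = [p.lower() for p in protocols]
    unknown = [p for p in normalized if p not in allowed]
    if unknown:
        raise ValueError(f"unsupported protocol(s): {unknown}")
    if len(ports) != len(normalized):
        raise ValueError("provide exactly one port per protocol")
    return dict(zip(normalized, ports))

single = normalize_endpoints()
multi = normalize_endpoints(["gRPC", "HTTP"], [12345, 8080])
print(single, multi)
```

The resulting mapping could then be unpacked into the `protocol` and `port` arguments when serving the database.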

Scaling your DB

You can set two scaling parameters when serving or deploying your Vector Databases with vectordb:

- Shards: The number of data shards. This improves latency, as `vectordb` ensures Documents are indexed in only one of the shards. Search requests are sent to all shards and results are merged.

- Replicas: The number of DB replicas. `vectordb` uses the RAFT algorithm to sync the index between replicas of each shard. This increases service availability and search throughput, as multiple replicas can respond in parallel to more search requests while allowing CRUD operations.

Note: In JCloud deployments, the number of replicas is set to 1. We're working on enabling replication in the cloud.
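The sharding behavior described above (each document lives in exactly one shard, every search fans out to all shards, and the partial results are merged) can be sketched in a few lines of plain Python. The hash-based shard assignment, the `NUM_SHARDS` constant, and all function names are assumptions made for this illustration; the text does not specify how vectordb chooses a shard.

```python
import heapq
import math
from zlib import crc32

NUM_SHARDS = 3
shards = [dict() for _ in range(NUM_SHARDS)]  # each shard maps id -> embedding

def shard_for(doc_id):
    # Stable hash, so a given id always lands in the same single shard.
    return crc32(doc_id.encode("utf-8")) % NUM_SHARDS

def index(doc_id, embedding):
    shards[shard_for(doc_id)][doc_id] = embedding

def search_shard(shard, query, limit):
    # Each shard only scans its own subset of the data.
    scored = [(math.dist(query, emb), doc_id) for doc_id, emb in shard.items()]
    return heapq.nsmallest(limit, scored)

def search(query, limit=3):
    # Fan out to every shard, then merge the partial top-k lists.
    partial = [search_shard(shard, query, limit) for shard in shards]
    return heapq.nsmallest(limit, (hit for hits in partial for hit in hits))

for i in range(10):
    index(f"doc-{i}", [float(i), 0.0])

top_hits = search([2.2, 0.0])
print(top_hits)
```

Each shard scans only a fraction of the data, which is where the latency benefit comes from; the merge step keeps the final answer identical to a search over the full collection.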

Vector search configuration

Here are the parameters for each VectorDB type:

InMemoryExactNNVectorDB

This database performs exhaustive search on embeddings and has limited configuration settings:

- workspace: The folder where required data is persisted.

    InMemoryExactNNVectorDB[MyDoc](workspace='./vectordb')
    InMemoryExactNNVectorDB[MyDoc].serve(workspace='./vectordb')

HNSWVectorDB

This database employs the HNSW (Hierarchical Navigable Small World) algorithm from HNSWLib for Approximate Nearest Neighbor search. It provides several configuration options:

- workspace: Specifies the directory where required data is stored and persisted.

Additionally, HNSWVectorDB offers a set of configurations that allow tuning the performance and accuracy of the Nearest Neighbor search algorithm. Detailed descriptions of these configurations can be found in the HNSWLib README:

- space: Specifies the similarity metric used for the space (options are "l2", "ip", or "cosine"). The default is "l2".

- max_elements: Sets the initial capacity of the index, which can be increased dynamically. The default is 1024.

- ef_construction: This parameter controls the speed/accuracy trade-off during index construction. The default is 200.

- ef: This parameter controls the query time/accuracy trade-off. The default is 10.

- M: This parameter defines the maximum number of outgoing connections in the graph. The default is 16.

- allow_replace_deleted: If set to `True`, this allows replacement of deleted elements with newly added ones. The default is `False`.

- num_threads: This sets the default number of threads to be used during `index` and `search` operations. The default is 1.
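To give a feel for the three `space` options, the sketch below computes each distance in plain Python, following the definitions given in the HNSWLib README as best I recall them: squared Euclidean distance for "l2", one minus the inner product for "ip", and one minus cosine similarity for "cosine". The function names and the `SPACES` mapping are invented for this example, and vectordb may present scores differently.

```python
import math

def l2_distance(a, b):
    # "l2": squared Euclidean distance (no square root is taken).
    return sum((x - y) ** 2 for x, y in zip(a, b))

def ip_distance(a, b):
    # "ip": one minus the inner product.
    return 1.0 - sum(x * y for x, y in zip(a, b))

def cosine_distance(a, b):
    # "cosine": one minus the cosine similarity.
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return 1.0 - dot / norms

SPACES = {"l2": l2_distance, "ip": ip_distance, "cosine": cosine_distance}

a, b = [1.0, 0.0], [0.0, 1.0]
for name, distance in SPACES.items():
    print(name, distance(a, b))
```

In all three spaces a smaller value means a closer match. "cosine" ignores vector length, while "l2" and "ip" are sensitive to it, so the right choice depends on how your embeddings were trained.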

Command line interface

vectordb includes a simple CLI for serving and deploying your database:

| Description | Command |
|---|---|
| Serve your DB locally | vectordb serve --db example:db |
| Deploy your DB on Jina AI Cloud | vectordb deploy --db example:db |
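The `--db example:db` argument reads as "the `db` object inside the `example` module", matching the example.py file shown earlier. The sketch below shows how a `module:attribute` string of this shape can be resolved with the standard library. It is an assumption about the general pattern, not a description of vectordb's actual CLI code, and `resolve_target` is a hypothetical name.

```python
import importlib

def resolve_target(spec):
    """Resolve a 'module:attribute' string to the Python object it names."""
    module_name, sep, attr_name = spec.partition(":")
    if not sep or not module_name or not attr_name:
        raise ValueError(f"expected 'module:attribute', got {spec!r}")
    module = importlib.import_module(module_name)
    return getattr(module, attr_name)

# Demonstrated with a standard-library module so the snippet runs anywhere.
value = resolve_target("math:pi")
print(value)
```

Because the module is imported to find the object, any serving code inside it runs on import unless it sits behind a `__main__` guard, which is consistent with the guard recommended in example.py.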

Features

User-friendly Interface: With vectordb, simplicity is key. Its intuitive interface is designed to accommodate users across varying levels of expertise.

Minimalistic Design: vectordb packs all the essentials, with no unnecessary complexity. It ensures a seamless transition from local to server and cloud deployment.

Full CRUD Support: From indexing and searching to updating and deleting, vectordb covers the entire spectrum of CRUD operations.

DB as a Service: Serve your databases over gRPC, HTTP, and WebSocket with vectordb, and run insertion or search operations against them efficiently.

Scalability: Experience the raw power of vectordb's deployment capabilities, including robust scalability features like sharding and replication. Improve your service latency with sharding, while replication enhances availability and throughput.

Cloud Deployment: Deploying your service in the cloud is a breeze with Jina AI Cloud. More deployment options are coming soon!

Serverless Capability: vectordb can be deployed in a serverless mode in the cloud, ensuring optimal resource utilization and data availability as per your needs.

Multiple Search Algorithms: vectordb offers both exact and Approximate Nearest Neighbor (ANN) search implementations. Here are the current offerings, with more integrations on the horizon:

- InMemoryExactNNVectorDB (Exact NN Search): Implements a simple exhaustive nearest neighbor search.

- HNSWVectorDB (based on HNSW): Uses HNSWLib for approximate nearest neighbor search.

Roadmap

The future of Vector Database looks bright, and we have ambitious plans! Here's a sneak peek into the features we're currently developing:

- More ANN Search Algorithms: Our goal is to support an even wider range of ANN search algorithms.

- Enhanced Filtering Capabilities: We're working on enhancing our ANN Search solutions to support advanced filtering.

- Customizability: We aim to make `vectordb` highly customizable, allowing Python developers to tailor its behavior to their specific needs with ease.

- Expanding Serverless Capacity: We're striving to enhance the serverless capacity of `vectordb` in the cloud. While we currently support scaling between 0 and 1 replica, our goal is to extend this to 0 to N replicas.

- Expanded Deployment Options: We're actively working on facilitating the deployment of `vectordb` across various cloud platforms, with a broad range of options.

Contributing

The VectorDB project is backed by Jina AI and licensed under Apache-2.0. Contributions from the community are greatly appreciated! If you have an idea for a new feature or an improvement, we would love to hear from you. We're always looking for ways to make vectordb more user-friendly and effective.