tagSe-what now?

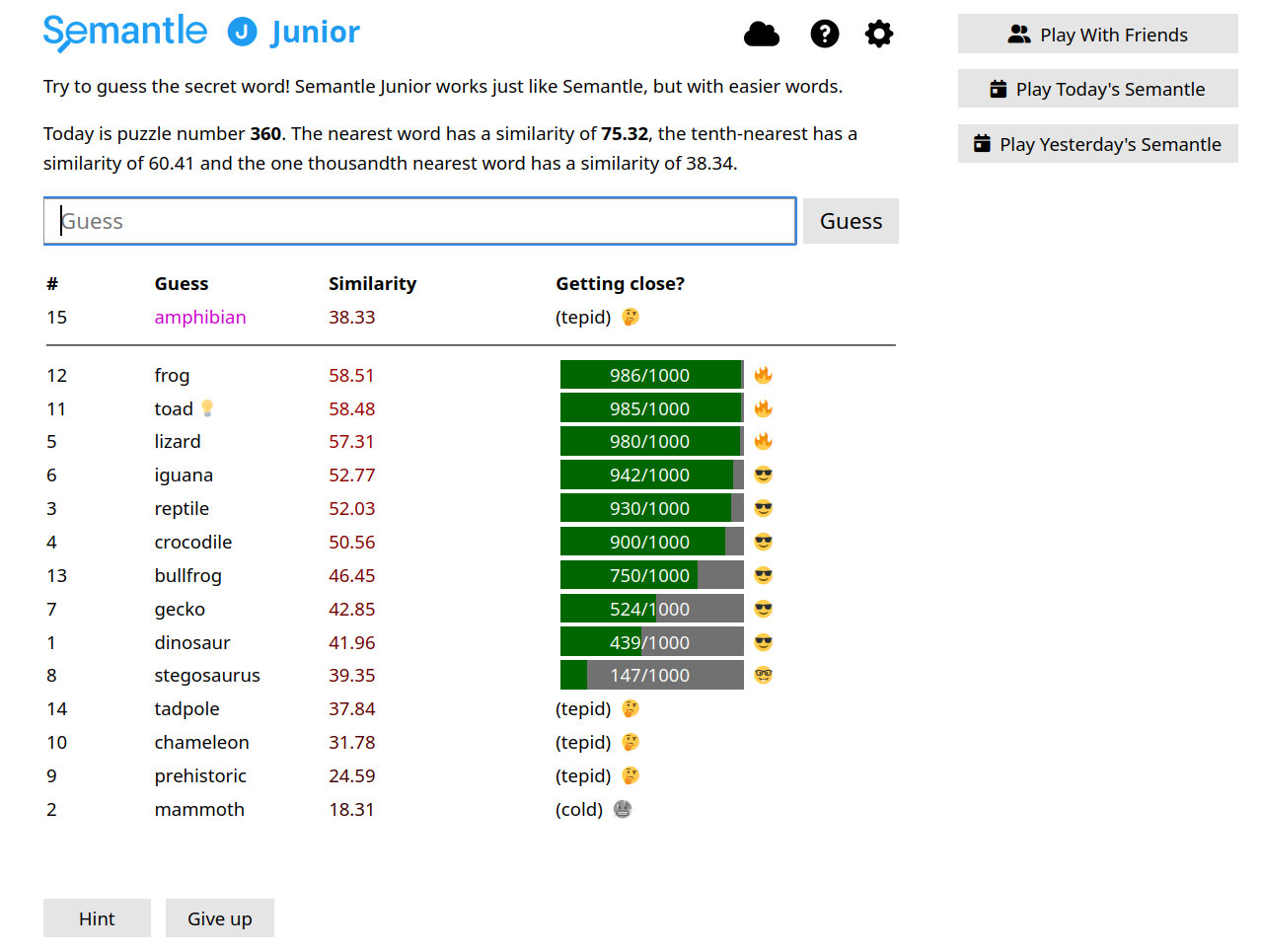

Semantle is a game similar to Wordle, but rather than guessing based on the word's letters, you guess based on the word's semantics.

We'll build our own simple version using DocArray v2 and OpenAI’s large language models, based on the top 10,000 most common words in English.

tagPlay with it yourself

To test it out yourself, you can install the version from the [repo]. It's a little bit enhanced, but all the relevant stuff is covered in this tutorial.

git clone https://github.com/alexcg1/semantle-docarray

tagUnderstanding the game

In our implementation of Semantle:

- A loop is started where the user has to guess the word.

- As soon as the guess matches the target word, the loop ends and the game displays the guess and hint counts to the user.

- If the user asks for a hint, we use GPT-4 to generate a hint from the target word.

- If the user doesn't get it right and doesn't ask for a hint, we fall back to

else, where: - The guess counter increments.

- The distance is calculated and presented to the user.

Keep reading to see how we build it all up!

tagGetting started

tagInstall requirements

To build our game, we'll need a few dependencies:

docarray- our data structure, ideal for representing multimodal documents and embeddings.openai- we'll use their models to generate embeddings for the target word, guess words, and hints.

pip install docarray openai

tagGet the list of words

wget https://raw.githubusercontent.com/first20hours/google-10000-english/master/google-10000-english.txt

Alternatively, for an easier version you can use a list of just fruits:

wget https://raw.githubusercontent.com/alexcg1/semantle-docarray/main/wordlists/fruits.txt

tagSet OpenAI API key

You'll need an OpenAI API key to use the models. You use this command from the CLI:

export OPENAI_API_KEY=<your_key_here>

tagLet’s write some code

tagDefining our data structure

Since we’re just working with text and embeddings, we’ll define a data structure with just a text field and an embedding field by subclassing from BaseDoc. Let’s call that data structure WordDoc. Not that kind of Word doc. Don't worry. No Clippy here).

We'll create a file called app.py:

from docarray import Doclist, BaseDoc

class WordDoc(BaseDoc):

text: str = ''

embedding: NdArray[1536] | None

Here we've defined our .embedding attribute as an NdArray with a length of 1,536, which is the length of the embeddings we'll get later from OpenAI. We didn't need to define the .text attribute, since that's already provided by TextDoc.

That means we have two useful attributes to work with:

text: the string of the word.embedding: theNdArrayof the vector embedding of the word.

tagChoosing our target word

We'll randomly choose one of those words from our list and encode it into a WordDoc:

word_list = 'google-10000-english.txt' # or fruits.txt

def wordlist_to_doclist(filename: str):

docs = DocList[TextDoc]()

with open(filename, 'r') as file:

for line in file:

doc = TextDoc(text=line.lower().strip())

docs.append(doc)

return docs

all_words = wordlist_to_doclist(word_list)

target_word = random.choice(all_words)

tagGetting the embedding for our target word

We need to generate an embedding for our target word so that we can give the user a score for their guess based on how near or far it is from our target word.

import openai

openai.api_key = os.environ['OPENAI_API_KEY']

def gpt_encode(doc: WordDoc, model_name: str='text-embedding-ada-002'):

response = openai.Embedding.create(input=doc.text, model=model_name)

doc.embedding = response['data'][0]['embedding']

return doc

gpt_encode(target_word)

ada-002 model here since we're just generating the embedding for a simple string. Using GPT-4 would be like using a nuclear bomb to crack a nut.tagGetting a user's guess

A user has a (currently) infinite number of guesses:

guess = WordDoc(text='')

guess_counter = 0

hint_counter = 0

while guess.text != target_word.text:

guess = WordDoc(text=input('What is your guess? '))

tagResponding to a wrong guess

If the user's input doesn't match the target word, we'll encode the input into vectors using our function from above:

gpt_encode(guess)

Then we work out the distance of the vectors of both the guess and the target word:

distance = cosine(target_word.embedding, guess.embedding)

if guess.text != target_word.text:

print(f'Try again. Your distance is {round(distance, 2)}.')

The score corresponds to the distance between the guess and the target, so an exact match is 0, while the most distant match could be up to 1.

while loop checks if the guess matches the target. If not, the user gets another guess.tagResponding to a /hint request

We can re-arrange the above into an if block:

while guess.text != target_word.text:

guess = WordDoc(text=input('What is your guess? '))

if guess.text.lower() == '/hint':

hint_counter += 1

# give hint

else:

# check if guess is right or wrong

For the hint, we once again use OpenAI, but this time with GPT. This is because generating a hint for a word is way more complex than just generating an embedding, and requires decent prompting:

import openai

def get_hint(doc, model_name='text-davinci-003'):

response = openai.Completion.create(

model=model_name,

prompt=f'Create a crossword clue for the word "{doc.text}". You will give a brief dictionary definition of the word, but not say the word itself.',

max_tokens=100,

temperature=0.7,

)

hint = response.choices[0].text.strip()

return hint

tagResponding to a correct guess

This is handled by the while loop itself. As soon as the text of the guess is equal to that of the target word, the loop ends and the user is given their score.

while guess.text != target_word.text:

guess = WordDoc(text=input('What is your guess? '))

if guess.text.lower() == '/hint':

hint_counter +=1

print(get_hint(target_word))

else:

guess_counter += 1

gpt_encode(guess)

distance = cosine(target_word.embedding, guess.embedding)

if guess.text != target_word.text:

print(

f'Try again. Your distance is {round(distance, 2)}.'

)

print(

f'Congratulations! You guessed in {guess_counter} turn(s) and {hint_counter} hint(s).'

)

tagNext steps

DocArray is way more than a toy just for making games. You can use it to build search engines, chatbots, image generation APIs, music search (ala Shazam) and much more.

How would you improve the game? Or what (actually useful) thing would you build with DocArray? Join our Discord community and let us know, or make your first contribution today!